How to Handle Pagination in Web Scraping using Python

Web scraping is an essential tool for developers, data analysts, and SEO professionals. Whether it's gathering competitor insights or compiling datasets, scraping often involves navigating through multiple pages of data—a process known as pagination. But as useful as pagination is for user experience, it can pose significant challenges in web scraping.

If you've struggled with collecting data from multiple-page websites or want to master the art of handling pagination effectively, you're in the right place. This guide will walk you through the fundamentals of pagination in web scraping, the challenges involved, and actionable steps for efficiently handling it.

What is Pagination?

Pagination is a technique used by websites to divide content across multiple pages. Rather than loading all data at once, websites use pagination to improve loading speeds and enhance the user experience. For web scrapers, it presents an additional challenge—looping through multiple pages to retrieve all the data.

Common Methods of Pagination

- Numbered Pagination: This is the standard approach where pages are accessible via numbered links, often accompanied by "Next" and "Previous" buttons. Examples can be found on e-commerce websites, blogs, or search engine result pages.

- Infinite Scrolling: This type dynamically loads data as the user scrolls down, common in websites like social media feeds or online news portals (e.g., Instagram or BuzzFeed).

- Load More Button: Instead of separate pages or automatic infinite scrolling, users click a Load More button to fetch more content (seen on websites like Pinterest).

Understanding the type of pagination used is crucial for designing an effective web scraper.

Challenges of Pagination in Web Scraping

While pagination organizes content for users, it complicates life for web scrapers. Here are the most common issues you’ll encounter:

- Dynamic Loading: Websites using JavaScript to load data (e.g., infinite scrolling) can be difficult to scrape since rendering happens on the client side, not in the raw HTML source.

- Session Dependencies: Some websites track sessions to limit access and may block your scraper after repetitive requests.

- Complex Navigation: Pagination isn't always linear. Some websites use hidden or less intuitive mechanisms to create pagination URLs.

- Data Duplication: Failure to handle pagination correctly can result in duplicated or incomplete data.
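One way to guard against the duplication problem is to track a stable identifier for every record as pages are collected. The sketch below is illustrative only: the `dedupe_records` helper, the `url` key, and the sample records are assumptions made for this example, not part of any library or of the sites discussed in this guide.

```python
from typing import Dict, Iterable, Iterator, List


def dedupe_records(
    pages: Iterable[List[Dict[str, str]]], key: str = "url"
) -> Iterator[Dict[str, str]]:
    """Yield each record once, in first-seen order, across all pages."""
    seen = set()
    for page in pages:
        for record in page:
            identifier = record[key]
            if identifier in seen:
                continue  # already collected on an earlier page
            seen.add(identifier)
            yield record


# Hypothetical data: the second page repeats the last item of the first page.
page_1 = [{"url": "/book/1", "title": "A"}, {"url": "/book/2", "title": "B"}]
page_2 = [{"url": "/book/2", "title": "B"}, {"url": "/book/3", "title": "C"}]

unique = list(dedupe_records([page_1, page_2]))
print([record["url"] for record in unique])  # ['/book/1', '/book/2', '/book/3']
```

Keying on a URL or product ID rather than on the whole record tends to be more robust, since fields such as price or stock can change between requests.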

Prerequisites

To follow this guide and implement the pagination scraping examples, ensure you have the following Python libraries installed:

- Requests: For handling HTTP requests in numbered pagination.

- BeautifulSoup (bs4): For parsing and extracting data from HTML content in numbered pagination.

- Playwright: For rendering JavaScript and interacting with dynamic content in infinite scrolling and "Load More" button examples.

pip install requests beautifulsoup4 playwright

playwright install

Handle Numbered Pagination

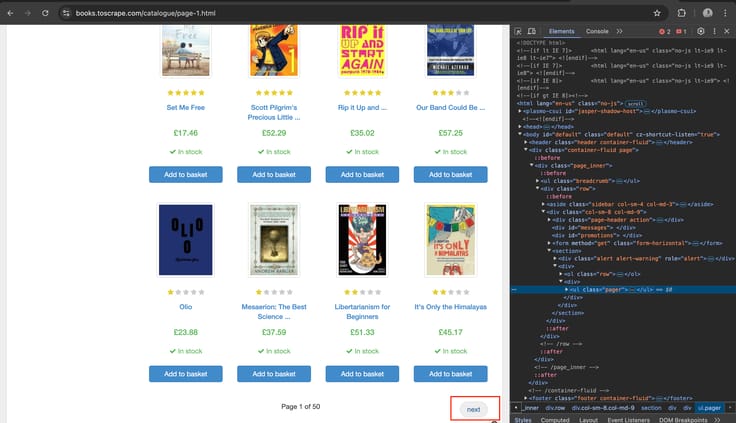

To scrape data from a website using numbered pagination, the goal is to navigate through each page sequentially until no further pages exist. In the example website, Books to Scrape, the pagination mechanism includes a "Next" button within a <ul> element of class pager. The Next button contains an <a> tag with an href attribute pointing to the URL of the next page.

Here’s how this button looks on the website along with its HTML structure:

The scraping logic is as follows:

- Start from the first catalogue page (https://books.toscrape.com/catalogue/page-1.html).

- Parse the current page's content using BeautifulSoup to extract the data of interest.

- Check for the presence of the <li> element with the class next. If it exists, extract the href attribute of the nested <a> tag to determine the next page's URL.

- Join the next page's relative URL with the base URL to form the absolute URL.

- Repeat the process until the Next button is no longer found, indicating the last page.

Below is the Python code that implements this logic using the requests library for HTTP requests and BeautifulSoup for parsing HTML content.

    import requests
    from bs4 import BeautifulSoup
    from urllib.parse import urljoin

    # Base URL of the website
    base_url = "https://books.toscrape.com/catalogue/"
    current_url = base_url + "page-1.html"

    while current_url:
        # Fetch the content of the current page
        response = requests.get(current_url)
        soup = BeautifulSoup(response.text, "html.parser")

        # Extract the desired data (e.g., book titles)
        books = soup.find_all("h3")
        for book in books:
            title = book.a["title"]  # Extract book title
            print(title)

        # Find the "Next" button
        next_button = soup.find("li", class_="next")
        if next_button:
            # Get the relative URL and form the absolute URL
            next_page = next_button.a["href"]
            current_url = urljoin(base_url, next_page)
        else:
            # No "Next" button found, end the loop
            current_url = None

This script starts at the first page, scrapes the book titles, and navigates to the next page until the Next button is no longer available. The urljoin function resolves the next page's relative URL against the base URL, producing an absolute URL that can be requested directly.
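To see why the choice of base matters, here is a small sketch of how urljoin resolves relative links. It assumes the "Next" href on a catalogue page is a bare file name such as page-2.html. The variable names are illustrative.

```python
from urllib.parse import urljoin

catalogue_url = "https://books.toscrape.com/catalogue/"
site_root = "https://books.toscrape.com/"

# Resolving against the catalogue directory keeps the link inside /catalogue/.
from_catalogue = urljoin(catalogue_url, "page-2.html")
print(from_catalogue)  # https://books.toscrape.com/catalogue/page-2.html

# Resolving against the page the link was found on gives the same directory.
from_page = urljoin("https://books.toscrape.com/catalogue/page-2.html", "page-3.html")
print(from_page)  # https://books.toscrape.com/catalogue/page-3.html

# Resolving against the site root drops the /catalogue/ segment.
from_root = urljoin(site_root, "page-3.html")
print(from_root)  # https://books.toscrape.com/page-3.html
```

Passing the URL of the page currently being parsed as the first argument is a common alternative, since relative links are defined relative to the document that contains them.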

Handle Infinite Scrolling

Infinite scrolling is a pagination method where additional content is loaded dynamically as the user scrolls down the page. This requires handling JavaScript rendering because the content is fetched and appended to the page dynamically, often through AJAX requests. Unlike numbered pagination, where URLs explicitly point to new pages, infinite scrolling relies on JavaScript to manage content loading.

For this reason, static libraries like requests cannot handle infinite scrolling because they do not execute JavaScript. Instead, we use libraries such as Playwright or Selenium that support JavaScript rendering. These libraries allow us to programmatically simulate user interactions like scrolling, enabling the loading of new content.

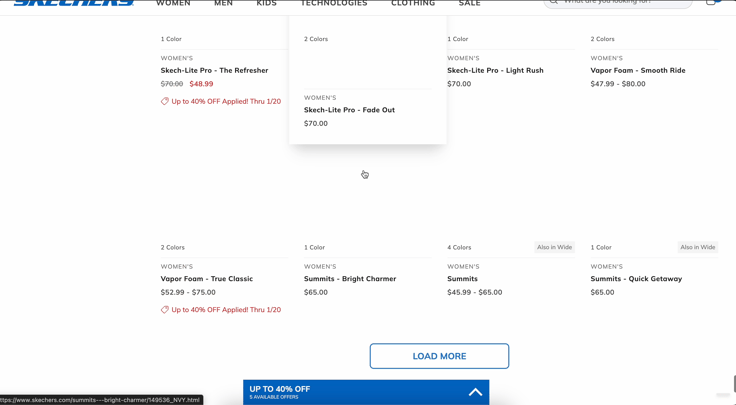

In the case of the Skechers website, infinite scrolling is triggered by scrolling to the bottom of the page. Even though there is a "Load More" button at the end of the page, the site loads more data as you scroll, without the button being clicked.

The scraping logic is as follows:

- Start by opening the target URL in a Playwright browser instance.

- Use a loop to scroll to the bottom of the page and wait for new content to load.

- Monitor the page for changes to ensure that additional content has been loaded.

- Stop scrolling once no new content is detected, or when a specific condition (e.g., max scroll attempts) is met.

Below is the Python code to demonstrate handling infinite scrolling with Playwright.

    from playwright.sync_api import sync_playwright

    # Target URL
    url = "https://www.skechers.com/women/shoes/athletic-sneakers/?start=0&sz=84"

    # Initialize Playwright
    with sync_playwright() as p:
        browser = p.chromium.launch(headless=False)  # Launch browser (set headless=True for no UI)
        page = browser.new_page()
        page.goto(url, wait_until="domcontentloaded")

        # Define a function to scroll to the bottom and wait for content to load
        def scroll_infinite(page, max_attempts=10):
            previous_height = 0
            attempts = 0
            while attempts < max_attempts:
                # Scroll to the bottom of the page
                page.evaluate("window.scrollTo(0, document.body.scrollHeight)")
                # Wait for new content to load
                page.wait_for_timeout(3000)  # Adjust timeout as needed
                # Check the new page height
                new_height = page.evaluate("document.body.scrollHeight")
                if new_height == previous_height:
                    # If height doesn't change, assume no new content is loading
                    break
                previous_height = new_height
                attempts += 1

        # Scroll through the page to trigger content loading
        scroll_infinite(page)

        # Close the browser
        browser.close()

This script demonstrates how to use Playwright to handle infinite scrolling. The scroll_infinite function simulates scrolling to the bottom of the page multiple times, pausing to allow new content to load. The script terminates when no additional content is detected or when the maximum number of scroll attempts is reached.

With this approach, the dynamically loaded content is rendered in the page and ready for extraction, up to the limit set by max_attempts.
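Page height is only a proxy for "new content arrived". A possible variation is to count the items themselves and to tolerate a slow round before giving up. The sketch below separates that stopping logic from the browser so it can be run without one. The `scroll_until_stable` helper, its `patience` parameter, and the `FakeFeed` stand-in are all assumptions made for illustration.

```python
from typing import Callable


def scroll_until_stable(
    scroll_once: Callable[[], None],
    count_items: Callable[[], int],
    max_attempts: int = 10,
    patience: int = 2,
) -> int:
    """Scroll until the item count stops growing for `patience` rounds in a row.

    Returns the highest item count observed.
    """
    last_count = count_items()
    unchanged = 0
    for _ in range(max_attempts):
        scroll_once()
        current = count_items()
        if current > last_count:
            last_count = current
            unchanged = 0  # progress was made, reset the counter
        else:
            unchanged += 1
            if unchanged >= patience:
                break  # several rounds without new items: assume the end
    return last_count


class FakeFeed:
    """Stand-in for a real page: the first three scrolls add 20 items each."""

    def __init__(self) -> None:
        self.items = 20
        self.scrolls = 0

    def scroll(self) -> None:
        self.scrolls += 1
        if self.scrolls <= 3:
            self.items += 20


feed = FakeFeed()
total = scroll_until_stable(feed.scroll, lambda: feed.items)
print(total, feed.scrolls)  # 80 5
```

With Playwright, `scroll_once` could wrap the scroll-and-wait calls from the script above, and `count_items` could return `page.locator(selector).count()`, where `selector` is whatever product-card selector you identify in DevTools for the target site.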

Handle "Load More" Button

"Load More" pagination dynamically appends new content to the existing page when the user clicks a button. This method is often powered by JavaScript and requires rendering capabilities to simulate user interaction with the "Load More" button.

Unlike infinite scrolling, where content loads automatically, "Load More" pagination requires programmatically clicking the button until it is no longer present or functional. Static libraries like requests usually cannot handle this because they do not execute JavaScript. For this task, we will use Playwright, which supports JavaScript rendering and allows us to interact with elements on the page.

The scraping logic for the ASOS website is as follows:

- Start by opening the target URL in a Playwright browser instance.

- Locate the "Load More" button using its HTML attributes (e.g., class name or data-auto-id).

- Click the button to load more products.

- Repeat the process until the button is no longer available or functional.

- Optionally, extract the dynamically loaded content at each iteration.

Below is the Python code to handle this type of pagination using Playwright.

    from playwright.sync_api import sync_playwright

    # Target URL
    url = "https://www.asos.com/men/new-in/new-in-clothing/cat/?cid=6993"

    # Initialize Playwright
    with sync_playwright() as p:
        browser = p.chromium.launch(headless=False)  # Launch browser (set headless=True for no UI)
        page = browser.new_page()
        page.goto(url)

        # Define a function to interact with the "Load More" button
        def load_more_content(page, max_clicks=10):
            clicks = 0
            while clicks < max_clicks:
                try:
                    # Locate the "Load More" button
                    load_more_button = page.locator('a[data-auto-id="loadMoreProducts"]')
                    if not load_more_button.is_visible():
                        print("No more content to load.")
                        break
                    # Click the "Load More" button
                    load_more_button.click()
                    # Wait for new content to load
                    page.wait_for_timeout(3000)  # Adjust timeout as needed
                    clicks += 1
                    print(f"Clicked 'Load More' button {clicks} time(s).")
                except Exception as e:
                    print(f"Error interacting with 'Load More' button: {e}")
                    break

        # Trigger loading more content
        load_more_content(page)

        # Close the browser
        browser.close()

Explanation

- Locating the Button: The locator() method is used to find the "Load More" button using the data-auto-id attribute (data-auto-id="loadMoreProducts").

- Clicking the Button: The click() method simulates a user click on the button.

- Waiting for Content: The script waits for a short timeout (3000 ms) to allow new content to load.

- Stopping Conditions: The loop stops when the "Load More" button is no longer visible or the maximum number of clicks (max_clicks) is reached.
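A fixed 3000 ms pause can be too long on a fast connection and too short on a slow one. One possible refinement is to poll until the number of items on the page has grown, with a timeout as a safety net. The sketch below is a generic polling helper, not a Playwright API. The `wait_for_growth` name, its parameters, and the sample counts are assumptions for illustration.

```python
import time
from typing import Callable


def wait_for_growth(
    count_items: Callable[[], int],
    previous_count: int,
    timeout_s: float = 10.0,
    poll_s: float = 0.25,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll until count_items() exceeds previous_count or the timeout expires.

    Returns True if the count grew, False otherwise.
    """
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if count_items() > previous_count:
            return True
        sleep(poll_s)
    # One last check in case content arrived during the final sleep.
    return count_items() > previous_count


# Simulated page: the count stays at 24 for two polls, then jumps to 48.
counts = iter([24, 24, 48])
grew = wait_for_growth(lambda: next(counts), previous_count=24, sleep=lambda s: None)
print(grew)  # True

# A page that never loads more items times out and reports False.
stalled = wait_for_growth(lambda: 24, previous_count=24, timeout_s=0.05, poll_s=0.01)
print(stalled)  # False
```

In the Playwright script, `count_items` could return `page.locator(selector).count()` for a product selector of your choosing, and `sleep` could be `lambda s: page.wait_for_timeout(s * 1000)` so that waiting goes through Playwright rather than blocking with `time.sleep`. A False result is then another signal that the button has stopped producing content.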

Conclusion

Handling pagination is a fundamental skill in web scraping, as most websites distribute their data across multiple pages to improve performance and user experience. In this guide, we explored three common types of pagination—numbered pagination, infinite scrolling, and load more buttons—and demonstrated how to handle each using Python.

- Numbered Pagination: Using static scraping libraries like requests and BeautifulSoup, we navigated sequentially through pages by analyzing the URL structure and detecting "Next" buttons.

- Infinite Scrolling: Leveraging Playwright, we simulated scrolling to dynamically load content, showcasing the importance of JavaScript-rendering libraries for such tasks.

- Load More Button: Again using Playwright, we programmatically interacted with the "Load More" button, demonstrating how to trigger content loading until all data is retrieved.

Key Takeaways:

- The type of pagination used on a website determines the tools and techniques required for scraping. Static libraries like requests work well for numbered pagination, while dynamic content demands tools like Playwright or Selenium.

- Properly inspecting and understanding the website's structure using browser DevTools is crucial for designing effective scraping logic.

- Always adhere to a website's robots.txt file and scraping policies to ensure ethical and responsible data collection.
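As a rough illustration of the last point, Python's standard library includes a robots.txt parser. The sketch below feeds it hypothetical rules inline so it runs without network access. The rules, the example.com URLs, and the "my-scraper" user agent are made up for this example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real file would be fetched from the site.
robots_lines = [
    "User-agent: *",
    "Disallow: /private/",
]

parser = RobotFileParser()
parser.parse(robots_lines)

allowed = parser.can_fetch("my-scraper", "https://example.com/catalogue/page-1.html")
blocked = parser.can_fetch("my-scraper", "https://example.com/private/report.html")
print(allowed, blocked)  # True False
```

For a live site, calling `set_url()` with the site's robots.txt address followed by `read()` loads the real rules, and `can_fetch()` can then be checked before each paginated request.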

Understanding these techniques equips you to handle various web scraping challenges. With these skills, you can extract valuable data from websites with complex pagination mechanisms, opening up new possibilities for data-driven projects.