Challenges of Data Collection: Important Things in 2025

“Data is a precious thing and will last longer than the systems themselves.”

Tim Berners-Lee, the inventor of the World Wide Web, said this about data. Today, rapid technological development is changing our world. From chat systems that use machine learning algorithms to mimic human responses to AI-assisted surgery that saves lives, technology is paving the way for us to become a more advanced civilization. To develop new technologies and improve existing ones, you need one essential resource: data. Did you know that Google processes about 200 petabytes of data every day?

Organizations invest substantial resources to acquire valuable data. Information is arguably more valuable than any other resource on Earth, and the current boom in NFTs (Non-Fungible Tokens) is one example of how much value people now place on digital assets. Still, collecting data is not an easy task. There are several ways to acquire data, but each involves challenges. In the next section, we briefly examine what data is and why it matters, and then dive into some of the challenges of data collection.

Feel free to jump to any section to learn more about the challenges of data collection!

What Is Data and Data Collection?

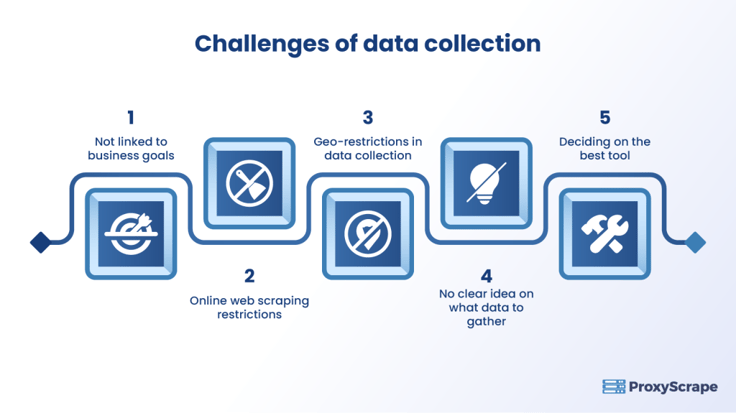

Challenges of Data Collection:

Challenge 1: The Data Collection Process Is Not Linked to Business Goals:

Challenge 2: Online Web Scraping Restrictions:

Challenge 3: Geo-Restrictions in Data Collection:

Challenge 4: No Clear Idea on What Data to Gather:

Challenge 5: Deciding on the Best Tool for Web Scraping:

How Does a Proxy Server Help with Web Scraping?

Which Is the Better Proxy Server for Web Scraping?

What Is Data and Data Collection?

In simple terms, data is a collection of facts (verified or unverified) in an unorganized form. For example, in the stock market, a company's future stock price is predicted from its previous and current stock prices. Those previous and current prices are the “data.” Organizing that data (for example, the stock prices for a specific quarter) produces “information.”

So, to recap: data is a collection of raw facts, and information is data that has been organized.
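Here is a minimal Python sketch that makes the distinction concrete. It uses a handful of made-up daily closing prices. The unordered list of (date, price) pairs is the raw data. Grouping those pairs into per-quarter averages turns them into information. The prices and the `summarize_by_quarter` helper are hypothetical and purely illustrative.

```python
from collections import defaultdict
from datetime import date
from statistics import mean

# Hypothetical raw "data": unorganized (date, closing price) observations.
raw_prices = [
    (date(2024, 2, 14), 101.5),
    (date(2024, 1, 3), 100.0),
    (date(2024, 4, 10), 110.0),
    (date(2024, 3, 28), 104.5),
    (date(2024, 5, 22), 112.0),
]


def quarter_of(day: date) -> str:
    """Label a date with its calendar quarter, e.g. '2024-Q1'."""
    return f"{day.year}-Q{(day.month - 1) // 3 + 1}"


def summarize_by_quarter(prices):
    """Organize raw observations into per-quarter average prices ("information")."""
    buckets = defaultdict(list)
    for day, price in prices:
        buckets[quarter_of(day)].append(price)
    return {q: round(mean(values), 2) for q, values in sorted(buckets.items())}


print(summarize_by_quarter(raw_prices))  # {'2024-Q1': 102.0, '2024-Q2': 111.0}
```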

Data collection is the process of gathering data from various sources, both online and offline, although today it is mainly carried out online. Its primary objective is to provide enough information to support business decisions, research, and other internal purposes that directly or indirectly make people's lives better. The most popular way of collecting data online is “web scraping.”

In most businesses, data collection happens on multiple levels. For example, data engineers use data from their data lakes (the company's own centralized repositories of raw data) and sometimes gather data from other sources through web scraping. IT departments may collect data about clients, customers, sales, profits, and other business factors. The HR department may survey employees about their work or about the current situation inside and outside the company.

Now, let us see the challenges involved in collecting data online.

Challenges of Data Collection:

Many organizations struggle to obtain high-quality, structured data online, and they also need that data to be consistent. Companies such as Meta, Google, and Amazon have silos holding petabytes of data. What about small companies or startups? Their main way to obtain data beyond their own repositories is online web scraping. Efficient web scraping requires ironclad data collection practices, and the first step is understanding the barriers to efficient, consistent data collection.

Challenge 1: The Data Collection Process Is Not Linked to Business Goals:

A business focused mainly on timely delivery is likely to end up with lower-quality, inconsistent data. Such businesses tend to overlook administrative data, which can be collected as a by-product of their everyday operations.

For example, you can complete some tasks with nothing more than a client's or employee's email address, without knowing anything else about that person. If you focus only on the task at hand, you end up with a narrow range of data that serves a single purpose. Instead, widen the horizon and consider how else the data could be used. Businesses should treat data collection as a core process and look for data with more than one use, such as research and monitoring.

Challenge 2: Online Web Scraping Restrictions:

Web scraping is the process of extracting data from online sources such as blogs, eCommerce websites, and even video streaming platforms. People scrape for purposes such as SEO monitoring and competitor analysis. Although web scraping is generally considered legal, it remains a grey area. Scraping large volumes of data can overload the source and slow down its web pages, and scraped data can also be used for unethical purposes. Some documents, such as a site's robots.txt file and terms of service, provide guidelines on how to scrape. These guidelines vary by business and website, so there is no single, definitive way to know how, when, and what you may scrape from a given website.
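One such guideline document is a site's robots.txt file. Python's standard-library `urllib.robotparser` can check two things before you scrape: whether a path may be fetched, and whether the site requests a crawl delay. The sketch below is minimal. The robots.txt content, site URL, and user-agent string are hypothetical. In practice you would load the real file from the target site.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt. In practice you would download the real file from
# https://<site>/robots.txt (e.g. with RobotFileParser.set_url() and read()).
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""


def build_robots_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been fetched."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    return robots


robots = build_robots_parser(SAMPLE_ROBOTS_TXT)
agent = "example-scraper"  # hypothetical user-agent string

print(robots.can_fetch(agent, "https://shop.example.com/products/42"))   # True
print(robots.can_fetch(agent, "https://shop.example.com/checkout/cart"))  # False
print(robots.crawl_delay(agent))  # 5 (seconds the site asks you to wait between requests)
```

You can honor `crawl_delay()` between requests (for example with `time.sleep`) to avoid slowing down the source. Keep in mind that robots.txt is only one input. A site's terms of service may impose further limits.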

Challenge 3: Geo-Restrictions in Data Collection:

As a business, your priority is to turn overseas audiences into customers. To do that, you need strong visibility worldwide, but some governments and businesses restrict data collection for security reasons. There are ways around these restrictions. Even so, overseas data can be inconsistent and irrelevant, and it is more tedious to gather than local data. To collect data efficiently, you must know where you want to scrape it from, which is hard given the sheer volume of data online (Google alone processes petabytes of data every day). Without an efficient tool, you could spend a lot of money gathering data that may or may not be relevant to your business.

Challenge 4: No Clear Idea on What Data to Gather:

Imagine you are responsible for collecting data about the passengers of the Titanic. You start by recording details such as each passenger's age and where they came from. Once the data is collected, you are instructed to inform the families of both the survivors and the deceased. However, you collected everything except the names of those who died, and you now have no other way to reach their families. In this scenario, leaving out something as essential as names seems impossible. In real-world data collection, such omissions do happen.

Many factors are involved in collecting data online. You must clearly understand what type of data you are collecting and which of it your business actually needs.
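One way to make a collection plan explicit is to list the fields every record must contain. You then check each record against that list as it arrives. The sketch below assumes a hypothetical set of passenger fields for the Titanic example. Because `name` is marked as required, the missing names are caught during collection, not later when the families need to be contacted.

```python
# Hypothetical collection plan: fields every record must contain before it is
# considered usable. Names are required so that families can be contacted.
REQUIRED_FIELDS = ("name", "age", "port_of_embarkation", "survived")


def missing_fields(record: dict, required=REQUIRED_FIELDS) -> list:
    """Return the required fields that are absent or empty in a record."""
    return [f for f in required if record.get(f) in (None, "")]


# Hypothetical records; the second one is missing a name.
records = [
    {"name": "A. Passenger", "age": 34, "port_of_embarkation": "Southampton", "survived": True},
    {"name": "", "age": 27, "port_of_embarkation": "Cherbourg", "survived": False},
]

for i, rec in enumerate(records):
    gaps = missing_fields(rec)
    if gaps:
        print(f"record {i} is incomplete: missing {gaps}")
# record 1 is incomplete: missing ['name']
```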

Challenge 5: Deciding on the Best Tool for Web Scraping:

As mentioned above, web scraping is an efficient way to collect data online. However, many web scraping tools are available, and you can also write your own script in a programming language such as Python. That makes it difficult to decide which option best fits your requirements. Remember that the tool you choose must also be able to process secondary data, meaning it should integrate with your business's core processes.

With this requirement in mind, the best choice is usually an online tool. Yes, you can customize a script of your own to your exact needs. But today's web scraping tools also offer many options for customizing what you scrape, so you collect only the data you need. This saves a lot of time and internet bandwidth.
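If you decide to write your own script, Python's standard library is enough for a basic scraper. This minimal sketch parses HTML with `html.parser.HTMLParser` and collects product titles. The sample page, the `h2` tag, and the `product-title` class are all hypothetical. You would need to match them to the markup of the site you are scraping.

```python
from html.parser import HTMLParser


class ProductTitleParser(HTMLParser):
    """Collect the text of every <h2 class="product-title"> element.

    The tag and class name are hypothetical; adjust them to the target page.
    """

    def __init__(self):
        super().__init__()
        self.titles = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the opening tag.
        if tag == "h2" and ("class", "product-title") in attrs:
            self._in_title = True
            self.titles.append("")

    def handle_data(self, data):
        if self._in_title:
            self.titles[-1] += data

    def handle_endtag(self, tag):
        if tag == "h2" and self._in_title:
            self.titles[-1] = self.titles[-1].strip()
            self._in_title = False


SAMPLE_HTML = """
<html><body>
  <h2 class="product-title">Blue Mug</h2>
  <h2 class="banner">Summer Sale</h2>
  <h2 class="product-title">Red Kettle</h2>
</body></html>
"""

title_parser = ProductTitleParser()
title_parser.feed(SAMPLE_HTML)
print(title_parser.titles)  # ['Blue Mug', 'Red Kettle']
```

To scrape a live page, you would first download the HTML (for example with `urllib.request.urlopen`) and pass the decoded text to `feed()`. Dedicated scraping tools build conveniences such as retries and richer selectors on top of this basic idea.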

As you can see, there are many obstacles to collecting data online. Two of the main concerns are how to scrape data effectively and which tool to use for web scraping.

To scrape data online effectively and without issues, the best solution is to combine a proxy server with an online web scraping tool.

Proxy Server – What Is It?

A proxy server is an intermediary server that sits between you (the client) and the website you are accessing (the target server). Your requests do not go directly to the target server. They go to the proxy server first, which then forwards them to the target server. Rerouting traffic this way masks your IP address and can make you anonymous online. You can use proxies for many online tasks where the target server could easily block your IP address. Examples include accessing geo-restricted content and streaming websites, web scraping, and other demanding tasks.
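In code, routing requests through a proxy usually means configuring the HTTP client with the proxy's address. Here is a minimal sketch using Python's built-in `urllib.request.ProxyHandler`. The proxy address is a hypothetical placeholder from a documentation IP range. Substitute the host and port your provider gives you.

```python
import urllib.request

# Hypothetical proxy address (documentation IP range); use your provider's host:port.
PROXY_URL = "http://203.0.113.10:8080"


def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends HTTP and HTTPS requests through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


opener = build_proxied_opener(PROXY_URL)
# Calling opener.open("https://example.com", timeout=10) would now reach
# example.com via the proxy, so the target server sees the proxy's IP address.
```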

How Does a Proxy Server Help with Web Scraping?

Web scraping is a bandwidth-intensive task that usually takes a long time, depending on how much data you are scraping. Without a proxy, your original IP address is visible to the target server. The goal of web scraping is to collect as much data as possible within a fixed number of requests. When you start scraping, your tool sends requests to the target server. If the tool sends an unnaturally high number of requests in a short time, the target server may flag you as a bot and reject your requests. It may ultimately block your IP address.
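Why does a burst of requests get noticed? Here is a simplified, hypothetical model of the kind of rate check a target server might apply. It is a sliding-window counter that rejects an IP address once that address exceeds a set number of requests within a time window. The thresholds and IP address are made up. Real bot detection typically combines many more signals than request rate.

```python
from collections import deque


class SlidingWindowLimiter:
    """Reject a client that sends more than max_requests within window_seconds.

    A simplified, hypothetical model of server-side bot detection.
    """

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._timestamps = {}  # client IP -> deque of accepted request times

    def allow(self, client_ip: str, now: float) -> bool:
        times = self._timestamps.setdefault(client_ip, deque())
        # Forget requests that have fallen out of the window.
        while times and now - times[0] >= self.window_seconds:
            times.popleft()
        if len(times) >= self.max_requests:
            return False  # too many recent requests from this IP
        times.append(now)
        return True


limiter = SlidingWindowLimiter(max_requests=3, window_seconds=1.0)
results = [limiter.allow("198.51.100.7", t) for t in (0.0, 0.1, 0.2, 0.3, 1.5)]
print(results)  # [True, True, True, False, True]
```

The limit in this model is tracked per IP address. Requests spread across several proxy IP addresses are therefore counted separately, which is why rotating proxies help.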

When you use a proxy server, your real IP address is masked, which makes it harder for the target server to trace the requests back to you. Rotating proxy servers also let you spread many requests across different IP addresses, which can help you collect more data in a short amount of time.
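Rotation can be as simple as cycling through a pool of proxy addresses so that consecutive requests leave from different IP addresses. This minimal round-robin sketch uses `itertools.cycle`. The proxy addresses and URLs are hypothetical. A rotating proxy service may also handle rotation for you on the provider's side.

```python
from itertools import cycle

# Hypothetical proxy pool (documentation IP range).
PROXY_POOL = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def assign_proxies(urls, proxies):
    """Pair each URL with the next proxy in round-robin order."""
    rotation = cycle(proxies)
    return [(url, next(rotation)) for url in urls]


urls = [f"https://shop.example.com/products/{n}" for n in range(1, 5)]
for url, proxy in assign_proxies(urls, PROXY_POOL):
    print(proxy, "->", url)
```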

Which Is the Better Proxy Server for Web Scraping?

ProxyScrape is one of the most popular and reliable proxy providers online. It offers three proxy services: dedicated datacenter proxies, residential proxies, and premium proxies. So, which one is best for overcoming the challenges of data collection? Before answering that question, let us look at the features of each.

A dedicated datacenter proxy is best suited to high-speed online tasks, such as transferring large amounts of data from various servers for analysis. This is one of the main reasons organizations choose dedicated proxies for moving large volumes of data in a short time.

A dedicated datacenter proxy offers several features:

- unlimited bandwidth and concurrent connections
- dedicated HTTP proxies for easy communication
- IP authentication for added security

With 99.9% uptime, you can rest assured that the dedicated datacenter proxy will work throughout any session. Last but not least, ProxyScrape provides excellent customer service and will help you resolve your issue within 24-48 business hours.

Next is the residential proxy, the go-to proxy for general consumers. Its main advantage is that its IP address resembles one assigned by an ordinary ISP. As a result, the target server is more likely to grant access to its data.

Another feature of ProxyScrape's residential proxy is rotation. A rotating proxy dynamically changes your IP address. This makes it difficult for the target server to tell whether you are using a proxy, which helps you avoid a permanent ban.

Apart from that, the residential proxy offers the following features:

- unlimited bandwidth along with concurrent connections
- dedicated HTTP/S proxies
- proxies available for any session, thanks to a pool of more than 7 million proxies
- username and password authentication for added security
- the ability to change the country server

You can select your desired country by appending its country code to the username used for authentication.
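Username and password authentication is commonly expressed in the proxy URL itself as `http://username:password@host:port`. The sketch below builds such a URL and can optionally append a country code to the username. The `-country-xx` suffix, host, port, and credentials are hypothetical placeholders chosen only to illustrate the idea. Check ProxyScrape's documentation for the exact format it expects.

```python
from urllib.parse import quote


def residential_proxy_url(host: str, port: int, username: str, password: str,
                          country_code: str = "") -> str:
    """Build an authenticated proxy URL of the form http://user:pass@host:port.

    The "-country-xx" username suffix is a HYPOTHETICAL format used only to
    illustrate appending a country code; real providers define their own syntax.
    """
    user = username
    if country_code:
        user = f"{username}-country-{country_code.lower()}"
    # Percent-encode credentials so characters like "@" or ":" don't break the URL.
    return f"http://{quote(user, safe='')}:{quote(password, safe='')}@{host}:{port}"


print(residential_proxy_url("proxy.example.net", 6060, "alice", "s3cret", "US"))
# http://alice-country-us:s3cret@proxy.example.net:6060
```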

The last option is the premium proxy. Premium proxies work the same way as dedicated datacenter proxies. The main difference is accessibility: with premium proxies, the proxy list is shared among all users on ProxyScrape's network. That is why premium proxies cost less than dedicated datacenter proxies.

So, which is the best proxy server for overcoming the challenges of data collection? The answer is the residential proxy.

The reason is simple. As mentioned above, the residential proxy is a rotating proxy, meaning your IP address changes dynamically over time. This lets you send many requests within a short time frame without getting your IP address blocked. You can also switch the proxy server's country: just append the country's ISO code to your IP authentication or username and password authentication.

FAQs:

1. What are all the challenges involved in data collection?

2. What is web scraping?

3. What is the best proxy for web scraping?

Conclusion:

There are challenges in collecting data online, but we can use them as stepping stones toward more sophisticated data collection practices. A proxy is a great companion on that journey. It helps you take a strong first step toward better online data collection, and ProxyScrape provides an excellent residential proxy service for web scraping. We hope this article has given you insight into the challenges of data collection and how proxies can help you overcome them.