Data Parsing – 3 Key Benefits and Use Cases

Data Parsing technologies are responsible for converting data into a particular data format that supports Data Analysis. Statista predicts Big Data Analytics revenue to amount to 274 billion U.S. dollars by 2022. As Big Data is the major contributor to Data Science, raw data are a huge source for Data Analytics. But this unstructured data

Data Parsing technologies are responsible for converting data into a particular data format that supports Data Analysis. Statista predicts Big Data Analytics revenue to amount to 274 billion U.S. dollars by 2022. As Big Data is the major contributor to Data Science, raw data are a huge source for Data Analytics. But this unstructured data is of no use till they are parsed into a more readable format. This is when Data Parsing comes into the picture. People rely on Data Parsing techniques to comprehend unstructured datasets. This article will elaborate you the data parsing functionalities.

Table of Contents

- What Is Data Parsing?

- Benefits of Data Parsing?

- Types of Data Parsing

- How Does a Data Parser Work?

- Ways of Data Parsing

- Data Parsing Use Cases

- Challenges in Data Parsing

- Proxies in Data Parsing

- Why Choose Proxyscrape Proxies

- Frequently Asked Questions

- Closing Thoughts

What Is Data Parsing?

The Data Parsing process converts data from one data format into other file formats. The extracted data may contain unstructured data, like raw HTML code or other unreadable data. Data parsers convert this raw data into a machine-readable format that simplifies the analysis process.

Scrapers extract data in various formats, which are not easily readable. These unreadable data may be an XML file, HTML document, HTML string, or other unruly formats. The data parsing technique reads the HTML file formats and extracts relevant information from them, that is capable of subjecting to an analysis process.

Benefits of Data Parsing?

People usually refer to data parsing as a key technique to enhance the scraped data. Huge loads of scraped data require a proper data structuring process to extract relevant information from them. Rather than generalizing the data parsing uses as scraping, let us explore them in detail.

Easy to Transform

Data Parsing supports users to transfer loads of data from the main server to client applications or from a source to a destination. As it takes time to transport complex and unstructured data, people prefer converting them into interchangeable data formats, like JavaScript Object Notation (JSON). As JSON is a lightweight data format, that suits data transmission. Data parsing technologies convert raw data into JSON format. Go through this blog to know how to read and parse JSON with Python.

Example – In investment analysis, data scientists will collect customer data from finance and accounting banks to compare and choose the right place to make investments. Here the “customer’s credit history” is depicted in a chart. Instead of sending the chart, string, and images as it is, it is better to convert them to JSON objects so that they are lightweight and consume less memory.

Simplifies Analytics Process

Usually, the data extraction process collects bulk data from various sources and formats. Data analysts can find it hard to handle such unstructured complex data. In this case, the data parsing process converts data into a particular format that is suitable for analytical purposes.

Example The financial data collected from banks or other sources may have some null values or missing values, which may affect the quality of the analysis process. Using the data parsing technique, the users convert the null values by mapping them with suitable values of other databases.

Business Flow Optimization

The data parsing technique can simplify business workflows. Data scientists do not have to bother about the quality of the data, as they are already handled by the data parsing technology. The converted data can directly contribute to deriving business insights.

Example – Consider a Data Analytic solution is analyzing credit reports of the customers to find the suitable business techniques that worked. In this case, converting the credit scores, account type, and duration into a system-friendly format helps them to easily figure out when and where their plans worked. This analysis simplifies the process of developing a workflow to enhance the business.

Types of Data Parsing

Parsers can work on data based on two various methods. Parsing data through parse trees functions either in a top-down approach or a bottom-up approach model. The top-down approach starts from the top elements of the tree and travels down. This method focuses on the larger elements first and then moves toward the smaller ones. The bottom-up approach starts from the most minute pieces, then travels to the larger elements.

Grammar-Driven Data Parsing – Here the parser converts unstructured data into a particular structured format with grammar rules.

Data-Driven Data Parsing – In this type, the parser converts data based on Natural Language Processing (NLP) models, rule-based methods, and semantic equations.

How Does a Data Parser Work?

Data Parser primarily focuses on extracting meaningful and relevant information from a set of unstructured data. The data parser takes complete control of the input of the unruly data and structures them into the correct information with user-defined rules or relevancy factors.

A web scraper who extracts a large set of data brings it from various web pages. This might include the whitespaces, break tags, and data in HTML format as it is. To convert this data into an easily understandable format, a web scraper has to undergo parsing techniques.

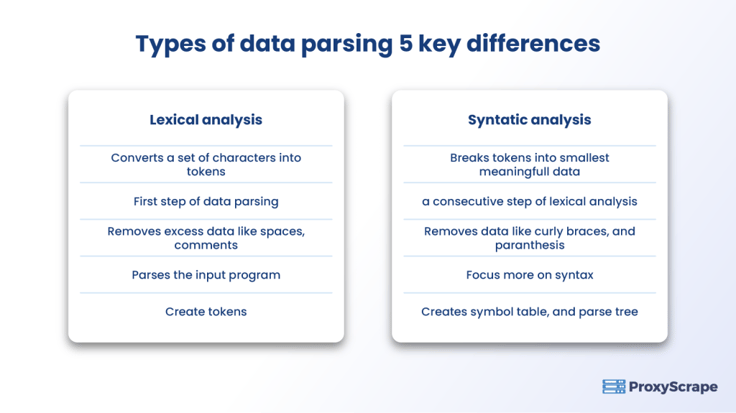

A well-built parser analyzes and parses the input strings to check the formal grammar rules. This parsing process involves two major steps called syntactic analysis and lexical analysis.

| Lexical Analysis | Syntactic Analysis |

|---|---|

| Converts a set of characters into tokens. | Breaks down the tokens into the smallest meaningful data. |

| Is the first step of Data Parsing. | Is a consecutive step of Lexical Analysis. |

| Removes excess data, like white space or comments. | Removes excessive information, like curly braces and parentheses. |

| Parses the input program. | Focuses more on syntax. |

| Creates tokens. | Updates symbol table and creates parse trees. |

Lexical Analysis

The parser creates tokens from the input string data. Tokens are the smaller units of meaningful data. The parser eliminates unnecessary data, like whitespace and comments, from a set of input characters and creates tokens with the smallest and lexical units. Usually, the parser receives data in an HTML document format. Taking this input, the parser looks for the keywords, identifiers, and separators. It removes all the irrelevant information from the HTML code and makes tokens with relevant data.

Example: In an HTML code, the parser starts analyzing from the HTML tag. Then, they route to the head and body tag and further find the keywords and identifiers. The parser creates tokes with lexical keywords by eliminating the comments, space, and tags, like <b> or <p>.

Syntactic Analysis

This step takes the tokens of the Lexical analysis process as input and further parses data. These tokens are put into the syntax analysis, where the parser focuses more on syntax. This step checks for irrelevant data from the tokens, like parenthesis and curly braces to create a parse tree from the expression. This parse tree includes the terms and operators.

Example: Consider a mathematical expression (4*2) + (8+3)-1. Now, this step will split the data according to the syntax flow. Here, the parser considers (4*2), (8+3), and – 1 as three terms of an expression and builds a parse tree. At the end of this syntactic analysis, the parser extracts the semantic analysis components with the most relevant and meaningful data.

Data Parsing – Parse Tree

Ways of Data Parsing

To make use of data parsing technologies, you can either build your own data parser or depend on a third-party data parser. Creating your own data parser is the cheapest choice, as you do not have to spend money on hiring someone. But, the major challenge of using a self-made tool is that you should have programming knowledge or should have a technical programming team for building your own parser.

It is better to get a quality parsing solution that can build your parser per your requirement. This saves the time and effort you put into creating one on your own, but it costs you more. Go through many parsing solutions and find the suitable one that provides quality service at a reasonable cost.

Data Parsing Use Cases

Data users implement data parsing techniques with multiple technologies. Data parsing plays a vital role in many applications, like web development, data analysis, data communications, game development, social media management, web scraping, and database management. Data Parsing can be incorporated with many technologies to improve their quality.

- Data Parsing is used with HTML and other scripting languages to build web apps, game apps, and mobile apps.

- Data parsing techniques are also used along with HTTP and other communication protocols to enhance data communication.

- This technique is also compatible with SQL queries that can help users in the database management systems.

- This process is used with interactive data language to simplify the data analysis process.

- Data parsing also works with modeling languages and parses the NLP data like voice, or emotions to improve the sentiment analysis process.

- Data parsing goes well with most computer and programming languages and promotes the analysis process of multiple domains, like finance and real estate, as well as shipping and logistics businesses.

Challenges in Data Parsing

Out of all the benefits of data parsing, one major challenge is handling dynamic data. As parsing is applied with the scraping and analysis process, they are supposed to handle dynamic changing values. For example, a social media management system has to deal with the likes, comments, and views that keep on changing every single minute.

In this case, the developers have to update and repeat the parser functionalities frequently. This might take some time and so analysts may get stuck with old values. To implement these changes in the parse, people can use proxies that will increase the scraping process and help the parser to adopt the changes quickly. With high-bandwidth proxies of ProxyScrape, the users can repeatedly extract data from the sites to parse and keep them updated.

Proxies in Data Parsing

Proxies can help people to overcome certain challenges. Proxies, with their high-bandwidth, anonymity, and scraping abilty features will simplify the scraping process and help the parser to adopt the changes quickly.

Why Choose Proxyscrape Proxies

Proxyscrape is a popular proxy-providing solution that helps to scrape unlimited data. Here are some of the unique features of their proxies that help them with data parsing.

- High Bandwidth – High-bandwidth proxies speed up the data collection and data transformation process and make it easier to handle dynamic data from multiple sites.

- Uptime – Their 100% uptime ensures the data parsing system functions 24/7.

- Multiple Types – Proxyscrape provides all types of proxies like shared proxies, and private proxies. Shared proxies include data center proxies, residential proxies, and dedicated proxies, while private proxies refer to dedicated proxies. They also offer proxy pools from which scrapers can use different IP addresses for each request.

- Global Proxy – We offer proxies from more than 120 countries. There are also proxies for different protocols, like HTTP proxies and Socks proxies.

- Cost-Efficient – Here, the premium proxies are of reasonable costs and have high bandwidth. Check out our attractive prices and huge proxy options.

Frequently Asked Questions

FAQs:

1. What is Data Parsing?

2. What are the types of Data Parsing?

3. How does Data Parsing help in business analysis?

Closing Thoughts

Data Parsing is becoming a necessary process implemented in all applications. You can use the parsing technique on unruly scraped data to structure them into more readable formats. If you are about to handle statistical data, this can have an impact on the sample face and probability. It is better to undergo the data-driven data parsing method because the data-driven parsing process can effectively handle the impacts of probabilistic models. You can also choose grammar driven data parsing technique to check and parse data with grammar rules. Go through the pricing range of Proxyscrape’s proxies that can enhance the parsing quality and efficiency