News Scraping – 5 Use Cases and Benefits

News scraping solutions give business people access to highly authentic data. According to industry statistics, the online newspaper industry generated revenue of 5.33 billion U.S. dollars in 2020. News websites are a source of recent and authentic data, and of all the possible data sources, news articles can contribute some of the highest-quality data to the analysis process. This write-up will guide you through scraping data from news articles and explore how that data can be used.

Table of Contents

- What Is Web Scraping?

- What Is News Scraping?

- Benefits of News Scraping

- Use Cases of News Scraping

- How to Scrape News Articles?

- News Scraping with Python

- Challenges of News Scraping

- Proxies in News Scraping

- Why Choose Proxyscrape for News Scraping?

- Frequently Asked Questions

- Closing Thoughts

What Is Web Scraping?

Web scraping is the process of extracting large amounts of data from multiple sources and using it to derive valuable insights. The technique can collect entire web pages, including the underlying HTML content, which makes it possible to replicate specific page elements in other targets.

Social media, online transactions, customer reviews, business websites, and machine-generated data are the most popular sources that contribute to data science. Web scraping solutions therefore have to extract data in multiple formats, such as text, images, binary values, magnetic codes, and sensor data.

What Is News Scraping?

News scraping is an application of web scraping in which scrapers focus on extracting data from news articles. Scraping news websites provides people with data on news headlines, recent releases, and current trends.

Of all the data sources available online, established news websites are among the most trustworthy. Their articles go through editorial checks, so they are less likely to carry fake news. Scraping news web pages gives you access to the latest trends as well as historical records, which benefits analytics to a great extent.

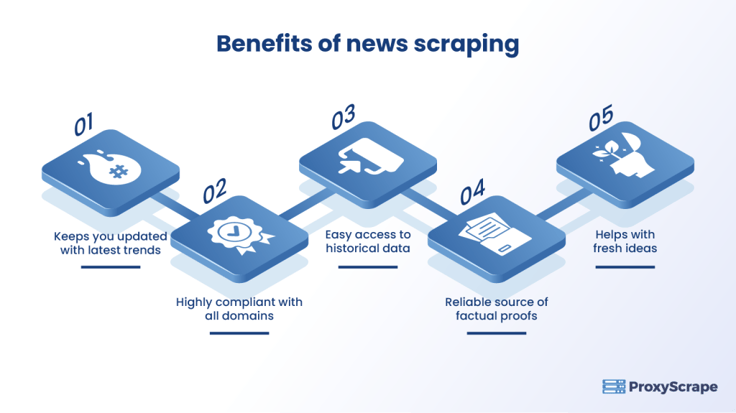

Benefits of News Scraping

News scraping is becoming a significant technique for gaining insight, and marketing professionals find it helpful in many cases.

Keeps You Updated With Latest Trends

News websites are usually the first to report the latest market trends, which makes them the right choice for scrapers who need to stay up to date. An automated news scraping solution enriches the data analysis process with timely, high-quality data.

Covers Almost All Domains

News websites cover almost every possible domain. They gather information from all over the world and publish articles on many topics, so scrapers can access information on many fields from a single site. News is also no longer limited to print; it is available on digital devices and applications as well.

Easy Access to Historical Data

One necessary element of data analysis is data from previous efforts. Analysts study the techniques used in earlier tasks, along with their success and failure rates, to work out a worthwhile strategy. News archives make this kind of historical data easy to reach, and analyzing it can provide valuable input for future business insight.

Reliable Source of Factual Proofs

Fake news is increasingly spread to gain popularity, and verifying the authenticity of data is a complex process. This is why analysts mostly rely on news websites that publish verified articles.

Helps With Fresh Ideas

Quality news articles can spark fresh ideas for building a business. Business people can design their marketing strategies around recent product launches and upcoming trends.

Use Cases of News Scraping

News scraping supports multiple applications that can help an organization grow its position in the business market.

Reputation Feedback

Organizations can keep track of news about their own companies. News articles may include audience reviews or surveys that show how people view a company. This kind of reputation monitoring helps analysts see whether their plans are working or need changes.

Risk Analysis

From news articles, people can gauge market demand as well as spot what will not work. This helps companies shift their focus away from outdated products and toward current trends.

Competitor Analysis

Pulling data on your competitors can give you an overview of their operations and strategies. Analyzing your competitors' hit and flop rates is as important as analyzing your own, and collecting data from surveys in your niche can give you an edge over them.
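A minimal sketch of one way to quantify competitor coverage: count how many scraped headlines mention each company. The company names and headlines below are made up, and `count_mentions` is a hypothetical helper; a real pipeline would feed in headlines extracted by the scraper.

```python
import re
from collections import Counter


def count_mentions(headlines, companies):
    """Count how many headlines mention each company (case-insensitive, whole words)."""
    counts = Counter({name: 0 for name in companies})  # keep zero counts visible
    for headline in headlines:
        for name in companies:
            # \b word boundaries stop "Acme" from matching inside "Acmeville"
            if re.search(rf"\b{re.escape(name)}\b", headline, flags=re.IGNORECASE):
                counts[name] += 1
    return counts


# Hypothetical headlines and company names, for illustration only
headlines = [
    "Acme launches budget smartphone",
    "Globex recalls faulty chargers",
    "Acme and Globex compete for retail space",
]
print(count_mentions(headlines, ["Acme", "Globex", "Initech"]))
```

Counting headlines rather than raw occurrences keeps one article that repeats a name many times from dominating the comparison.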

Weather Forecasts

Businesses also depend on external factors, such as geographical location or climate. Business analysts can scrape weather forecasts from news articles, and this meteorological data can help them decide whether to expand their businesses into other countries.

Sentiment Analysis

News scraping is also used in sentiment analysis. Analysts scrape public reviews from news sites and run sentiment analysis on that data. A simple form of this analysis gauges the public's emotion by matching positive and negative words. This helps business people learn how people react to and feel about their products or services.
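The word-matching approach described above can be sketched as a tiny lexicon-based scorer. The word lists are small illustrative assumptions rather than a real sentiment lexicon, the function names are hypothetical, and this simple scoring ignores negation such as "not good".

```python
import re

# Illustrative word lists only; real projects use a curated sentiment lexicon.
POSITIVE = {"good", "great", "excellent", "love", "reliable", "fast"}
NEGATIVE = {"bad", "poor", "terrible", "hate", "faulty", "slow"}


def sentiment_score(text):
    """Return (number of positive words) minus (number of negative words)."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def label(text):
    """Map the numeric score to a positive / negative / neutral label."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


reviews = [
    "Great battery and fast charging, I love it",
    "Terrible support and a faulty screen",
    "The phone arrived on Tuesday",
]
for review in reviews:
    print(label(review), "-", review)
```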

How to Scrape News Articles?

Business people can scrape data from news articles on their own or get help from a third-party scraping solutions company. Scraping in-house requires a qualified programmer who can build a scraping tool in Python or R. Python's standard library already includes modules for fetching web pages, such as urllib, and third-party libraries simplify the job further. Because scraping at scale involves far more requests than ordinary data extraction, users should also use proxies, which let them collect large amounts of data with far fewer restrictions.

An individual developer may find it hard to handle all of these processes. In that case, people can turn to ready-made scraping solutions, which can scrape news data from multiple sites effectively with the help of proxies.

News Scraping with Python

There are a few prerequisites for scraping news pages with Python. Python libraries can help users simplify the web scraping process.

- Download Python 3 and use a version compatible with the libraries below.

- Use the command prompt (or terminal) to install the libraries with pip.

- Install the Requests library for requesting data.

- Install pandas for data analysis.

- Install Beautiful Soup and lxml for parsing HTML content.

To install all of these, execute the following commands in the command prompt:

pip install requests

pip install pandas

pip install lxml

pip install beautifulsoup4

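Import the libraries before starting. Beautiful Soup is imported from the bs4 package, and lxml does not need its own import because Beautiful Soup loads it when you pass 'lxml' as the parser:

import requests

import pandas as pd

from bs4 import BeautifulSoup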

Getting News Data

The Python Requests module lets users send HTTP requests. Import the module, then call its get() method with the URL of the target page; it returns a response object containing the page data. The example below requests Wikipedia's category page of news websites.

response = requests.get("https://en.wikipedia.org/wiki/Category:News_websites")

Next, check the status code of the response. The status code tells you whether the page downloaded successfully (200 means OK) or returned an error. To learn what each error means, go through the proxy errors page.

Printing the response

The following code prints the status code and then the entire content of the page:

print(response.status_code)

print(response.text)
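Status codes can be turned into readable messages with the standard library's `http.HTTPStatus` enum. The `describe_status` helper below is a hypothetical illustration, and treating 403 and 429 as likely blocks is an assumption about how sites commonly respond to scrapers, not a rule.

```python
from http import HTTPStatus


def describe_status(code):
    """Return a short, human-readable summary of an HTTP status code."""
    try:
        phrase = HTTPStatus(code).phrase  # e.g. 404 -> "Not Found"
    except ValueError:
        phrase = "Unknown status"  # code not defined in HTTPStatus
    if 200 <= code < 300:
        meaning = "page downloaded successfully"
    elif 300 <= code < 400:
        meaning = "redirect"
    elif code in (403, 429):
        meaning = "refused or rate-limited; the site may be blocking the scraper"
    elif 400 <= code < 500:
        meaning = "client error"
    elif 500 <= code < 600:
        meaning = "server error"
    else:
        meaning = "unrecognized"
    return f"{code} {phrase}: {meaning}"


print(describe_status(200))
print(describe_status(429))
```

In the scraping code above, it would be called as `describe_status(response.status_code)`.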

Parsing the String

After downloading and printing the page content, the next step is parsing. The response printed in the previous step is a plain string of HTML. To perform scraping operations on it, you must convert the string into a Python object that represents the page structure.

Python has multiple libraries for parsing the string, such as lxml and Beautiful Soup.

To use Beautiful Soup, create a variable and pass the page text to the BeautifulSoup constructor along with the parser name ('lxml'). The response.text attribute holds the text of the response.

soup_text = BeautifulSoup(response.text, 'lxml')

Extract Particular Content

News scrapers usually look for specific information on a website. Beautiful Soup offers two methods for locating elements in the parsed content:

| Method | Returns |
| --- | --- |
| find() | The first matching element |
| find_all() | A list of all matching elements |

Call find() on the soup_text variable to return the required element from the parsed content. Pass an HTML tag name, such as 'title', as the argument; the get_text() method then returns the title's text.

title = soup_text.find('title')

print(title.get_text())

To scrape other details, you can also match elements by attributes such as class and itemprop.
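The following sketch runs `find()`, `find_all()`, and attribute matching against a small, made-up HTML snippet instead of a live site, so the tag names, class values, and itemprop values are assumptions; inspect a real page's markup to find the right ones. It uses Python's built-in `"html.parser"` so it runs even without lxml installed.

```python
from bs4 import BeautifulSoup

# A made-up news page used only for illustration.
SAMPLE_HTML = """
<html><head><title>Example News</title></head>
<body>
  <article class="story">
    <h2 class="headline">Markets rally after rate decision</h2>
    <time itemprop="datePublished" datetime="2021-06-01">June 1, 2021</time>
  </article>
  <article class="story">
    <h2 class="headline">New phone launch draws crowds</h2>
    <time itemprop="datePublished" datetime="2021-06-02">June 2, 2021</time>
  </article>
</body></html>
"""

soup_text = BeautifulSoup(SAMPLE_HTML, "html.parser")

# find() returns only the first matching element; class_ matches the class attribute.
first_headline = soup_text.find("h2", class_="headline").get_text(strip=True)

# find_all() returns every matching element as a list.
headlines = [h.get_text(strip=True) for h in soup_text.find_all("h2", class_="headline")]

# attrs= matches any attribute by name, such as itemprop.
dates = [t["datetime"] for t in soup_text.find_all("time", attrs={"itemprop": "datePublished"})]

print(first_headline)
print(headlines)
print(dates)
```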

Complete Code:

import requests

from bs4 import BeautifulSoup

response = requests.get("https://en.wikipedia.org/wiki/Category:News_websites")

print(response.status_code)  # 200 means the page downloaded successfully

print(response.text)

soup_text = BeautifulSoup(response.text, 'lxml')  # parse the HTML with lxml

title = soup_text.find('title')

print(title.get_text())
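The prerequisites list pandas for data analysis, but the code above never uses it. One possible next step, sketched here with made-up records standing in for scraped headlines and dates, is to load the results into a DataFrame and summarize them; the column names are assumptions.

```python
import pandas as pd

# Hypothetical records, e.g. headlines and dates extracted with find_all().
records = [
    {"headline": "Markets rally after rate decision", "date": "2021-06-01"},
    {"headline": "New phone launch draws crowds", "date": "2021-06-02"},
    {"headline": "Retailers report strong quarter", "date": "2021-06-02"},
]

df = pd.DataFrame(records)
df["date"] = pd.to_datetime(df["date"])  # convert strings to timestamps

# Number of articles published per day (groupby sorts the dates)
per_day = df.groupby("date").size()
print(per_day)

# to_csv() with no path returns the CSV text instead of writing a file
csv_text = df.to_csv(index=False)
```

Passing a file path to `to_csv()` instead would save the table for later analysis.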

Challenges of News Scraping

Like any scraping technique, this highly beneficial news aggregation method comes with certain challenges. Some of the most common challenges scrapers face are as follows.

Geographical Restrictions

Some sites are geographically restricted and do not let users from other countries extract their data. These geo-blocks can stop scrapers from including global data in their analysis. For example, a prediction system for international stock exchanges requires input from multiple countries; if the developer cannot scrape stock values from other countries, the accuracy of the prediction system suffers.

IP Blocks

When news sites notice IP addresses that repeatedly request data, they may suspect automated scraping and stop those users from accessing news articles. They can block that specific IP address from extracting data from the site.

Low-Speed

Web scraping news articles means repeatedly extracting data from news websites. Hitting a website with back-to-back requests strains its server and can slow down response times, and sites may throttle or block scrapers that do so.
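One common mitigation is to space requests out instead of sending them back to back. The `Throttle` class below is a hypothetical minimal sketch that enforces a fixed minimum interval between requests; the 0.2-second interval and the placeholder URLs are assumptions, and the actual request line is left commented out so the sketch runs offline.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive requests to the same site."""

    def __init__(self, min_interval):
        self.min_interval = min_interval  # seconds between requests
        self._last = None  # time of the previous request

    def wait(self):
        """Sleep just long enough to respect min_interval, then record the time."""
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


throttle = Throttle(min_interval=0.2)
start = time.monotonic()
for url in ["page-1", "page-2", "page-3"]:  # placeholders for real article URLs
    throttle.wait()
    # response = requests.get(url, timeout=10)  # the real request would go here
elapsed = time.monotonic() - start
print(f"3 requests took about {elapsed:.1f}s")
```

A fixed delay is the simplest policy; larger jobs often combine it with longer waits after errors such as 429 responses.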

Proxies in News Scraping

News scraping is possible without proxies, but proxies can simplify the process by resolving the challenges above. Because a proxy sends requests from its own IP address and hides the user's actual identity, proxies can help get around IP blocks, and proxies located in other countries can get around geo-blocks.
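A minimal sketch of how a scraper might use proxies with the Requests library: rotate through a pool so consecutive requests come from different IP addresses (for IP blocks), and keep a separate pool per country (for geo-blocks). The proxy URLs, country keys, and helper names are hypothetical placeholders; the `proxies` parameter of `requests.get` is part of Requests' real API.

```python
import itertools

import requests

# Hypothetical proxy endpoints grouped by country; substitute your provider's addresses.
PROXY_POOLS = {
    "us": [
        "http://user:pass@us1.proxy.example:8080",
        "http://user:pass@us2.proxy.example:8080",
    ],
    "de": ["http://user:pass@de1.proxy.example:8080"],
}

# One round-robin iterator per country
_cycles = {country: itertools.cycle(pool) for country, pool in PROXY_POOLS.items()}


def next_proxies(country):
    """Return a Requests-style proxies mapping for the next proxy in that country's pool."""
    proxy = next(_cycles[country])
    return {"http": proxy, "https": proxy}


def fetch(url, country="us", timeout=10):
    """Fetch url through a rotating proxy located in the given country."""
    return requests.get(url, proxies=next_proxies(country), timeout=timeout)
```

Repeated calls to `fetch(url)` would cycle through the two US proxies, while `fetch(url, country="de")` would route the request through the German one.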

Why Choose Proxyscrape for News Scraping?

Proxyscrape provides proxies of multiple types, protocols, and countries, so users can choose a proxy in a specific country to bypass geo-restrictions. Their residential proxy pool contains millions of high-bandwidth proxies, so users do not have to compromise on scraping speed. Dedicated proxies give each user a unique IP address, so web servers and ISPs cannot easily track the users' identity. Shared proxies, such as data center and residential proxies, offer pools of different proxy types, so blocked sites can be reached through multiple proxies.

High Bandwidth – The high bandwidth of these proxies makes it easier for scrapers to collect multidimensional data from varied sources.

Uptime – Their 100% uptime ensures uninterrupted scraping, which helps users keep up with the most recent data.

Multiple Types – Proxyscrape provides proxies of multiple types. They furnish shared data center proxies, shared residential proxies, and dedicated proxies. Their residential IP pools let users use a different IP address for each request, and their private proxies let people own one unique proxy for themselves. There are also proxies for different protocols, such as HTTP proxies and SOCKS proxies.

Global Proxy – Proxyscrape provides proxies in multiple countries, so users can use proxies in their desired location to scrape news from that location.

Cost-Efficient – They offer quality premium proxies at affordable prices. Check out our pricing and wide range of proxy options.

Frequently Asked Questions

1. What is news scraping?

2. Is news scraping legal?

3. What are some Python libraries for news scraping?

4. How can proxies support news scraping?

5. Which type of proxy is best suited for news scraping?

Closing Thoughts

Scraping news websites is a form of web scraping in which scrapers focus on news articles to collect valuable and authentic news data. You can use a Python library, such as Requests, to send HTTP requests to the server. However, at scale these requests may fall short in scraping speed and quality, for example when sites block or throttle them. In this case, you can use anonymous proxies to access multiple locations and collect a vast amount of data at high speed.