According to Statista, in 2021, retail eCommerce sales amounted to 4.9 trillion US dollars worldwide. That is a lot of money, and it is predicted that by 2025, it will reach the 7 trillion US dollars mark. As you can guess, with this great revenue potential in e-commerce, the competition is bound to be aggressive.

Therefore, it is necessary to adapt to the latest trends in order to survive and thrive in this ultra-competitive market. If you are a market player, the first step in this direction is to analyze your competitors. One key component of this analysis is price: comparing the prices of products across competitors will help you quote the most competitive price in the market.

Also, if you are an end user, comparing prices lets you discover the lowest price for any product. The real challenge is that there are many e-commerce websites, and it is impractical to visit each one manually and check every product’s price. This is where code comes into play. With the help of Python, we can extract this information from websites automatically, which makes scraping prices far less tedious.

This article discusses how to scrape prices from an eCommerce website, using Python as an example.

Is Web Scraping Legal?

Before we deal with scraping prices from websites, we must discuss the definition and legal factors behind web scraping.

Web scraping, also known as web data extraction, uses bots to crawl through a target website and collect necessary data. When you hear the term “web scraping,” the first question that may come to mind is whether web scraping is legal or not.

The answer depends on another question: “What will you do with the scraped data?” Collecting publicly displayed data for personal analysis is generally considered acceptable, since the information is shown for public consumption. However, if the way you collect or use the data harms the original owner of the data, it may be unlawful. In 2019, a US federal court ruled that web scraping does not violate hacking laws.

In short, it is always better to practice extracting data from websites that do not affect the original owner of the data. Another thing to keep in mind is to scrape only what you need. Scraping tons of data from the website will likely affect the bandwidth or performance of the website. It is important to keep an eye on that factor.
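One simple way to limit the load on a website is to pause between requests. The following is a minimal sketch, not a definitive implementation: the `fetch_politely` helper, the `fetch` callable, and the one-second default delay are assumptions made for illustration.

```python
import time


def fetch_politely(urls, fetch, delay_seconds=1.0):
    """Call fetch(url) for each URL, pausing between consecutive requests."""
    pages = []
    for index, url in enumerate(urls):
        if index > 0:
            # Wait before every request except the first one,
            # so the server is not hit with a burst of traffic.
            time.sleep(delay_seconds)
        pages.append(fetch(url))
    return pages
```

In a real scraper, `fetch` could be a small function that wraps `requests.get`. A suitable delay depends on the website, so treat the default value as a starting point only.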

If you are not clear on how to check if the website allows web scraping or not, there are ways to do that:

- Check the robots.txt file – This plain-text file tells crawlers which parts of a website they may and may not access. It helps you identify what to scrape and what not to scrape. Different websites set different rules in their robots.txt files, so check the file before you start scraping.

- Sitemap files – A sitemap file contains information about the pages, videos, and other files on a website. Search engines read this file to crawl the site more efficiently.

- Size of the website – As mentioned above, crawling large amounts of data affects both the website’s performance and the scraper’s efficiency, so keep an eye on the size of the website. NOTE: Here, the website’s size refers to the number of pages available.

- Check the Terms and Conditions – It is always a good idea to check the Terms and Conditions of the website that you want to crawl. They will likely have a section on web scraping, which may state how much data you can scrape and mention the technology the website uses.
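The robots.txt check can also be done in code. The sketch below uses Python's standard `urllib.robotparser` module. The `can_scrape` helper, the sample rules, the `price-bot` user agent, and the example.com URLs are all made up for illustration.

```python
from urllib.robotparser import RobotFileParser


def can_scrape(robots_txt, user_agent, url):
    """Return True if the given robots.txt text allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt content, used here instead of a live download.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /checkout/
Allow: /
"""

print(can_scrape(SAMPLE_ROBOTS, "price-bot", "https://example.com/laptops"))
print(can_scrape(SAMPLE_ROBOTS, "price-bot", "https://example.com/checkout/cart"))
```

With these sample rules, the first check prints `True` and the second prints `False`. To check a live website instead, you can call `set_url()` with the address of its robots.txt file, then `read()`, before calling `can_fetch()`.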

How to Scrape Prices from Websites Using Python?

By now, you should have a basic understanding of web scraping and the legal factors behind it. Let us see how to build a simple web scraper that finds the prices of laptops on an eCommerce website. The scraper is written in Python and run in a Jupyter notebook.

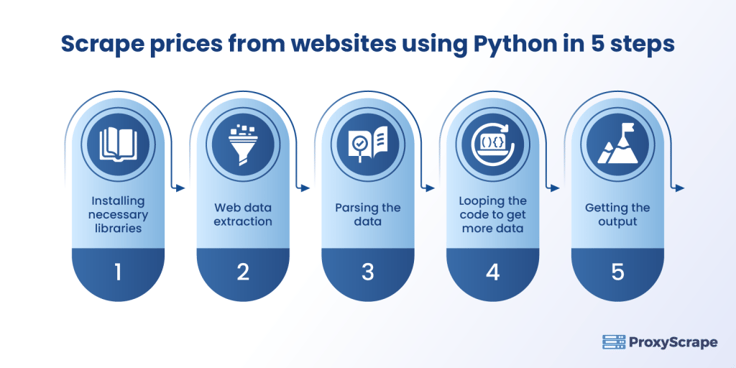

5 Steps to Scrape Prices from Websites using Python

Step 1: Installing Necessary Libraries:

In Python, a library called “BeautifulSoup” parses the HTML of a web page so that you can pull out the data you need, such as prices.

Along with BeautifulSoup, we are using “Pandas” and “requests.” Pandas is used for creating a data frame and performing high-level data analysis, and requests is the HTTP library that fetches pages from websites. If these libraries are not installed yet, install them first (for example, with pip install beautifulsoup4 requests pandas). Then import them with the code shown below:

from bs4 import BeautifulSoup
import requests
import pandas as pd
import urllib.parse

Step 2: Web Data Extraction:

In this example, the name of the website is deliberately not shown. If you follow the legal guidelines mentioned above and the steps below, you can reproduce the result on a website of your choice. Once you have the website address, save it in a variable and check whether the request is accepted. To fetch the page, use the Python code shown below:

seed_url = 'https://example.com/laptops'  # placeholder address; requests needs the scheme (https://)
response = requests.get(seed_url)  # send the request to the website
response.status_code  # 200 is the "OK" status, which means the request was accepted

200

The status_code tells you whether the request succeeded. Here, the status code 200 means the request was accepted. Now that we have the response, the next step is to parse the data.
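A status code other than 200, or a network failure, would break the later steps. As a minimal sketch of handling those cases, the hypothetical `fetch_html` helper below wraps the request. The `get` parameter and the ten-second timeout are assumptions added here, so that the function can be tried without a live website.

```python
import requests


def fetch_html(url, get=requests.get, timeout=10):
    """Return the page body as text, or None if the request fails."""
    try:
        response = get(url, timeout=timeout)
        # raise_for_status() raises requests.HTTPError for 4xx and 5xx codes.
        response.raise_for_status()
    except requests.RequestException as error:
        print(f"Request to {url} failed: {error}")
        return None
    return response.text
```

Calling `fetch_html(seed_url)` returns the HTML text on success. A `None` result signals that there is nothing to parse.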

Step 3: Parsing the Data:

Parsing is the process of converting data from one format to another. In this case, HTML parsing converts the raw HTML of the page into a Python object that you can search and navigate. The following Python code parses the page with the BeautifulSoup library:

soup = BeautifulSoup(response.content, 'html.parser')

By parsing the web page, Python gets access to all of its data, such as names, tags, prices, image details, and page layout details.

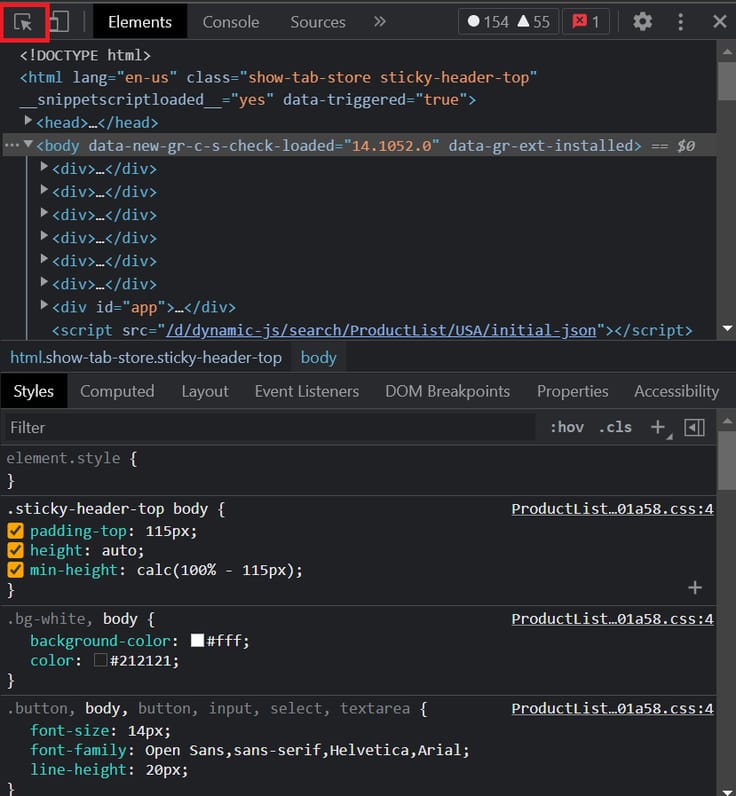

As mentioned above, our objective is to find the prices of laptops on an e-commerce website. The necessary pieces of information for this example are the name of the laptop and its price. To find them, visit the web page you want to scrape. Right-click on the webpage and select the “Inspect” option. You will see a developer tools panel like this:

Use the highlighted option to hover over the laptop name, price, and container. When you do that, the corresponding div code is highlighted in the panel. From there, you can get the class details. Once you have the class details, put them into the Python code below.

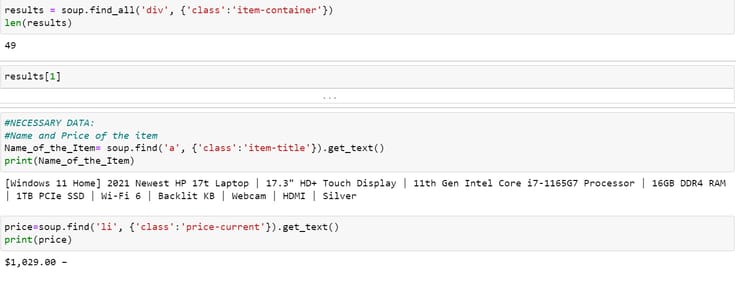

results = soup.find_all('div', {'class':'item-container'})

len(results)

results[1]

#NECESSARY DATA:

#Name and Price of the item

Name_of_the_Item= soup.find('a', {'class':'item-title'}).get_text()

print(Name_of_the_Item)

price=soup.find('li', {'class':'price-current'}).get_text()

print(price)
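One caveat about the snippet above: calling find on the whole soup returns the first match on the page. Searching inside a single container instead keeps each name paired with its own price. The sketch below shows this on a small made-up HTML fragment. The class names come from the example above, while the laptop names and prices are invented for illustration.

```python
from bs4 import BeautifulSoup

# Made-up HTML that mimics the structure described above.
SAMPLE_HTML = """
<div class="item-container">
  <a class="item-title">Laptop A 15.6 inch</a>
  <ul><li class="price-current">$799.99</li></ul>
</div>
<div class="item-container">
  <a class="item-title">Laptop B 14 inch</a>
  <ul><li class="price-current">$1,049.00</li></ul>
</div>
"""

sample_soup = BeautifulSoup(SAMPLE_HTML, "html.parser")
containers = sample_soup.find_all("div", class_="item-container")

# Search inside the first container only, not the whole page.
first = containers[0]
name = first.find("a", class_="item-title").get_text(strip=True)
price = first.find("li", class_="price-current").get_text(strip=True)
print(len(containers), name, price)
```

This prints `2 Laptop A 15.6 inch $799.99`. The `strip=True` argument removes surrounding whitespace from the extracted text.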

Step 4: Looping the Code to Get More Data:

Now you’ve got the price for a single laptop. What if you need the prices of 10 laptops? You can get them by running the same code inside a for loop. The Python code for the loop is shown below.

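Name_of_the_item = []
Price_of_the_item = []

for item in results:  # each item is one product container
    try:
        Name_of_the_item.append(item.find('a', {'class':'item-title'}).get_text())
    except AttributeError:  # find() returned None: no title in this container
        Name_of_the_item.append('n/a')
    try:
        Price_of_the_item.append(item.find('li', {'class':'price-current'}).get_text())
    except AttributeError:  # find() returned None: no price in this container
        Price_of_the_item.append('n/a')

print(Name_of_the_item)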

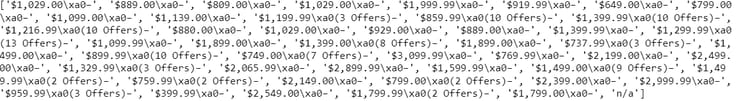

print(Price_of_the_item)

Step 5: Getting the Output:

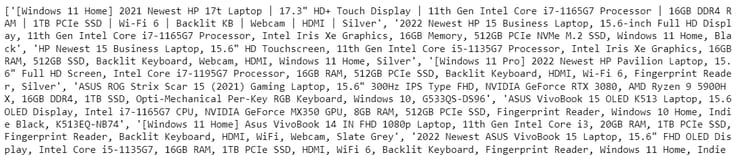

Now that all the steps for web scraping are complete, let us see what the output looks like.

For the name of the laptops:

For the price of the laptops:

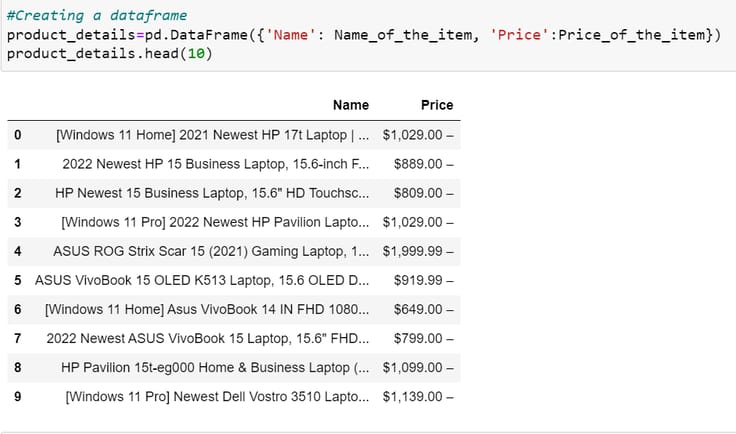

This is not in a readable format. To convert it into a readable format, preferably a table (dataframe), you can use the pandas library. The Python code for this step is shown below.

#Creating a dataframe

product_details=pd.DataFrame({'Name': Name_of_the_item, 'Price':Price_of_the_item})

product_details.head(10)

Now it looks readable. The last step is to save this dataframe in a CSV file for analysis. The Python code to save the dataframe in CSV format is shown below.

product_details.to_csv("Web-scraping.csv")

With this, you can perform a simple competitive analysis focused on product prices. Compared with doing it manually, automated web scraping with Python is efficient and saves you a lot of time.
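Scraped prices are text strings, so they need to be converted to numbers before they can be compared. The sketch below is one possible approach that assumes US-style formatting (a comma as the thousands separator and a dot as the decimal separator). The `parse_price` helper and the sample data are hypothetical.

```python
import re
from decimal import Decimal


def parse_price(text):
    """Extract the first number from a price string such as '$1,049.00'."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    if match is None:
        return None  # no digits found, for example the 'n/a' placeholder
    return Decimal(match.group().replace(",", ""))


# Made-up scraped values, including one missing price.
scraped = {"Laptop A": "$799.99", "Laptop B": "$1,049.00", "Laptop C": "n/a"}

prices = {name: parse_price(text) for name, text in scraped.items()}
priced = {name: value for name, value in prices.items() if value is not None}
cheapest = min(priced, key=priced.get)
print(cheapest, priced[cheapest])
```

This prints `Laptop A 799.99`. `Decimal` is used instead of `float` to avoid rounding surprises with currency values. Prices in other formats would need a different pattern.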

ProxyScrape:

Even when a website allows web scraping, as discussed above, many requests from a single IP address may be rate-limited or blocked. Proxies can help you with this problem.

Proxies help you to mask your local IP address and can make you anonymous online. Doing so can help you to scrape the data from websites without trouble. ProxyScrape is the best place to get premium proxies, as well as free proxies. The advantages of using ProxyScrape are:

- Hides your identity, ensuring you don’t get blocked.

- It can be used on all operating systems.

- Supports most modern web standards.

- No download limit.

- Helps you to perform web scraping without compromising the scraper efficiency.

- 99% uptime guarantee.
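As a rough sketch of how a proxy can be used with the requests library, the code below passes a proxy mapping through the `proxies` parameter of `requests.get`. The `build_proxies` and `fetch_via_proxy` helpers and the proxy host and port are placeholders, not real ProxyScrape endpoints. Use the address and credentials given by your own proxy provider.

```python
import requests


def build_proxies(host, port, username=None, password=None):
    """Build the proxies mapping that requests expects."""
    credentials = f"{username}:{password}@" if username and password else ""
    proxy_url = f"http://{credentials}{host}:{port}"
    # Route both plain HTTP and HTTPS traffic through the same proxy.
    return {"http": proxy_url, "https": proxy_url}


def fetch_via_proxy(url, proxies, timeout=10):
    """Fetch a page through the given proxy and return its HTML text."""
    response = requests.get(url, proxies=proxies, timeout=timeout)
    response.raise_for_status()
    return response.text


# Placeholder proxy address, for illustration only.
proxies = build_proxies("proxy.example.com", 8080)
print(proxies)
```

With a working proxy, `fetch_via_proxy(seed_url, proxies)` could replace the direct `requests.get` call used earlier.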

FAQs:

1. What does a web scraper do?

2. Is it legal to perform web scraping to scrape prices from websites?

3. Do proxies help you to perform web scraping?

Conclusion:

In this article, we have seen how to scrape prices from websites by using Python. Web scraping is an efficient way to get data online. Many startups use web scraping to get the data they need, following all the ethical guidelines, without spending a lot of time and resources. Dedicated web scraping tools are available online for various information, such as prices and product information. You can visit here to learn more about web scraping tools.

We hope this article has given you enough information to answer the question, “How to scrape prices from websites?” In reality, there is no single way to scrape prices from websites. You can either use dedicated web scraping tools or create your own Python scripts. Either way, you can save time and collect a lot of data without difficulty.

Key Takeaways:

- Web scraping is an efficient way to get the data online without spending much time and resources.

- The web scraping process should be carried out by following all the ethical guidelines.

- Python libraries like “BeautifulSoup” are used for web scraping.

- Using proxies helps to perform web scraping without interference.

DISCLAIMER: This article is strictly for learning purposes. Without following the proper guidelines, performing web scraping may be considered an illegal activity. This article does not support illegal web scraping in any shape or form.