TCP Proxy As A Reverse Proxy and A Load Balancer

A Transmission Control Protocol (TCP) proxy operates at the transport layer (layer 4) of the Open Systems Interconnection (OSI) model. The TCP proxy server is an intermediary between the client and the destination server.

The client establishes a connection with the TCP proxy server, which in turn establishes a connection with the destination server. The TCP proxy server therefore acts as a server toward the client and as a client toward the destination, which lets it reach services that restrict connections based on network address.

Some web pages are accessible only from internal machines, and you receive an access denied error message when you try to reach them from elsewhere. However, you can view such a page from a web browser anywhere on the internet by using a proxy on one of the internal machines.

The web server thinks that it is serving the data to a client on the machine running the proxy. However, the proxy forwards the data out of the network to the actual client.

The proxy server accepts connections from multiple clients and forwards them to the server over multiple connections. Each client and server must keep reading and writing data on its own connection, so that a stalled peer does not hang the proxy server.

OSI Model – A Preview

The OSI model conceptualizes the process of computer networking. It has seven layers:

- Physical Layer

- Data Link Layer

- Network Layer

- Transport Layer

- Session Layer

- Presentation Layer

- Application Layer

The transport layer is responsible for transferring data across a network. It mainly uses two protocols: TCP and the User Datagram Protocol (UDP). TCP is the one commonly used when data must arrive reliably and in order, and it governs how the data is sent: it splits the message into segments and sends them from the source to the destination.

Socket Connection

Under normal circumstances, a sender and a receiver exchange chunks of data. Because each of them may be communicating with several machines at the same time, the proxy establishes a separate socket connection for every sender and receiver pair engaged in a communication.

A socket is a logical endpoint of a connection, identified by an IP address and a port number. The proxy establishes a socket connection on both the sender side and the receiver side. The combination of socket addresses at the two ends is unique to the communication between that sender and receiver.

These unique socket addresses ensure that data transmissions can happen in parallel and that packets from one connection are not mixed up with those of another.
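To make the socket address idea concrete, here is a minimal sketch that connects two clients to one listener on the local machine and reports the addresses involved. The function name `open_two_connections` and the use of `127.0.0.1` are assumptions for illustration only.

```python
import socket


def open_two_connections():
    """Connect two clients to one listener and return the addresses seen."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 asks the OS for any free port
    server.listen()
    server_addr = server.getsockname()

    # Two clients connect to the same server address at the same time.
    client_a = socket.create_connection(server_addr)
    client_b = socket.create_connection(server_addr)
    conn_a, peer_a = server.accept()
    conn_b, peer_b = server.accept()

    for sock in (client_a, client_b, conn_a, conn_b, server):
        sock.close()
    return server_addr, peer_a, peer_b


if __name__ == "__main__":
    server_addr, peer_a, peer_b = open_two_connections()
    print("server socket address:", server_addr)
    print("first client socket address:", peer_a)
    print("second client socket address:", peer_b)
```

Both connections share the server's IP address and port, but the operating system gives each client a different source port, so the two data streams stay separate.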

Implementing a Proxy at TCP Layer

A TCP proxy receives incoming traffic and opens an outbound socket through which it forwards that traffic to the destination server. It moves data between the client and the server but does not change the data, because it does not interpret it.

A proxy at this layer has access to the IP address and port number that the client tries to connect to on the backend server. If the server listens for requests on port 3306, for example, the proxy is set up at this layer to listen on that port as well.

The proxy listens on that port and relays the messages to the server. The TCP proxy creates each connection through a socket tied to a single host:port combination.
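The relay described above can be sketched with Python's `asyncio` streams. This is a minimal illustration under stated assumptions, not a production proxy: the names `pipe`, `handle_client`, and `start_proxy` are hypothetical, and the backend address is whatever you pass in.

```python
import asyncio


async def pipe(reader, writer):
    """Copy bytes from reader to writer until EOF, then close the writer."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()  # wait if the receiving side is slow
    finally:
        writer.close()


async def handle_client(client_reader, client_writer, backend_host, backend_port):
    """Open one outbound connection per inbound connection and relay both ways."""
    backend_reader, backend_writer = await asyncio.open_connection(
        backend_host, backend_port
    )
    await asyncio.gather(
        pipe(client_reader, backend_writer),
        pipe(backend_reader, client_writer),
        return_exceptions=True,
    )


async def start_proxy(listen_host, listen_port, backend_host, backend_port):
    """Start listening and return the asyncio server object."""

    async def on_connect(reader, writer):
        await handle_client(reader, writer, backend_host, backend_port)

    return await asyncio.start_server(on_connect, listen_host, listen_port)
```

The proxy never inspects the bytes it copies, which matches the point that a TCP proxy moves data without understanding it. Because each connection runs as its own task, a slow client only delays its own `pipe` and not the others.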

Implementing a proxy at the transport layer is lightweight and fast as the layer is responsible only for the transfer of data.

The proxies act as a medium for passing messages back and forth and cannot read the messages. They help you monitor the network, hide the internal network from the public network, queue connections to prevent server overload, and limit connections. A TCP proxy is well suited to load balancing services that communicate over TCP, such as database traffic to MySQL and PostgreSQL.

TCP Proxy as Reverse Proxy

A reverse proxy accepts a request from a client, forwards it to a server that can fulfill it, and returns the server’s response to the client. You may deploy a reverse proxy even if there is only one server or application.

The reverse proxy is seen publicly by other users on the network. It is implemented at the edge of the site’s network to accept requests from web browsers and mobile apps.

Implementing a TCP proxy as a reverse proxy has the following advantages:

Security – It increases the security of the network. The backend servers are not visible to the outer network, so malicious clients cannot access them directly to exploit any vulnerabilities.

Prevent DDoS Attacks – The reverse proxy shields the backend servers from distributed denial-of-service (DDoS) attacks.

Regulate Traffic – It can reject traffic from a particular client IP address (blacklist), or limit the number of connections from the client.

Scalability and Flexibility – You can change the backend configuration freely because the client sees only the reverse proxy’s address. To balance the load, you can scale the number of servers up and down to match the changing traffic volume.

Web Acceleration – It reduces the time taken to generate a response for the requesting client.

Compression – The proxy compresses the server’s response before returning it to the client, reducing the amount of bandwidth needed to transmit the data over the network.

Encryption – The traffic between the client and the server often requires encryption. Encryption puts an overhead on the client and the server because it is computationally expensive. The reverse proxy performs the encryption and decryption, freeing the backend servers to devote themselves to serving the clients.

Caching – The reverse proxy stores a copy of the response in its local system before serving it to the client. When the client requests the same content again, the reverse proxy serves it from the cache instead of forwarding the request to the server and fetching the response again.
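The traffic regulation point in the list above (rejecting a blacklisted IP address and limiting connections per client) can be sketched as a small bookkeeping class. `ConnectionGate` and its method names are hypothetical, and the IP addresses come from ranges reserved for documentation.

```python
from collections import Counter


class ConnectionGate:
    """Decide whether a new connection from a client IP may be accepted."""

    def __init__(self, blocked_ips, max_per_client):
        self.blocked_ips = set(blocked_ips)
        self.max_per_client = max_per_client
        self.active = Counter()  # open connections per client IP

    def try_accept(self, client_ip):
        """Return True and count the connection, or False to reject it."""
        if client_ip in self.blocked_ips:
            return False
        if self.active[client_ip] >= self.max_per_client:
            return False
        self.active[client_ip] += 1
        return True

    def release(self, client_ip):
        """Call when a connection from client_ip closes."""
        if self.active[client_ip] > 0:
            self.active[client_ip] -= 1


gate = ConnectionGate(blocked_ips={"203.0.113.9"}, max_per_client=2)
print(gate.try_accept("203.0.113.9"))   # blocked address
print(gate.try_accept("198.51.100.4"))  # first connection from this client
```

A proxy would call `try_accept` when a client connects and `release` when the connection ends. Only the client's IP address is needed, which a TCP proxy already has.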

TCP Proxy as a Load Balancer

A load balancer is a proxy that manages the traffic when there are multiple servers. It helps the servers run efficiently and makes it possible to add servers when traffic is high. The load balancer distributes the traffic among the servers and routes client connections to a healthy backend server without interruption.
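One simple way to distribute connections among servers is round robin, where each new connection goes to the next backend in turn. The sketch below is an illustration only. `RoundRobinBalancer`, the backend names, and the `is_healthy` callback are assumptions, not part of any particular product.

```python
import itertools


class RoundRobinBalancer:
    """Hand out backends in rotation, skipping any that are unhealthy."""

    def __init__(self, backends):
        self._backends = list(backends)
        self._cycle = itertools.cycle(self._backends)

    def next_backend(self, is_healthy=lambda backend: True):
        # Try each backend at most once per call.
        for _ in range(len(self._backends)):
            backend = next(self._cycle)
            if is_healthy(backend):
                return backend
        raise RuntimeError("no healthy backend available")


balancer = RoundRobinBalancer(["db1:3306", "db2:3306", "db3:3306"])
for _ in range(4):
    print(balancer.next_backend())
```

The rotation wraps around after the last backend, so a fourth connection lands on the first server again.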

The TCP proxy can use direct server return, in which responses from healthy backend servers go directly to the clients rather than back through the load balancer. In that setup, the backend server, not the load balancer, terminates Secure Sockets Layer (SSL) traffic.

Session Affinity

The TCP traffic between the client and the server supports session affinity. With session affinity, a client’s requests keep going to the same backend server as long as that server is healthy and has capacity.
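A common way to approximate session affinity at the TCP level is to hash the client's IP address and use the result to choose a backend. The sketch below assumes that approach. The function name `pick_backend` and the backend names are hypothetical.

```python
import hashlib


def pick_backend(client_ip, backends):
    """Map a client IP to one backend so repeat connections land together."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


backends = ["app1:8080", "app2:8080", "app3:8080"]
first = pick_backend("203.0.113.7", backends)
second = pick_backend("203.0.113.7", backends)
print(first == second)  # the same client maps to the same backend
```

One caveat of this simple scheme is that the mapping changes when the list of backends changes, for example when an unhealthy server is removed.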

Monitor Servers

The TCP proxy performs health checks on each backend server by periodically monitoring its readiness. A backend server that cannot handle traffic is considered an unhealthy node, and the proxy redirects traffic to other healthy backend servers.
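A basic readiness probe at this layer is simply an attempt to open a TCP connection to the backend. The sketch below assumes that definition of "healthy". The function name `is_healthy` and the one-second timeout are illustrative choices.

```python
import socket


def is_healthy(host, port, timeout=1.0):
    """Return True if a TCP connection to (host, port) can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

A proxy could run this check on a timer for every backend and stop sending new connections to any server that fails. Real deployments often require several consecutive failures before marking a node unhealthy, which this sketch leaves out.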

The TCP proxy exhibits the following characteristics when it acts as a load balancer:

Asynchronous Behavior – A TCP proxy behaves asynchronously: if one client suddenly stops reading from its socket to the proxy, other clients must not notice any interruption of service through the proxy.

Support for Other Protocols – A TCP proxy can carry HTTP as well as other application-layer protocols that run over TCP, such as FTP.

Act as a Reverse Proxy – A user may use a TCP proxy as a reverse proxy, depending on where it is deployed. On the server side, it regulates the traffic from the client to the server.

Window Scale Option

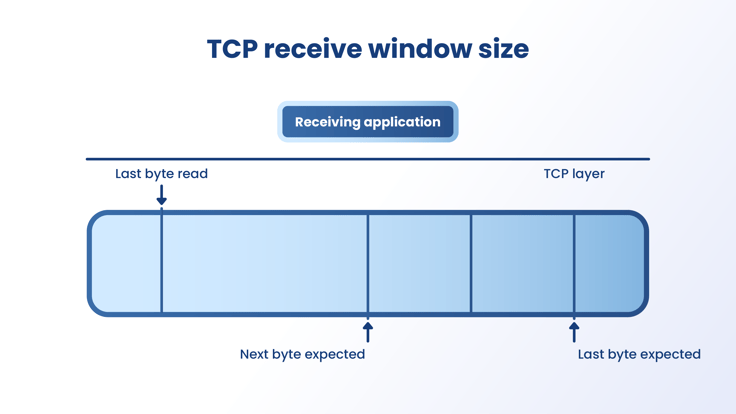

The TCP receive window is the amount of data, in bytes, that a receiver can buffer during a connection. The receiver advertises its window size to the sender, and the sender can transmit only that much data before it must wait for an acknowledgment and a window update.

The sender sends data based on the window size. The Windows TCP/IP stack is designed to tune itself to its environment and to use larger window sizes rather than a single hard-coded default.

The window size is not fixed: TCP adjusts it in increments of the maximum segment size (MSS). The client and the server negotiate the MSS during connection setup. Adjusting the receive window to increments of the MSS increases the percentage of full-sized TCP segments used during bulk data transmissions.

The receive window size is determined in the following manner:

- The client sends the first connection request to the server, advertising a receive window size of 16K (16,384 bytes).

- When the connection is established, the client adjusts the receive window size based on the MSS.

- The window size is adjusted to four times the MSS, to a maximum size of 64K, unless the window scaling option is used.
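The rule above can be worked through with a little arithmetic. This sketch follows the four-times-MSS rule exactly as stated, and assumes the cap is 65,535 bytes, the largest value the 16-bit TCP window field can hold. The function names are hypothetical. The second function shows how the window scale option extends that limit by shifting the advertised value left by up to 14 bits.

```python
MAX_UNSCALED_WINDOW = 65535  # largest value of the 16-bit TCP window field
MAX_SCALE_SHIFT = 14         # largest shift allowed by the window scale option


def adjusted_receive_window(mss):
    """Four times the MSS, capped at the unscaled maximum."""
    return min(4 * mss, MAX_UNSCALED_WINDOW)


def scaled_window(advertised, shift):
    """Effective window when the window scale option is in use."""
    if not 0 <= shift <= MAX_SCALE_SHIFT:
        raise ValueError("shift must be between 0 and 14")
    return advertised << shift


print(adjusted_receive_window(1460))  # 4 * 1460 = 5840
print(scaled_window(65535, 14))       # 65535 * 16384 = 1073725440
```

With a typical Ethernet MSS of 1,460 bytes, four segments fit in the adjusted window. With the maximum shift, the effective window grows to roughly 1 GB.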

Frequently Asked Questions

1. How is a reverse proxy different from a load-balancing proxy?

| Reverse Proxy | Load-balancing Proxy |
|---|---|
| A reverse proxy is an intermediary application that is implemented between the client and the server. | A load-balancing proxy distributes traffic evenly and efficiently across multiple backend servers. |
| Reverse proxies enhance web servers’ security by ensuring that the clients don’t communicate directly with the original server. | There are several backend servers and in the event of a network outage or DDoS attack, a load-balancing proxy helps prevent site shutdowns as traffic can be rerouted to an alternative server. |
| The process: (1) The user makes an HTTP request. (2) The reverse proxy receives it. (3) The reverse proxy either allows or denies the user’s request. (4) If allowed, the reverse proxy forwards the request to the server. (5) If denied, the reverse proxy sends an error message to the client. (6) The server sends the corresponding reply to the reverse proxy. (7) The reverse proxy forwards the server’s response to the client. | The process: (1) The load balancer receives the client’s request. (2) The load balancer sends the request to a single server in the group of backend servers. (3) The selected server sends the response back to the load balancer. (4) The load balancer forwards the server’s response to the user. |
| Examples of some open-source reverse proxies are NGINX, Apache HTTP Server, and Apache Traffic Server. | Examples of some load-balancing algorithms are Hash, Round Robin, and Power of Two Choices. |

2. How is an HTTP proxy different from a TCP proxy?

| HTTP proxy | TCP proxy |
|---|---|
| In a demilitarized zone (DMZ), it is used as a load balancer or a public IP provider to shield the backend servers. | It is used as a reverse proxy for a TCP connection between the client and the server. |
| Creates an HTTP request/response. | Opens a TCP socket connection and moves data through it. |
| HTTP proxy reads the host address and connects to the appropriate host. | TCP proxy doesn’t change the data as it cannot understand it. |
| Besides HTTP, it can serve HTTPS and FTP requests. | It can carry any protocol that runs over TCP, including HTTP and FTP. |

Final Thoughts

A TCP proxy acts as a reverse proxy and also a load balancer. Both types of applications reside between clients and servers, accepting requests from the former and delivering responses from the latter.

Sometimes, the reverse proxy and the load balancer might sound the same and lead to confusion. Exploring when and why you may deploy each of them at a website will help you tell them apart.

Data gathering is an immense task, and it matters to established businesses and startups alike. It involves collecting information on market trends, competitors, and customer preferences to support decision-making.

ProxyScrape offers premium proxies, residential proxies, and dedicated proxies for collecting massive amounts of data from websites. There is a flexible combination of proxies to choose from and the pricing is also affordable. Keep checking our blog for more information on the newly introduced proxies, their uses, and the benefits ProxyScrape offers.