Web scraping has become hugely popular among IT professionals and even intruders. You might already be using the right scraping tools, but you cannot overlook the importance of proxies as a middleman between the scraping software and your target website. While proxies bring numerous benefits, you also need to decide which proxies to use, how to manage them, and which provider to choose for your next web scraping project.

So, we have created this article as a guide to get you started using proxies for web scraping.

Why do you need proxies for web scraping?

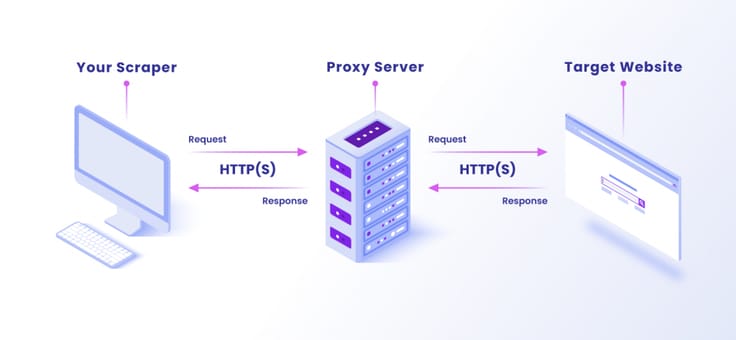

The target website you are scraping can block your IP address if you connect to it too frequently, and it may blacklist you as well. This is where a proxy server comes into play: it masks your IP address and helps prevent you from getting blacklisted. The case for using proxies in web scraping rests on three main points:

Proxies help you to mask your IP address:

When your web scraping software connects to a target website through a proxy server, the proxy masks your IP address. This lets you carry out your scraping activities without the target learning your real IP address, which is one of the significant advantages of using a proxy for web scraping.

Proxies help you bypass the limits set by the target source:

Target websites often limit the number of requests they will accept from a single client in a given amount of time. If the target sees an excessive number of requests coming from your IP address, it will block you. A typical example would be sending thousands of scraping requests within ten minutes.

As a remedy, a rotating proxy setup distributes your requests among several proxies. To the target, the requests then appear to come from several different users instead of a single one, so no individual IP address trips the site's rate limits.
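As a minimal sketch of that idea, the snippet below pairs each URL with the next proxy in round-robin order. The proxy addresses and the `assign_proxies` helper are hypothetical placeholders, and the actual HTTP call is left as a comment so the example stays network-free.

```python
from itertools import cycle

# Hypothetical proxy addresses (documentation IP range); substitute your own.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def assign_proxies(urls, proxies):
    """Pair each URL with the next proxy in round-robin order."""
    rotation = cycle(proxies)
    return [(url, next(rotation)) for url in urls]


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(1, 7)]
    for url, proxy in assign_proxies(urls, PROXIES):
        # With the third-party `requests` library, the call would be roughly:
        # requests.get(url, proxies={"http": proxy, "https": proxy}, timeout=10)
        print(f"{url} -> {proxy}")
```

Round-robin is only one possible policy; picking proxies at random or weighting them by recent success rate would follow the same pattern.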

Proxies allow you to scrape location-specific data:

Certain websites restrict their content to specific countries or geographic locations. For example, trying to scrape US market-share data from a statistics website while connecting from a country in Africa or Asia may land you on an error page.

However, if you route your requests through a US proxy server, the target website sees a US IP address rather than your actual location and serves the data.
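One way to picture this is a pool keyed by country code, from which the scraper picks a proxy that matches the target's region. The sketch below assumes such a mapping exists; the addresses and the `pick_proxy` helper are hypothetical.

```python
import random

# Hypothetical pool keyed by ISO country code; addresses are placeholders.
PROXIES_BY_COUNTRY = {
    "US": ["http://198.51.100.1:8080", "http://198.51.100.2:8080"],
    "DE": ["http://198.51.100.3:8080"],
}


def pick_proxy(country, pool=PROXIES_BY_COUNTRY):
    """Return a random proxy located in `country`, or raise if none exists."""
    candidates = pool.get(country.upper(), [])
    if not candidates:
        raise LookupError(f"no proxy available for country {country!r}")
    return random.choice(candidates)


if __name__ == "__main__":
    # Route a request for US-only content through a US exit address.
    print(pick_proxy("us"))
```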

Types of Proxies available for Web Scraping

Proxies are available as dedicated, shared, and public. Let’s have a quick comparison of these three types to determine which proxy is ideal for web scraping.

With dedicated proxies, the bandwidth and IP addresses are only used by you. In contrast, with shared proxies, you will be sharing all such resources concurrently with other clients. If the other clients also scrape from the same targets as yours, you will likely get blocked. This is because you may exceed the target’s limits when all of you are using a shared proxy.

Public (open) proxies, on the other hand, are freely available but pose real security risks, since many of them are run by people with malicious intent. They are also of low quality: when huge numbers of people connect to the same free proxy at once, speeds drop sharply.

So, going by all the comparisons, dedicated proxies are the ideal choice for your web scraping project.

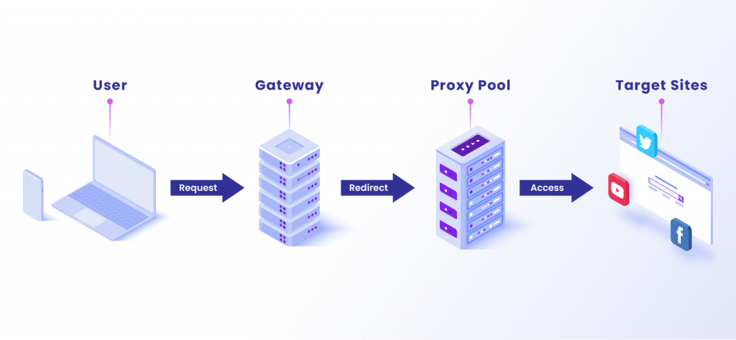

What is a proxy pool and why is it necessary for web scraping?

To sum up what you have learned so far, using a single proxy for your web scraping activities has several drawbacks. It limits both the number of concurrent requests you can send to the target website and the geo-targeting options available to you. You therefore need a pool of proxies that spreads your large volume of requests across different proxies.

Below are the factors that you need to consider when building your proxy pool:

You need to know the number of requests you will send within a given time frame (e.g., 30 minutes). The more requests you send to a specific target website, the larger your proxy pool needs to be, so that each proxy stays below the rate at which the target would block it.

Similarly, you have to take into account the size of the target website. Larger websites are usually equipped with advanced anti-bot countermeasures, so you will need a larger proxy pool to get past them.

Next, you have to factor in the quality and type of the proxy IPs. Quality covers whether the proxies you're using are dedicated, shared, or public, while type covers whether the IPs are datacenter, residential, or mobile IPs. We will dig deeper into proxy IP types in the next section.

Finally, even a sophisticated pool of proxies counts for nothing if you do not know how to manage it systematically. So you need to be aware of, and implement, techniques such as proxy rotation, throttling, and session management.
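The first factor above, sizing the pool from your request volume, comes down to simple arithmetic. The sketch below assumes you have an estimate of how many requests a single IP can send per time window before the target reacts; that per-IP limit is a hypothetical figure you would have to determine for each target, and the numbers in the example are illustrative only.

```python
def estimate_pool_size(total_requests, per_ip_limit):
    """Smallest number of proxies that keeps each one at or below per_ip_limit.

    Both arguments refer to the same time window (e.g., 30 minutes).
    """
    if total_requests < 0 or per_ip_limit <= 0:
        raise ValueError("total_requests must be >= 0 and per_ip_limit > 0")
    # Integer ceiling division: round up so no proxy exceeds its limit.
    return (total_requests + per_ip_limit - 1) // per_ip_limit


if __name__ == "__main__":
    # Illustrative numbers: 30,000 requests per window, 300 per IP.
    print(estimate_pool_size(30_000, 300))
```

In practice you would add headroom on top of this minimum, since some proxies will be banned or slow at any given time.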

What are your Proxy options for Web scraping?

Alongside dedicated, shared, and public proxies, you need to understand the different types of proxy IPs. There are three of them, each with its own pros and cons:

Datacenter IPs

As the name suggests, these are proxies housed in data centers in various locations around the globe. You can quickly build your proxy pool with datacenter IPs to route your requests to the target. They are the type most widely used by web scraping companies, and they cost less than the alternatives.

Residential IPs

Residential IPs are IPs located at residential homes assigned by Internet Service Providers (ISPs). These IPs are a lot more expensive than datacenter proxies but are less likely to be blocked.

Residential IPs also raise legal concerns since you’re using a person’s private network for web crawling activities.

Aside from the higher price and the legal concern above, residential proxies look more legitimate to target websites. Because residential IPs belong to real household connections, they are the least likely to be blocked. They also offer numerous locations to connect from, making them ideal for bypassing geographical barriers.

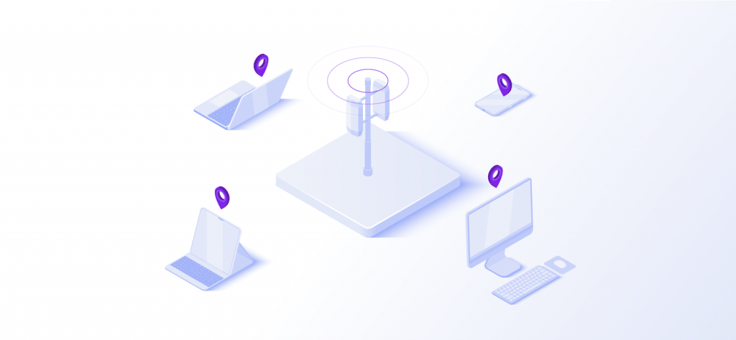

Mobile IPs

Mobile IPs are IPs that mobile network providers assign to mobile devices. They are as expensive as residential IPs. They also raise privacy issues, as the mobile device owner may not know that you're using their network to crawl the web for scraping activities.

Out of the three Proxy IPs, the Residential IPs are the most suited for web scraping.

Managing your Proxy Pool efficiently for web scraping

Having a proxy pool and routing your requests through it without any management plan will not produce fruitful web scraping results. Instead, your proxies will get banned and you will not get high-quality data back.

Some of the challenges that you will have to confront are:

- Identify bans: Your proxies will run into many kinds of bans, such as captchas, redirects, blocks, and ghost banning. Detecting and troubleshooting these bans is the job of the proxy solution you select.

- Retry errors: The proxy solution you select should retry a request when it hits timeouts, bans, errors, etc.

- Geographical targeting: When you want to scrape certain websites from a specific location, you will need to configure your pool to use proxies located in the country of your target.

- Control proxies: Since some targets require that you keep a session with the same proxy, you will need to configure your proxy pool to achieve this.

- User agents: You need to manage user agents so that your requests resemble those of a real user.

- Creating delays: Randomize delays and apply effective throttling techniques to conceal the fact that you're scraping.
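Several of these challenges (ban identification, retries, user agents, and randomized delays) can be combined in one small loop. The following is a rough sketch under stated assumptions: the status codes and text markers treated as bans are guesses that vary by target, the user-agent strings are examples, and `fetch` is a hypothetical caller-supplied function so the example does not depend on any particular HTTP library.

```python
import random
import time

# Assumptions: which responses count as a ban differs from site to site.
BAN_STATUS_CODES = {403, 429}
BAN_MARKERS = ("captcha", "access denied")

# Example user-agent strings; rotate whatever set suits your project.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
]


def looks_banned(status, body):
    """Heuristic ban check: a blocking status code or a captcha-like page."""
    text = body.lower()
    return status in BAN_STATUS_CODES or any(m in text for m in BAN_MARKERS)


def fetch_with_retries(url, proxies, fetch, max_attempts=3,
                       delay_range=(1.0, 3.0), sleep=time.sleep):
    """Try `url` through randomly ordered proxies until one is not banned.

    `fetch(url, proxy, headers)` must return (status_code, body_text) and
    raise OSError (which includes TimeoutError) on connection problems.
    """
    candidates = list(proxies)
    random.shuffle(candidates)
    for proxy in candidates[:max_attempts]:
        headers = {"User-Agent": random.choice(USER_AGENTS)}
        try:
            status, body = fetch(url, proxy, headers)
        except OSError:
            status, body = None, ""
        if status is not None and not looks_banned(status, body):
            return proxy, body
        # Randomized pause before the next attempt, to avoid a fixed rhythm.
        sleep(random.uniform(*delay_range))
    raise RuntimeError(f"all attempts failed for {url}")
```

Passing `fetch` and `sleep` in as parameters keeps the retry logic easy to test with fakes; a real `fetch` would wrap your HTTP client of choice.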

To overcome these challenges, there are three major solutions for you.

In-house Development – In this scenario, you purchase a pool of dedicated proxies and build a proxy management solution yourself to overcome the challenges above. This solution is feasible if you have a highly qualified IT team for web scraping and no budget for a managed alternative.

In-house Development with Proxy Rotator – With this solution, you purchase proxies from a provider that also handles proxy rotation and geographical targeting, so the provider takes care of the primary challenges. However, you will still have to handle session management, ban identification logic, throttling, etc.

Complete Outsourced Solution – The final solution would be to outsource your proxy management entirely to a proxy provider that offers proxies, proxy management, and, in specific situations, the web scraping itself. All you have to do is send a request to the provider’s API, which would return the extracted data.
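If you keep session management in-house, as in the first two options, one simple approach is to pin each logical session to a single proxy until it is released. The class below is a hypothetical sketch of that bookkeeping, not any provider's API.

```python
import random


class StickyProxyPool:
    """Keeps each logical session pinned to one proxy until released."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("proxy list must not be empty")
        self._proxies = list(proxies)
        self._sessions = {}

    def proxy_for(self, session_id):
        """Return the proxy bound to `session_id`, binding one on first use."""
        if session_id not in self._sessions:
            self._sessions[session_id] = random.choice(self._proxies)
        return self._sessions[session_id]

    def release(self, session_id):
        """Forget the binding, e.g. once the session ends or the proxy is banned."""
        self._sessions.pop(session_id, None)


if __name__ == "__main__":
    pool = StickyProxyPool(["http://203.0.113.10:8080", "http://203.0.113.11:8080"])
    # Every request in the "checkout-1" session goes through the same proxy.
    print(pool.proxy_for("checkout-1") == pool.proxy_for("checkout-1"))
```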

Picking the best proxy solution for your web scraping project

By now, you will have realized that web scraping with proxies is no easy task. You have to choose the correct type of proxies and make sound decisions to overcome the challenges described in the last section. Besides, there are various proxy solutions to consider. This section covers the factors that should make your final decision easier.

Although there are several factors to consider when deciding on your proxy solution, the two key elements are budget and technical expertise.

Budget

How much are you willing to spend on your proxies? The cheapest option is usually to purchase proxies from a provider and manage the pool yourself. However, that depends on the technical expertise in your organization. If the expertise is lacking, your best bet is an outsourced solution, provided that you have a sufficient budget. An outsourced solution has some drawbacks, which we will cover a little later.

Technical expertise

Suppose you purchase your proxy pool from a provider for a scraping project of reasonable size and decide to manage it yourself. In that case, you need to make sure that your development team has the right technical skills and the capacity to craft the proxy management logic. Without that expertise, the budget allocated for proxies will go to waste.

Next, we will compare the two main approaches:

In-house vs. outsourced solutions

Purchasing a proxy pool from a provider and managing it by yourself would be an ideal and cost-effective solution. However, to choose this solution, you must have a team of dedicated developers who are willing to learn about managing rotating proxies by themselves. The in-house option would also be suitable if you have a limited budget as you can purchase proxies starting from as low as one dollar.

On the other hand, with an outsourced solution, a proxy provider supplies the entire management solution and can even carry out the web scraping for you. This approach, however, has some drawbacks.

Since these providers have a large clientele, your competitors might be among their clients. You also can't be sure that they're scraping the correct data for you, or whether they are selective about target websites. Finally, these complete proxy management solutions come at a hefty price, which can put you at a disadvantage against competitors.

How ProxyScrape can help you with your web scraping project

In addition to providing free proxies, ProxyScrape also offers ample premium datacenter proxies at reasonable prices. With these proxies, you will gain tremendous benefits such as unlimited bandwidth, a large number of proxies ranging up to 44,000, and great proxies that will always work.

Your ideal option would be to purchase datacenter proxies from ProxyScrape and manage the proxy pool with a dedicated team.

Conclusion

As the need for web scraping rises, proxies play an essential role in it. As you have seen in this article, choosing the right type of proxy solution is a demanding process.

In conclusion, it helps if your organization has a dedicated team of experts with not only overall technical expertise in proxy management but also the ability to make critical decisions, such as whether to go for an in-house or an outsourced solution.