Web scraping for job postings is a shortcut that lets job seekers build a database of current job openings. Randstad says that the average job search may last five to six months, from when an application is made until a position is filled. What if you had a solution that could reduce the burden of surfing through all the job portals and picking the job that suits you?

This article explains how to do web scraping for job postings. So, you are in the job market and trying to find the best job, but you want to work smarter, not harder. Why not build a web scraper to collect and parse job postings for you? Once you set it up, it will provide you with a wealth of data in a nice, tidy format, so you don’t have to check the listings manually again and again. Let’s get started.

- What Is Web Scraping For Job Postings?

- Understanding the URL and Page Structure

- Getting Started with the Scraper

- Getting Basic Elements of Data

- Getting Job Title

- Getting Company Name

- Getting Location

- Getting Salary

- Getting Job Summary

- Frequently Asked Questions

- Wrapping Up

What Is Web Scraping For Job Postings?

Web scraping for job postings is a solution that automatically collects data from multiple job portals and reduces the time you spend fetching data from each website. Having a tool that can provide you with a complete database of job openings simplifies your task considerably. All you have to do is filter for the jobs that suit you and proceed with the application process.

[Disclaimer! Many websites restrict the scraping of data from their pages. Users may be subject to legal issues depending on where and how they attempt to extract the information, so one needs to be extremely careful when scraping sites that host their own data. For example, Facebook, LinkedIn, and Craigslist sometimes object if data is scraped from their pages. So if you want to scrape, scrape at your own risk.]

This is a basic article in which we will cover the fundamentals of web scraping by extracting some helpful information about jobs related to “Data Science” from indeed.com. We will write a program that can be re-run to refresh the job data, so the listings do not have to be checked manually. Two libraries that will be very handy while building this scraper are “requests” and “BeautifulSoup.”

Understanding the URL and Page Structure

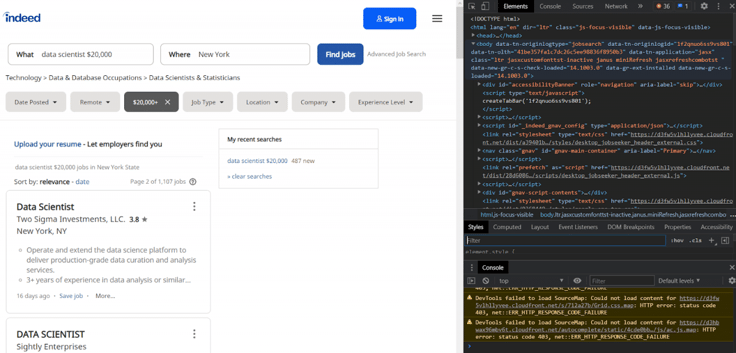

First, let’s have a look at the sample page we are going to extract from Indeed.

The way the URL is structured is significant:

- The “q=” parameter begins the string for the “what” field on the page, with search terms separated by “+” (e.g., “data+scientist”).

- When a salary is specified, the figure is URL-encoded: the dollar sign becomes %24 and each comma in the number becomes %2C (e.g., %2420%2C000 = $20,000).

- The “&l=” parameter begins the string for the city of interest, with “+” separating the words if the city name has more than one (e.g., “New+York”).

- The “&start=” parameter sets the search result at which you want to begin (e.g., start=10 begins at the 10th result).

This URL structure will be a big help as we continue building the scraper and gathering data from multiple pages.
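The URL rules above can be sketched in code. This is only an illustration, not part of the original walkthrough: `build_search_url` and `page_urls` are hypothetical helper names, and the step of 10 results per page is an assumption based on the `start=10` example. The sketch relies on the standard library’s `urllib.parse.urlencode`, which encodes a space as “+”, “$” as %24, and “,” as %2C.

```python
from urllib.parse import urlencode

BASE_URL = "https://www.indeed.com/jobs"


def build_search_url(what, where, start=0):
    """Build a search URL from the "what" field, the city, and the start offset."""
    # urlencode escapes each value: " " -> "+", "$" -> "%24", "," -> "%2C"
    query = urlencode({"q": what, "l": where, "start": start})
    return f"{BASE_URL}?{query}"


def page_urls(what, where, pages, step=10):
    """Return URLs for consecutive result pages (step is an assumed page size)."""
    return [build_search_url(what, where, start=i * step) for i in range(pages)]


url = build_search_url("data scientist $20,000", "New York", start=10)
print(url)
# https://www.indeed.com/jobs?q=data+scientist+%2420%2C000&l=New+York&start=10

for page_url in page_urls("data scientist", "New York", pages=3):
    print(page_url)
```

Building the query from a dictionary avoids hand-escaping the salary figure and makes it easy to vary the start offset when moving from page to page.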

In Chrome, you can examine the page’s HTML structure by right-clicking on the page and choosing the Inspect option. A panel will appear on the right showing the nested element tags, and when you hover your cursor over an element, the corresponding portion of the screen is highlighted.

For this article, I assume that you know the basics of HTML, such as tags and divs, but luckily you don’t need to know everything. You just need to understand the page structure and the hierarchy of its components.

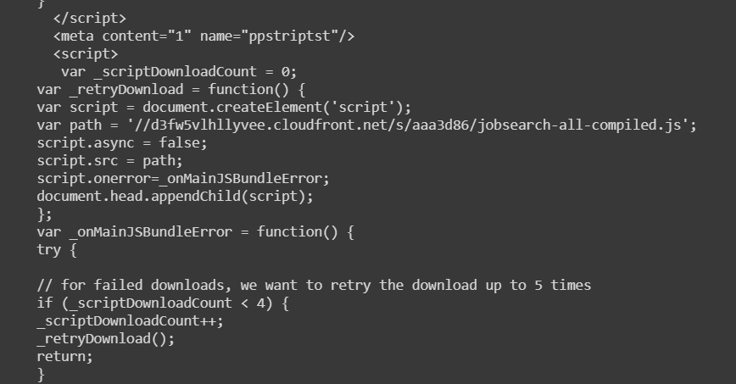
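To make the idea of a tag hierarchy concrete, here is a small sketch that parses a hand-written snippet with BeautifulSoup. The HTML below is an invented stand-in for a single job card, with made-up values; the real markup on Indeed will differ and changes over time.

```python
from bs4 import BeautifulSoup

# Hand-written stand-in for one job card (assumed structure, made-up values).
sample_html = """
<div class="row result">
  <a data-tn-element="jobTitle" title="Data Scientist" href="/job/1">Data Scientist</a>
  <span class="company"> Acme Corp </span>
  <span class="location">New York, NY</span>
</div>
"""

sample_soup = BeautifulSoup(sample_html, "html.parser")

card = sample_soup.find("div", attrs={"class": "row"})  # outer container
link = card.find("a")                                   # tag nested inside it

print(card["class"])     # class can hold several values, so this is a list
print(link["title"])     # attribute value read with tag["attribute"]
print(link.parent.name)  # walking back up the hierarchy gives "div"
print([child.name for child in card.find_all(True, recursive=False)])
```

The last line lists only the direct children of the card, which mirrors what the nested view in the browser’s inspector shows.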

Getting Started with the Scraper

Now that we have analyzed the page structure, we can write code that uses that information to pull out the data of our choice. Let’s start by importing our libraries. Notice that we are also importing “time”, which helps us avoid overwhelming the site’s server when scraping information.

import requests

import bs4

from bs4 import BeautifulSoup

import pandas as pd

import timeWe will first target the single page to withdraw each piece of information we want,

URL = "https://www.indeed.com/jobs?q=data+scientist+%2420%2C000&l=New+York&start=10"

#conducting a request of the stated URL above:

page = requests.get(URL)

#specifying the desired format of "page" using the html parser - this allows python to read the various components of the page, rather than treating it as one long string.

soup = BeautifulSoup(page.text, "html.parser")

#printing soup in a more structured tree format that makes for easier reading

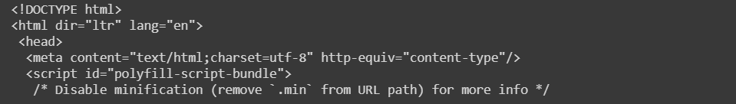

print(soup.prettify())Using prettify makes it easier to have an overview of page’s HTML coding and provides output like this,

Now all the information on our page of interest is in our variable “soup.” We have to dig more into the code to iterate through various tags and sub-tags to capture the required information.
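The “time” import becomes relevant once more than one page is requested. The sketch below shows one possible way to pause between requests; `fetch_pages` and its `delay_seconds` parameter are hypothetical names, and the one-second default is an arbitrary assumption rather than a documented limit. The fetching step is passed in as a callable so that the example runs without touching the network; with the requests library it could be `lambda url: requests.get(url).text`.

```python
import time


def fetch_pages(urls, fetch, delay_seconds=1.0):
    """Fetch each URL in turn, pausing between consecutive requests.

    `fetch` is any callable that takes a URL and returns the page HTML.
    """
    pages = []
    for index, url in enumerate(urls):
        if index > 0:
            time.sleep(delay_seconds)  # pause so the server is not hit in a tight loop
        pages.append(fetch(url))
    return pages


# Demonstration with a stand-in fetcher, so nothing is requested over the network.
def fake_fetch(url):
    return f"<html><body>page for {url}</body></html>"


demo_urls = [
    "https://www.indeed.com/jobs?q=data+scientist&l=New+York&start=0",
    "https://www.indeed.com/jobs?q=data+scientist&l=New+York&start=10",
]
demo_pages = fetch_pages(demo_urls, fake_fetch, delay_seconds=0)
print(len(demo_pages))  # 2
```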

Getting Basic Elements of Data

The five key points for every job posting are:

- Job Title

- Company Name

- Location

- Salary

- Job Summary

If we have a look at the page, there are 15 job postings. Therefore our code should also generate 15 different items. However, if the code provides fewer than this, we can refer back to the page and see what’s not being captured.

Getting Job Title

As can be seen, the entirety of each job posting is under <div> tags, with an attribute “class” = “row result.”

Further, we could also see that job titles are under <a> tags, with the attribute “title = (title)”. One can see the value of the tag’s attribute with tag[“attribute”], so I can use it to find each posting’s job title.

To summarize, the function we are going to see involves the following three steps:

1. Pulling out all the <div> tags with a class including “row”.

2. Identifying <a> tags with the attribute “data-tn-element”:“jobTitle”.

3. For each of these <a> tags, finding the value of the attribute “title”.

def extract_job_title_from_result(soup):

jobs = []

for div in soup.find_all(name="div", attrs={"class":"row"}):

for a in div.find_all(name="a", attrs={"data-tn-element":"jobTitle"}):

jobs.append(a["title"])

return(jobs)

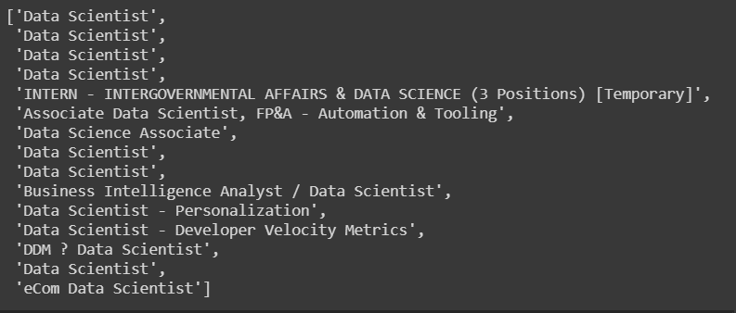

extract_job_title_from_result(soup)This code will yield an output like this,
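Because the live page changes over time, it can help to try the same three steps on a small, hand-written snippet first. The sketch below is a variation rather than the function above: `extract_job_titles` is a hypothetical name, the HTML is invented, and falling back to the link text when the “title” attribute is missing is an added assumption.

```python
from bs4 import BeautifulSoup

# Invented sample markup: two job cards and one unrelated block.
sample_html = """
<div class="row result">
  <a data-tn-element="jobTitle" title="Data Scientist">Data Scientist</a>
</div>
<div class="row result">
  <a data-tn-element="jobTitle">  Machine Learning Engineer </a>
</div>
<div class="footer"><a title="Not a job">About</a></div>
"""


def extract_job_titles(soup):
    """Collect one title per job link, preferring the "title" attribute."""
    titles = []
    for div in soup.find_all("div", attrs={"class": "row"}):
        for a in div.find_all("a", attrs={"data-tn-element": "jobTitle"}):
            # a.get() returns None instead of raising KeyError when "title" is absent
            titles.append(a.get("title") or a.get_text(strip=True))
    return titles


titles = extract_job_titles(BeautifulSoup(sample_html, "html.parser"))
print(titles)  # ['Data Scientist', 'Machine Learning Engineer']
```

The link in the “footer” block is skipped because its parent <div> does not carry the “row” class.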

Getting Company Name

Getting company names can be a bit tricky because most of them appear in <span> tags with “class”:“company”, while others are housed in <span> tags with “class”:“result-link-source”.

We will use if/else statements to extract the company info from each of these places. To remove the white space around the company names in the output, we will call .strip() on the text at the end.

def extract_company_from_result(soup):

companies = []

for div in soup.find_all(name="div", attrs={"class":"row"}):

company = div.find_all(name="span", attrs={"class":"company"})

if len(company) > 0:

for b in company:

companies.append(b.text.strip())

else:

sec_try = div.find_all(name="span", attrs={"class":"result-link-source"})

for span in sec_try:

companies.append(span.text.strip())

return(companies)

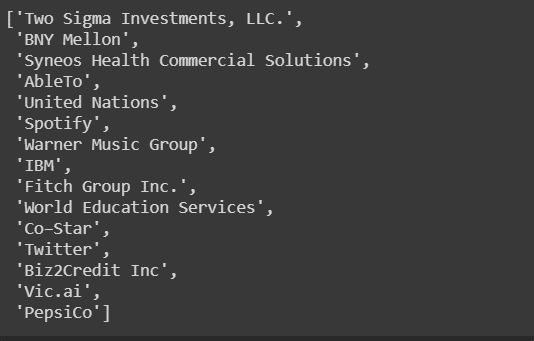

extract_company_from_result(soup)
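One thing to watch with this approach is that a posting with no company tag adds nothing to the list, so the company list can end up shorter than the title list. The sketch below shows one possible way to keep exactly one entry per posting. `extract_companies` is a hypothetical name, the sample HTML is invented, and reusing the “Nothing_found” placeholder from the salary step is an assumption.

```python
from bs4 import BeautifulSoup

# Invented sample markup covering both company locations and a missing one.
sample_html = """
<div class="row result"><span class="company">
    Acme Corp
</span></div>
<div class="row result"><span class="result-link-source"> Globex </span></div>
<div class="row result"><a title="No company listed">Analyst</a></div>
"""


def extract_companies(soup, missing="Nothing_found"):
    """Return exactly one company string per job card."""
    companies = []
    for div in soup.find_all("div", attrs={"class": "row"}):
        span = div.find("span", attrs={"class": "company"})
        if span is None:
            # second try: the alternative location for company names
            span = div.find("span", attrs={"class": "result-link-source"})
        companies.append(span.get_text(strip=True) if span is not None else missing)
    return companies


companies = extract_companies(BeautifulSoup(sample_html, "html.parser"))
print(companies)  # ['Acme Corp', 'Globex', 'Nothing_found']
```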

Getting Location

Locations are found under <span> tags. Span tags are sometimes nested within each other, such that the location text may sometimes be within “class”:“location” attributes, or nested in “itemprop”:“addressLocality”. However, a simple for loop can examine all span tags for text and retrieve the necessary information.

def extract_location_from_result(soup):

locations = []

spans = soup.findAll('span', attrs={'class': 'location'})

for span in spans:

locations.append(span.text)

return(locations)

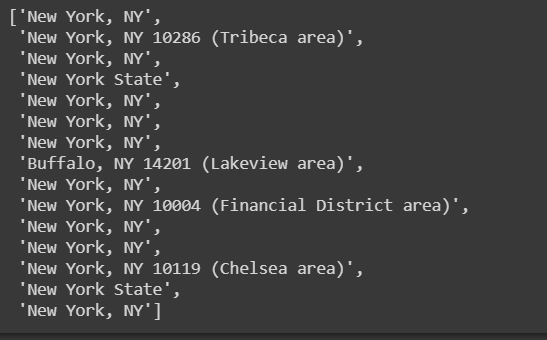

extract_location_from_result(soup)
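The function above only looks at spans with the “location” class. As a sketch of how the “addressLocality” case mentioned earlier might also be covered, the example below checks each job card for either form. `extract_locations` is a hypothetical name, the HTML is invented, and the “Nothing_found” placeholder is an assumption borrowed from the salary step.

```python
from bs4 import BeautifulSoup

# Invented sample markup: a plain location span, an itemprop-only span,
# and a location span that wraps an itemprop span.
sample_html = """
<div class="row result"><span class="location">New York, NY</span></div>
<div class="row result">
  <span itemprop="jobLocation"><span itemprop="addressLocality">Brooklyn, NY</span></span>
</div>
<div class="row result">
  <span class="location"><span itemprop="addressLocality">Queens, NY</span></span>
</div>
"""


def extract_locations(soup, missing="Nothing_found"):
    """Return one location per job card, checking both known span forms."""
    locations = []
    for div in soup.find_all("div", attrs={"class": "row"}):
        tag = div.find("span", attrs={"class": "location"})
        if tag is None:
            tag = div.find("span", attrs={"itemprop": "addressLocality"})
        # get_text() also collects text from spans nested inside the match
        locations.append(tag.get_text(strip=True) if tag is not None else missing)
    return locations


locations = extract_locations(BeautifulSoup(sample_html, "html.parser"))
print(locations)  # ['New York, NY', 'Brooklyn, NY', 'Queens, NY']
```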

Getting Salary

Salary is the most challenging part to extract from job postings. Most postings don’t publish salary information at all, and in those that do, it can appear in more than one place. So we have to write code that can pick up salaries from multiple places, and if no salary is found, we need a placeholder “Nothing_found” value for any jobs that don’t contain a salary.

Some salaries are under <nobr> tags, while others are under <div> tags with “class”:“sjcl”, inside a separate <div> tag with no attributes. Try/except statements can be helpful while extracting this information.

    def extract_salary_from_result(soup):
        salaries = []
        for div in soup.find_all(name="div", attrs={"class": "row"}):
            try:
                salaries.append(div.find("nobr").text)
            except AttributeError:
                # no <nobr> tag in this posting, so try the "sjcl" block instead
                try:
                    div_two = div.find(name="div", attrs={"class": "sjcl"})
                    div_three = div_two.find("div")
                    salaries.append(div_three.text.strip())
                except AttributeError:
                    # neither location held a salary
                    salaries.append("Nothing_found")
        return salaries

    extract_salary_from_result(soup)
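The same lookup order can also be written with explicit None checks instead of nested try/except blocks, which some readers find easier to follow. This is only an alternative sketch: `find_salary` and `extract_salaries` are hypothetical names, and the sample HTML is invented to cover the three cases described above.

```python
from bs4 import BeautifulSoup

# Invented sample markup: <nobr> salary, "sjcl" salary, and no salary at all.
sample_html = """
<div class="row result"><nobr>$100,000 a year</nobr></div>
<div class="row result"><div class="sjcl"><div> $50 an hour </div></div></div>
<div class="row result"><span class="company">Acme Corp</span></div>
"""


def find_salary(card, missing="Nothing_found"):
    """Look for a salary in one job card, trying each known location in order."""
    nobr = card.find("nobr")
    if nobr is not None:
        return nobr.get_text(strip=True)
    sjcl = card.find("div", attrs={"class": "sjcl"})
    if sjcl is not None:
        inner = sjcl.find("div")
        if inner is not None:
            return inner.get_text(strip=True)
    return missing


def extract_salaries(soup):
    return [find_salary(card) for card in soup.find_all("div", attrs={"class": "row"})]


salaries = extract_salaries(BeautifulSoup(sample_html, "html.parser"))
print(salaries)  # ['$100,000 a year', '$50 an hour', 'Nothing_found']
```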

Getting Job Summary

The final task is to get the job summary. However, it is not possible to get the full job summary for each position because it is not included in the HTML of a given Indeed results page. We can still get some information about each job from what’s provided. Selenium can be used to retrieve the rest.

But let’s first try this using BeautifulSoup alone. Summaries are located under <span> tags. As with locations, span tags can be nested within each other, so a simple for loop that examines the span tags for text can retrieve the necessary information.
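As a rough sketch of such a loop, the example below collects the text of every matching <span>. The class name “summary” is an assumption, since the text does not name the attribute to look for, so check the page with the inspector and adjust it. `extract_summaries` is a hypothetical name and the sample HTML is invented.

```python
from bs4 import BeautifulSoup

# Invented sample markup; the "summary" class is an assumed name.
sample_html = """
<div class="row result">
  <span class="summary">Build <b>data science</b> models for pricing.</span>
</div>
<div class="row result">
  <span class="summary">
      Analyze large datasets and report findings.
  </span>
</div>
"""


def extract_summaries(soup, class_name="summary"):
    """Collect the text of each summary span, including text in nested tags."""
    summaries = []
    for span in soup.find_all("span", attrs={"class": class_name}):
        # joining with a space keeps words apart when the text is split by nested tags
        summaries.append(span.get_text(" ", strip=True))
    return summaries


summaries = extract_summaries(BeautifulSoup(sample_html, "html.parser"))
print(summaries)
```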

Frequently Asked Questions

FAQs:

1. Why is it necessary to scrape job details?

2. How can a proxy help in scraping job details?

3. What are the python libraries required to scrape job details?

Wrapping Up

In this article, we have seen what web scraping is and how it can be helpful in our daily lives by taking a practical example of scraping job data from the web pages of Indeed. Please note that the results you get might be different from these, as the pages are dynamic and the information keeps changing with time.

Web scraping is an incredible technique when done properly and according to your requirements. We have also seen the five important aspects of every job posting and how to extract them. When you try this code on your own, you will have scraped data on job postings, and you won’t need to search for the jobs manually. The same technique can also be applied to other web pages, but their structure might differ, so you will need to adapt your code accordingly. The basics covered in this article should carry over to scraping other pages as well.

If you are looking for proxy services, don’t forget to look at ProxyScrape residential and premium proxies.