Automate Your Life Through Web Scraping


Table of Contents

- How Can Web Scraping Automate Your Life?

- Performing the Routine Tasks

- Effective Data Management

- Brand Monitoring

- Price Comparison

- Recruitment

- SEO Tracking

- Proxies For Web Scraping

- Using Free Proxies

- Conclusion

You all know that knowledge is power. To get hold of the most useful information, you need to collect data, and one of the best methods for doing so is web scraping (also called web data extraction): compiling and storing information from websites on the Internet. But why use web scraping when you could perform the same task by copying and pasting data?

The answer is that copying text and saving images is easy for a few pages but practically impossible when you need to extract large volumes of data from a website. Collecting data by copying and pasting can take days or even months. This is where web scraping comes in: it extracts large amounts of data from websites automatically, so collecting data from thousands of pages can take minutes or hours instead. You can then download and export the data to analyze it conveniently.

How Can Web Scraping Automate Your Life?

Time is the most valuable asset in a person’s life. Web scraping saves you time and lets you collect data at a much higher volume. Below are some use cases of web scraping that can automate your life.

Performing the Routine Tasks

You can use web scraping, together with related web automation, to perform daily tasks such as:

- Posting on Facebook, Instagram, and other social media platforms

- Ordering food

- Sending emails

- Buying a product of your choice

- Looking for various jobs

How can web scraping perform these tasks? Consider a job search. Suppose you are unemployed and looking for a job as a business analyst. Every morning you wake up, check Indeed (one of the most prominent job websites), and scroll through multiple pages of new postings. Searching through all these pages can take 20-30 minutes.

You can save that time and effort by automating the process. For instance, you can write a web scraping program that runs every morning and emails you a sorted table with the details of all business analyst postings on Indeed. That way, reviewing the daily job postings takes only a few minutes.
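The scraping step depends on Indeed's page markup, which is not documented and can change at any time, so the minimal sketch below assumes the postings have already been scraped into a list of dicts with hypothetical "title", "company" and "posted" (ISO date) keys. It filters them by keyword, sorts them newest first, and builds the morning email with Python's standard email module. Sending through smtplib is shown only as a comment, with placeholder server details.

```python
from email.message import EmailMessage


def build_job_digest(jobs, keyword, to_addr, from_addr):
    """Filter scraped job postings by keyword and build a digest email.

    Assumption: `jobs` is a list of dicts with hypothetical "title",
    "company" and "posted" (ISO date string) keys produced by your scraper.
    """
    matches = [j for j in jobs if keyword.lower() in j["title"].lower()]
    # Newest postings first; ISO date strings sort chronologically.
    matches.sort(key=lambda j: j["posted"], reverse=True)

    # Plain-text table: one row per posting.
    lines = [f"{'Posted':<12}{'Company':<20}Title"]
    for job in matches:
        lines.append(f"{job['posted']:<12}{job['company']:<20}{job['title']}")

    msg = EmailMessage()
    msg["Subject"] = f"{len(matches)} new '{keyword}' postings"
    msg["From"] = from_addr
    msg["To"] = to_addr
    msg.set_content("\n".join(lines))
    return msg


# Sending would use smtplib; host, port and credentials are placeholders:
# with smtplib.SMTP("smtp.example.com", 587) as smtp:
#     smtp.starttls()
#     smtp.login(user, password)
#     smtp.send_message(msg)

if __name__ == "__main__":
    sample_jobs = [
        {"title": "Business Analyst", "company": "Acme", "posted": "2024-05-02"},
        {"title": "Data Engineer", "company": "Globex", "posted": "2024-05-03"},
    ]
    digest = build_job_digest(sample_jobs, "business analyst", "me@example.com", "bot@example.com")
    print(digest["Subject"])
    print(digest.get_content())
```

Scheduling the script to run each morning (for example with cron or Windows Task Scheduler) completes the automation.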

Effective Data Management

Rather than copying and pasting data from the Internet, you can collect it accurately and manage it effectively with web scraping. Copying data from the web and pasting it somewhere on your computer is a manual process that is tedious and time-consuming. Automated web data extraction instead saves the data in a structured format such as a .csv file or a spreadsheet, which lets you collect far more data than a person ever could by hand. For more advanced web scraping, you can store the data in a cloud database and run your scraper daily.
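As a minimal sketch of turning scraped HTML into a structured file, the code below uses only the standard library: html.parser pulls the text of every cell out of each table row, and csv writes the rows out. The sample markup is made up; a real page would first be downloaded (for example with requests) and may need a more targeted parser.

```python
import csv
import io
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, row by row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the current cell

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def table_to_csv(html):
    """Return the table rows found in `html` as CSV text (no nested tables)."""
    parser = TableParser()
    parser.feed(html)
    out = io.StringIO()
    csv.writer(out).writerows(parser.rows)
    return out.getvalue()


if __name__ == "__main__":
    sample = (
        "<table><tr><th>Product</th><th>Price</th></tr>"
        "<tr><td>Mug</td><td>9.99</td></tr></table>"
    )
    print(table_to_csv(sample))
```

To save the result, write the returned string to a file opened with newline="" so the csv module's line endings are not altered.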

Brand Monitoring

A company’s brand holds significant value. Every brand wants positive online sentiment and wants customers to buy its products instead of its competitors’ products.

Brands use web scraping for:

- Monitoring forums

- Checking reviews on e-commerce websites and social media channels

- Tracking mentions of the brand name

By checking customers’ comments about their products on social media platforms, brands can hear the current voice of their customers and determine whether customers like their products. Web scraping thus lets them quickly spot negative comments and limit damage to the brand’s reputation.
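A deliberately naive sketch of the "spot negative comments" step: given comments you have already scraped, it keeps those that mention the brand and contain a word from a small, hypothetical list of negative terms. Keyword matching misses sarcasm and context, so treat it as a first filter rather than real sentiment analysis.

```python
import re

# Hypothetical word list for illustration only; real sentiment analysis
# would use a trained model or a proper sentiment lexicon.
NEGATIVE_WORDS = {"broken", "refund", "terrible", "worst", "scam", "late"}


def flag_negative_mentions(comments, brand):
    """Return comments that mention `brand` and contain a negative word."""
    # Whole-word, case-insensitive match on the brand name.
    brand_re = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    flagged = []
    for comment in comments:
        if not brand_re.search(comment):
            continue
        words = set(re.findall(r"[a-z']+", comment.lower()))
        if words & NEGATIVE_WORDS:
            flagged.append(comment)
    return flagged


if __name__ == "__main__":
    scraped = ["Love my new Acme kettle!", "Acme sent a broken kettle, want a refund"]
    for comment in flag_negative_mentions(scraped, "Acme"):
        print("Needs attention:", comment)
```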

Price Comparison

If you run a business, you can optimize your existing prices by comparing them with your competitors’ prices, and web scraping lets you do this automatically as the basis for a competitive pricing plan. How does web scraping help create a pricing plan? It lets you collect pricing data for millions of products. Because market demand fluctuates, product prices need to change dynamically, and automated data collection with web scraping keeps that comparison up to date.
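Once competitor prices are scraped, the comparison itself is simple. The sketch below assumes both your prices and the scraped prices are dicts keyed by a shared product ID (a hypothetical data shape; matching the same product across different sites is often the hardest part in practice) and lists the products a competitor undercuts, largest percentage gap first.

```python
def compare_prices(own_prices, competitor_prices):
    """Report products where a competitor sells for less than you do.

    Assumption: both arguments map a shared product ID to a price; the
    competitor prices would come from your scraper.
    Returns (product, own_price, competitor_price, gap_percent) tuples.
    """
    report = []
    for product, own in own_prices.items():
        rival = competitor_prices.get(product)
        if rival is None or rival >= own:
            continue  # not tracked, or we are already cheaper
        gap_pct = round((own - rival) / own * 100, 1)
        report.append((product, own, rival, gap_pct))
    # Largest price gaps first.
    report.sort(key=lambda row: row[3], reverse=True)
    return report


if __name__ == "__main__":
    ours = {"mug": 10.0, "kettle": 40.0, "lamp": 25.0}
    theirs = {"mug": 9.0, "kettle": 30.0, "lamp": 26.0}
    for row in compare_prices(ours, theirs):
        print(row)
```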

Recruitment

Web scraping can help you recruit top talent ahead of your competitors. First, you use web scraping to understand which skills are currently in demand in the market, and then you can hire developers who fit your business needs.
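To gauge which skills are in demand, you can count how many scraped job descriptions mention each skill. The skill list below is a hypothetical example, and exact single-word matching is a simplification: it will not catch multi-word skills such as "machine learning".

```python
import re
from collections import Counter

# Hypothetical skill vocabulary; extend it for the roles you hire for.
SKILLS = ("python", "sql", "javascript", "docker", "aws", "react")


def skill_demand(job_descriptions, skills=SKILLS):
    """Count how many job descriptions mention each skill, most common first."""
    counts = Counter()
    for text in job_descriptions:
        # A set, so each description counts a skill at most once.
        words = set(re.findall(r"[a-z+#]+", text.lower()))
        counts.update(skill for skill in skills if skill in words)
    return counts.most_common()


if __name__ == "__main__":
    descriptions = ["Python and SQL required", "Senior Python developer, AWS a plus"]
    print(skill_demand(descriptions))
```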

SEO Tracking

Search Engine Optimization (SEO) aims to increase website traffic and convert visitors into leads. You can use web scraping to collect large volumes of data on competing websites and get an idea of the keywords they are optimizing for and the content they are posting. Once you collect the data, you can analyze it and draw valuable inferences to develop the strategies that best suit your niche.
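A minimal sketch of the keyword side of SEO tracking, using only the standard library: it reads a competitor page's title, meta description, and h1 to h3 headings, then counts the most frequent words after dropping a small, hypothetical stopword list. The HTML would come from your scraper; the sample page below is made up.

```python
import re
from collections import Counter
from html.parser import HTMLParser

# Hypothetical stopword list; a real project would use a fuller one.
STOPWORDS = {"a", "an", "and", "for", "in", "of", "the", "to", "with", "your"}


class SEOParser(HTMLParser):
    """Collect a page's <title>, meta description and h1-h3 headings."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self.headings = []
        self._current = None  # tag whose text is being collected

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""
        elif tag in ("title", "h1", "h2", "h3"):
            self._current = tag
            if tag != "title":
                self.headings.append("")

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current is not None:
            self.headings[-1] += data

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None


def seo_summary(html, top_n=5):
    """Return the page's title, description, headings and top keywords."""
    parser = SEOParser()
    parser.feed(html)
    headings = [h.strip() for h in parser.headings]
    text = " ".join([parser.title, parser.description, *headings]).lower()
    words = [w for w in re.findall(r"[a-z0-9]+", text) if w not in STOPWORDS]
    return {
        "title": parser.title.strip(),
        "description": parser.description,
        "headings": headings,
        "keywords": Counter(words).most_common(top_n),
    }


if __name__ == "__main__":
    page = (
        "<html><head><title>Cheap Proxies for Web Scraping</title>"
        '<meta name="description" content="Rotating proxies for web scraping.">'
        "</head><body><h1>Web Scraping Proxies</h1></body></html>"
    )
    print(seo_summary(page)["keywords"])
```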

Proxies For Web Scraping

Why are proxies important for scraping data from the web? Below are some reasons to use proxies for safe web data extraction.

- A proxy pool lets you send a higher volume of requests to the target website with less risk of being blocked or banned.

- Proxies let you open many concurrent connections to the same or different websites.

- You can use proxies to make your requests from a specific geographical region, so you can see the content that the website displays for that location.

- Proxies let you crawl a website more reliably because you are less likely to get blocked.
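One simple way to use a proxy pool is round-robin rotation, where each request goes out through the next proxy in the list. The sketch below does that with itertools.cycle and the standard library's urllib.request.ProxyHandler. The proxy addresses are placeholders, and rotation alone is only one strategy; production scrapers usually also retire proxies that keep failing.

```python
import itertools
import urllib.request


class ProxyRotator:
    """Cycle through a pool of "host:port" proxies, one per request."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("proxy pool is empty")
        self._pool = itertools.cycle(proxies)

    def next_proxy(self):
        return next(self._pool)

    def fetch(self, url, timeout=10):
        """Fetch `url` through the next proxy in the pool (network call)."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler(
            {"http": f"http://{proxy}", "https": f"http://{proxy}"}
        )
        opener = urllib.request.build_opener(handler)
        with opener.open(url, timeout=timeout) as response:
            return response.read()


if __name__ == "__main__":
    # Placeholder addresses from the TEST-NET documentation range.
    rotator = ProxyRotator(["203.0.113.10:8080", "203.0.113.11:3128"])
    for _ in range(3):
        print(rotator.next_proxy())
```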

The size of the proxy pool you need depends on several factors:

- The number of requests you make per hour.

- The type of IPs you use as proxies: datacenter, residential, or mobile. Datacenter IPs are usually lower in quality than residential and mobile IPs, but they tend to be more stable because of the nature of their network.

- The quality of the proxies, whether public shared or private dedicated.

- The target websites: larger websites require a larger proxy pool because they implement sophisticated anti-bot countermeasures.
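As a back-of-the-envelope check, you can divide your hourly request volume by the number of requests you assume a single IP can safely send to the target site per hour. That per-IP limit is not something websites publish; it is an assumption you tune for each site, which is where the IP type and the site's anti-bot measures come in. For example, 6,000 requests per hour at an assumed 100 requests per IP per hour needs about 60 proxies.

```python
import math


def estimate_pool_size(requests_per_hour, safe_requests_per_ip_per_hour):
    """Rough lower bound on how many proxies a scraping job needs.

    `safe_requests_per_ip_per_hour` is an assumption you pick per target
    site: stricter anti-bot systems tolerate fewer requests per IP, so the
    same workload then needs a bigger pool.
    """
    if safe_requests_per_ip_per_hour <= 0:
        raise ValueError("safe_requests_per_ip_per_hour must be positive")
    return math.ceil(requests_per_hour / safe_requests_per_ip_per_hour)


if __name__ == "__main__":
    print(estimate_pool_size(6000, 100))
```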

Using Free Proxies

Some websites offer a free proxy list to use. You can use the below code to grab the list of free proxies.

First, you have to make some necessary imports. You have to import Python’s requests and the BeautifulSoup module.

import requests

import random

from bs4 import BeautifulSoup as bsYou have to define a function that contains the URL of the website. You can create a soup object and get the HTTP response.

def get_free_proxies():

url = "https://free-proxy-list.net/"

soup = bs(requests.get(url).content, "html.parser")

proxies = []Then, you have to use a for loop that can get the table of the free proxies as shown in the code below.

for row in soup.find("table", attrs={"id": "proxylisttable"}).find_all("tr")[1:]:

tds = row.find_all("td")

try:

ip = tds[0].text.strip()

port = tds[1].text.strip()

host = f"{ip}:{port}"

proxies.append(host)

except IndexError:

continue

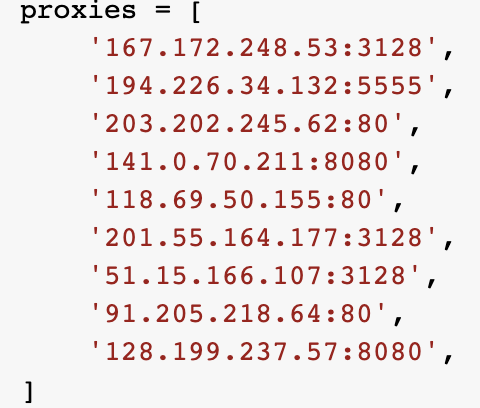

return proxiesThe output below shows some running proxies.
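Free proxies are often slow or already offline, so it helps to test them before use. The sketch below tries to fetch a test URL through each proxy with urllib and keeps the ones that respond, checking them in parallel with a thread pool. The test URL (example.com), the timeout, and the worker count are assumptions; pick a URL you are allowed to request.

```python
import http.client
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def proxy_works(proxy, test_url="http://example.com", timeout=5):
    """Return True if `test_url` can be fetched through `proxy` ("ip:port")."""
    handler = urllib.request.ProxyHandler(
        {"http": f"http://{proxy}", "https": f"http://{proxy}"}
    )
    opener = urllib.request.build_opener(handler)
    try:
        with opener.open(test_url, timeout=timeout) as response:
            return response.status == 200
    except (OSError, http.client.HTTPException):
        # OSError covers URLError, HTTPError, timeouts and refused
        # connections; HTTPException covers malformed proxy responses.
        return False


def filter_working(proxies, check=proxy_works, max_workers=20):
    """Check proxies concurrently and keep only those that pass `check`."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = pool.map(check, proxies)
        return [p for p, ok in zip(proxies, results) if ok]
```

For example, filter_working(get_free_proxies()) would keep only the scraped proxies that currently respond.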


Conclusion

You can save time and collect data at higher volumes from websites using automated web scraping, or web data extraction. It lets you automate many processes, such as ordering a product, sending emails, and looking for jobs on websites, and it saves you shopping time. Manual data extraction is tedious and time-consuming, so automated data collection tools such as web scraping tools can save you time and reduce your effort. You can use web scraping to check your competitors’ product prices, monitor your brand, and automate your tasks. A proxy pool lets you make many requests to the target website with less risk of getting banned. The size of the pool depends on the number of requests you make and the quality of the IPs, such as datacenter or residential IPs.