Data Wrangling in 6 Simple Steps

Data Wrangling is turning out to be a key component of the marketing industry. Statistics say that U.S. revenue on “data processing and related services” will amount to 1,978 billion dollars by 2024. The internet produces millions of data every passing second. Proper usage of these data could highly benefit business people with quality insight.

Data Wrangling is turning out to be a key component of the marketing industry. Statistics say that U.S. revenue on “data processing and related services” will amount to 1,978 billion dollars by 2024. The internet produces millions of data every passing second. Proper usage of these data could highly benefit business people with quality insight. Not all raw data is eligible to undergo the data analysis process. They must undergo some preprocessing steps to meet up desirable formats. This article will let you explore more about one such process called “Data Wrangling.”

Table of Contents

- What Is Data Wrangling?

- Benefits of Data Wrangling

- Data Wrangling and Data Mining

- The Steps of Data Wrangling

- Use Cases of Data Wrangling Process

- Data Wrangling Service by Proxyscrape

- Frequently Asked Questions

- Closing Thoughts

What Is Data Wrangling?

Data Wrangling is the process of transforming raw data into standard formats and making it eligible to undergo the analysis process. This Data Wrangling process is also known as the Data Munging process. Usually, the data scientists will come face with data from multiple data sources. Structuring raw data into a usable format is the first requirement before subjecting them to the analysis phase.

Benefits of Data Wrangling

Data Munging, or the Data Wrangling process, simplifies the job tasks of the data scientists in various ways. Here are some of those benefits.

Quality Analysis

Data analysts may find it easy to work on wrangled data as they are already in the structured format. This will improve the quality and authenticity of the results as the input data are free from errors and noise.

High Usability

Some unusable data that stays for so long turns into data swamps. The Data Wrangling process makes sure all incoming data is turned into usable formats so that they don’t remain unused in data swamps. This increases the data usability to multiple folds.

Removes Risk

Data Wrangling can help the users handle null values and messy data by mapping data from other databases. So the users are risk-free as they are provided with proper data that can help in deriving valuable insights.

Time Efficiency

Data professionals do not have to spend much time dealing with the cleaning and mining process. Data Wrangling supports business users by providing them with suitable data that is ready for analysis.

Clear Targets

Gathering data from multiple sources and integrating them will give business analysts a clear understanding of their target audience. This will let them know where their service works and what the customer demands. With these exact methods, even non-data professionals can find it easy to have a clear idea of their target.

Data Wrangling and Data Mining

Both Data Wrangling and Data Mining work to build valuable business insight from raw data. But, they vary with a few of their functionalities as follows.

| Data Wrangling | Data Mining |

|---|---|

| Subset of Data Mining | Superset of Data Wrangling |

| A Broad set of work that involves data wrangling as a part of it. | A specific set of data transformations that are part of Data Mining. |

| Data Wrangling aggregates and transforms data to qualify them for data analysis. | Data Mining collects, processes, and analyzes the data to find patterns from them. |

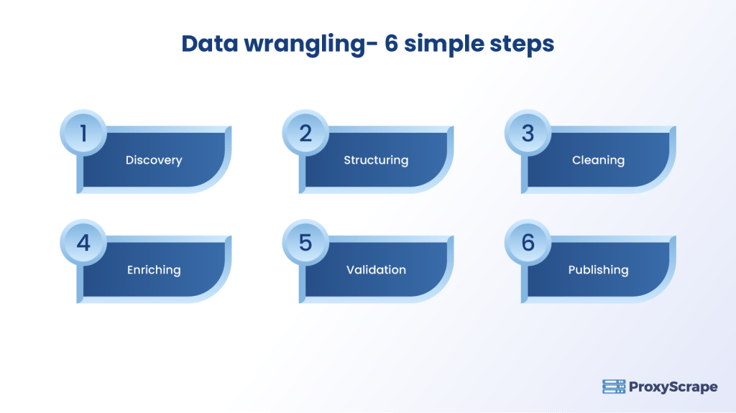

The Steps of Data Wrangling

The Data Wrangling steps comprise 6 necessary and sequential data flow processes. These steps break down the more complex data and map them to a suitable data format.

Discovery

Data discovery is the initial step of the Data Wrangling process. In this step, the data team will understand the data and figure out the suitable approach to handle them. This is the planning stage of other phases. With a proper understanding of the data, data scientists will decide the order of execution, operations to perform, and other necessary processes to enhance data quality.

Example: A data analyst prefers analyzing the visitor counts of a website. In this process, they will go through the visitor’s database and check if there are any missing values or errors to make decisions on the execution model.

Structuring

The unruly data collected from various sources will not have any proper structure. The unstructured data are memory-consuming which will eventually reduce the processing speed. The unstructured data may be data like images, videos, or magnetic code. This structuring phase parses all the data.

Example: The ‘website visitors’ data contains user details, like username, IP address, visitor count, and profile image. In this case, the structuring phase will map the IP addresses with the right location and convert the profile image into the required format.

Cleaning

Data Cleaning works to improve the quality of the data. The raw data may contain errors or bad data that can bring down the quality of the data analysis. Filling null values with zeros or suitable values mapped from another database. Cleaning also involves removing bad data and fixing errors or typos.

Example: The ‘website visitors’ dataset can have some outliers. Consider there is a column that denotes the ‘number of visits from unique users. The data cleaning phase can cluster the values of this column and find the outlier that varies abnormally from other data. With this, marketers can handle outliers and clean the data.

Enriching

This enriching step takes your Data Wrangling process to the next stage. Data Enriching is the process of enhancing the quality by adding other relevant data to the existing data.

Once the data passed the structuring and cleaning phases, enriching the data comes into the picture. Data scientists decide if the need requires any additional input that could help users in the data analysis process.

Example: The ‘website visitors’ database will have visitors’ data. Data scientists may feel some excess input on ‘website performance’ can help the analysis process they will include them as well. Now the visitor count and performance rate will help the analysts to find when and where their plans work.

Validation

Data validation helps users evaluate the data consistency, reliability, security, and quality. This validation process is based on various constraints that are executed through programming codes to ensure the correctness of the processed data.

Example: If the data scientists are collecting information on the visitor’s IP address, they can come up with constraints to decide what kind of values are eligible for this category. That is, the IP address column cannot have string values.

Publishing

Once the data is ready for analytics, the users will organize the wrangled data in a database or datasets. This publishing stage is responsible for delivering quality data to the analysts. The analysis-ready data will then be subject to an analysis and prediction process to build quality business insights.

Use Cases of Data Wrangling Process

Data Streamlining – This Data Wrangling tool continuously cleans and structures incoming raw data. This helps the data analysis process by providing them with current data in a standardized format.

Customer Data Analysis – As Data Wrangling tools collect data from varied sources, they get to know about users and their characteristics with the data collected. Data professionals use Data Science technologies to create a brief study on customer behavior analysis with this wrangled data.

Finance – Finance people will analyze the previous data for developing financial insight for plans. In this case, Data Wrangling helps them with visual data from multiple sources that are readily cleaned and wrangled for analysis.

Unified View of Data – The Data Wrangling process works on the raw data and complex data sets and structures them to create a unified view. This process is responsible for Data Cleaning and Data Mining process through which they improve data usability. This brings together all the raw data usable together into a single table or report making it easy for analysis and visualization.

Data Wrangling Service by Proxyscrape

Proxies supports data management and data analysis with its unique features. While collecting data from multiple sources, users may encounter many possible restrictions, like IP blocks, or geo-restrictions. Proxyscrape furnishes proxies that are capable of bypassing those blocks.

- Using proxy addresses from residential proxy pools can be a wiser choice when collecting data from varied sources. People can use IP addresses from proxy pools, to send each request with a unique IP address.

- The global proxies help them collect data from any part of the world with a suitable IP address. To collect data from a particular country, the proxy will provide you with an IP address of that specific country to remove the geographical restrictions.

- Proxies of Proxyscrape are the highly intuitive user interface. They ensure 100% uptime and so they work around the clock to wrangle the recent data and support data streaming.

- Proxyscrape offers residential proxies, datacenter proxies, and dedicated proxies of all communication protocols. Data wranglers can choose the suitable type as per their requirements.

Frequently Asked Questions

FAQs:

1. What Is Data Wrangling?

2. What are the steps involved in Data Wrangling?

3. How can proxies help Data Wrangling?

4. Is Data Mining different from Data Wrangling?

5. What are the tools required for Data Wrangling?

Closing Thoughts

Data wrangling might sound new to most of the general audience. Data wrangling is a subset of data mining techniques you may use to qualify the raw data for analytical purposes. Proper sequential execution of the mentioned steps will simplify the complexity of data analysis. You can take support from Data Wrangling tools or solutions to automate the process. Proxyscrape, with its anonymity proxies, will ease the Data Wrangling system.