Few sites deal with “big data” on Twitter's scale: over 500 million tweets are exchanged on its platform daily, many of them carrying images, text, and videos. A single tweet can give you information about:

- Number of people who saw the tweet

- The demographics of people who liked or retweeted the tweet

- Total number of clicks on your profile

Unlike many other social media platforms, Twitter has a very friendly, extensive, and free public API that can be used to access data on its platform. It also provides a streaming API to access live Twitter data. However, the APIs limit the number of requests that you can send within a given time window. The need for Twitter scraping arises when you cannot access the desired data through the APIs. Scraping automates the process of collecting data from Twitter so that you can use it in spreadsheets, reports, applications, and databases.
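As a rough illustration of what a request limit within a time window means for a client, the sketch below tracks request timestamps and reports how long to wait before the next call. The class name, the limit of 2 requests per 10 seconds, and the injectable clock are assumptions made for this example; they are not Twitter's actual limits and not part of any Twitter library.

```python
import time
from collections import deque


class WindowRateLimiter:
    """Allow at most `max_requests` calls in any `window_seconds` span."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock      # injectable so the limiter can be tested without waiting
        self._sent = deque()    # timestamps of requests still inside the window

    def seconds_until_allowed(self):
        """Return 0.0 if a request may be sent now, else the wait in seconds."""
        now = self.clock()
        # Forget requests that have fallen out of the window.
        while self._sent and now - self._sent[0] >= self.window_seconds:
            self._sent.popleft()
        if len(self._sent) < self.max_requests:
            return 0.0
        return self.window_seconds - (now - self._sent[0])

    def record(self):
        """Note that a request was just sent."""
        self._sent.append(self.clock())


# Hypothetical limit: 2 requests per 10-second window.
limiter = WindowRateLimiter(max_requests=2, window_seconds=10)
if limiter.seconds_until_allowed() == 0.0:
    limiter.record()  # a real client would send its request here
```

A client that hits the limit can sleep for the returned number of seconds before retrying, instead of sending requests that the API would reject.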

Before diving into the Python code for scraping Twitter data, let’s see why we need to scrape Twitter data.

Feel free to jump to any section to learn how to scrape Twitter using Python!

Table of Contents

- Why Do You Need To Scrape Twitter?

- How to Scrape Twitter Using Python

- Install twitterscraper

- Import Libraries

- Mention Specifications

- Create DataFrame

- Print the Keys

- Extract the Relevant Data

- Why Use Twitter Proxies?

- Which Is the Best Proxy to Scrape Twitter Using Python?

- FAQs:

- Conclusion

Why Do You Need To Scrape Twitter?

You know that Twitter is a micro-blogging site and an ideal space holding rich information that you can scrape. But do you know why you need to scrape this information?

Given below are some of the ways scraping Twitter data helps researchers:

- Understanding your Twitter network and the influence of your tweets

- Knowing who is mentioned through @usernames

- Examining how information disseminates

- Exploring how trends develop and change over time

- Examining networks and communities

- Knowing the popularity/influence of tweets and people

- Collecting data about tweeters, which may include:

  - Friends

  - Followers

  - Favorites

  - Profile picture

  - Signup date, etc.

Similarly, Twitter scraping can help marketers with:

- Effectively monitoring their competitors

- Targeting their marketing audience with relevant tweets

- Performing sentiment analysis

- Monitoring market brands

- Connecting to great market influencers

- Studying customer behaviour

How to Scrape Twitter Using Python

There are many tools available to scrape Twitter data in a structured format. Some of them are:

- Beautiful Soup – A Python package that parses HTML and XML documents and is very useful for scraping Twitter.

- Twitter API – Python wrappers for the Twitter API perform API requests like downloading tweets, searching for users, and much more. You can create a Twitter app to get OAuth keys and access the Twitter API.

- Twitter Scraper – You can use Twitter Scraper to scrape Twitter data by keywords or other specifications.

Let’s see how to scrape tweets for a particular topic using Python’s twitterscraper library.

Install twitterscraper

You can install the twitterscraper library using the following command:

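    !pip install twitterscraper

The leading ! runs the command from a Jupyter notebook cell; omit it when typing the command in a terminal. To pin version 1.6.1 or to upgrade to the latest version, use one of the commands below.

    !pip install twitterscraper==1.6.1

OR

    !pip install twitterscraper --upgrade

Import Libraries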

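You will import two things, i.e., get_tweets and pandas:

    from twitter_scraper import get_tweets
    import pandas as pd

Note that the module imported here is twitter_scraper (with an underscore), which is published on PyPI as twitter-scraper. If the import fails after installing twitterscraper, install twitter-scraper instead.

Mention Specifications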

Let’s suppose we are interested in scraping the following list of hashtags:

- Machine learning

- Deep learning

- NLP

- Computer Vision

- AI

- Tensorflow

- Pytorch

- Datascience

- Data analysis etc.

    keywords = ['machinelearning', 'ML', 'deeplearning',
                '#artificialintelligence', '#NLP', 'computervision', 'AI',
                'tensorflow', 'pytorch', "sklearn", "pandas", "plotly",
                "spacy", "fastai", 'datascience', 'dataanalysis']

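The keyword list above mixes entries with and without a leading #. If you want every entry to be searched as a hashtag, a small helper can normalize them first. This is only a sketch: the helper name to_hashtag and the three sample entries are made up for illustration and are not part of the scraping library.

```python
def to_hashtag(keyword):
    """Return the keyword as one hashtag: no spaces, exactly one leading '#'."""
    return "#" + keyword.strip().lstrip("#").replace(" ", "")


# Sample entries chosen for illustration only.
sample_keywords = ['machinelearning', '#NLP', 'computer vision']
hashtags = [to_hashtag(k) for k in sample_keywords]
print(hashtags)  # ['#machinelearning', '#NLP', '#computervision']
```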
Create DataFrame

We run one iteration to understand how to use get_tweets. The first argument is the topic, here a hashtag, whose tweets we want to collect.

    tweets = get_tweets("#machinelearning", pages=5)

Here tweets is an iterable object that yields one tweet at a time. We create an empty Pandas DataFrame to hold the extracted data using the code below:

    tweets_df = pd.DataFrame()

Print the Keys

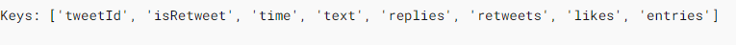

We use the below function to print the keys, and the values obtained.

for tweet in tweets:

print('Keys:', list(tweet.keys()), '\n')

breakThe keys displayed are as:

Extract the Relevant Data

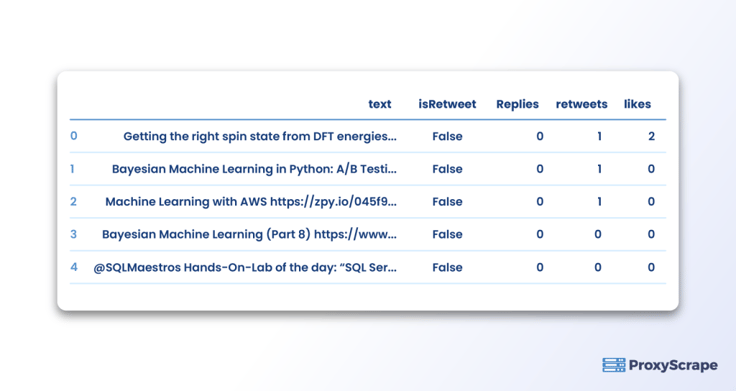

Now, we run the code for one keyword and extract the relevant data. Suppose we want to extract the following data:

- text

- isRetweet

- replies

- retweets

- likes

We can use the for loop to extract this data, and then we can use the head() function to get the first five rows of our data.

for tweet in tweets:

_ = pd.DataFrame({'text' : [tweet['text']],

'isRetweet' : tweet['isRetweet'],

'replies' : tweet['replies'],

'retweets' : tweet['retweets'],

'likes' : tweet['likes']

})

tweets_df = tweets_df.append(_, ignore_index = True)

tweets_df.head()Here’s the dataframe containing our desired data, and you can easily visualize all collected tweets.
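The single-keyword loop above can be extended to a whole list of keywords. The sketch below assumes a fetch callable shaped like get_tweets(keyword, pages=...) that yields tweet dictionaries. The names collect_rows and fake_fetch are hypothetical, and fake_fetch returns made-up data so that the example runs without touching Twitter.

```python
def collect_rows(keywords, fetch, pages=1):
    """Collect one row per tweet for every keyword.

    `fetch` is any callable with the shape fetch(keyword, pages=...) that
    yields tweet dictionaries (assumed here to match get_tweets).
    """
    fields = ("text", "isRetweet", "replies", "retweets", "likes")
    rows = []
    for keyword in keywords:
        for tweet in fetch(keyword, pages=pages):
            row = {field: tweet.get(field) for field in fields}
            row["keyword"] = keyword  # remember which search produced the tweet
            rows.append(row)
    return rows


def fake_fetch(keyword, pages=1):
    """Stand-in for a real scraper so the sketch runs offline (made-up data)."""
    for page in range(pages):
        yield {"text": f"{keyword} tweet {page}", "isRetweet": False,
               "replies": 0, "retweets": page, "likes": 2 * page}


rows = collect_rows(["#NLP", "#AI"], fake_fetch, pages=2)
print(len(rows))  # 4
```

With the real library you would pass get_tweets in place of fake_fetch and then build the table with pd.DataFrame(rows).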

Congratulations on scraping tweets from Twitter. Now, we move on to understand the need for Twitter proxies.

Why Use Twitter Proxies?

Have you ever posted something that you shouldn’t have? Twitter proxies are the best solution for users who cannot afford to leave their legion of followers without fresh content for an extended period. Without them, you could be locked out and lose followers due to a lack of activity. These proxies act on behalf of your computer and hide your IP address from the Twitter servers, so you can access the platform without getting your account blocked.

You also need a proper proxy when you use a scraping tool to scrape Twitter data. For instance, marketers across the world use Twitter automation proxies with scraping tools to scrape Twitter for valuable market information in a fraction of the time.

Residential Proxies – You can use residential proxies that are fast, secure, reliable, and cost-effective. They make for an exceptionally high-quality experience because they are secure and legitimate Internet Service Provider IPs.

Automation tools – You can also use an automation tool when you use a Twitter proxy. These tools help manage multiple accounts because they can handle many tasks simultaneously.

For instance, TwitterAttackPro is a great tool that can handle almost all Twitter duties for you, including:

- Following/unfollowing

- Tweeting/Retweeting

- Replying to a comment

- Favoriting

To use these automation tools, you have to use a Twitter proxy. If you don’t, Twitter may ban your accounts.

Which Is the Best Proxy to Scrape Twitter Using Python?

ProxyScrape is one of the most popular and reliable proxy providers online. It offers three proxy services: dedicated datacenter proxy servers, residential proxy servers, and premium proxy servers. So, what is the best possible proxy to scrape Twitter using Python? Before answering that question, it is best to look at the features of each proxy server.

A dedicated datacenter proxy is best suited for high-speed online tasks, such as streaming large amounts of data (in terms of size) from various servers for analysis purposes. It is one of the main reasons organizations choose dedicated proxies for transmitting large amounts of data in a short amount of time.

A dedicated datacenter proxy has several features, such as unlimited bandwidth and concurrent connections, dedicated HTTP proxies for easy communication, and IP authentication for more security. With 99.9% uptime, you can rest assured that the dedicated datacenter proxy will be available for nearly every session. Last but not least, ProxyScrape provides excellent customer service and will help you to resolve your issue within 24-48 business hours.

Next is a residential proxy. A residential proxy is the go-to choice for general consumers. The main reason is that the IP address of a residential proxy resembles an IP address provided by an ISP. This means getting permission from the target server to access its data will be easier than usual.

The other feature of ProxyScrape’s residential proxy is a rotating feature. A rotating proxy helps you avoid a permanent ban on your account because your residential proxy dynamically changes your IP address, making it difficult for the target server to check whether you are using a proxy or not.
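To make the idea of rotation concrete, here is a minimal client-side sketch that cycles through a small proxy list, one proxy per request. It illustrates the concept only: a rotating residential service changes the IP on the provider's side, and the addresses below are placeholders from a range reserved for documentation, not real proxies. The function name next_proxy_config is hypothetical.

```python
from itertools import cycle

# Placeholder proxy addresses (203.0.113.0/24 is reserved for documentation).
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]

_rotation = cycle(PROXIES)  # endless round-robin over the list


def next_proxy_config():
    """Return a proxies mapping that routes HTTP and HTTPS via the next proxy."""
    proxy = next(_rotation)
    return {"http": proxy, "https": proxy}


# Four consecutive requests would use proxies .10, .11, .12, then .10 again.
for _ in range(4):
    print(next_proxy_config()["http"])
```

The returned mapping uses the scheme-to-URL shape that both urllib.request.ProxyHandler and the proxies argument of the requests library accept.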

Apart from that, the other features of a residential proxy are: unlimited bandwidth along with concurrent connections, dedicated HTTP/s proxies, proxies available for any session thanks to a pool of more than 7 million proxies, username and password authentication for more security, and last but not least, the ability to change the country server. You can select your desired server by appending the country code to the username authentication.

The last one is the premium proxy. Premium proxies are the same as dedicated datacenter proxies. The functionality remains the same. The main difference is accessibility. In premium proxies, the proxy list (the list that contains proxies) is made available to every user on ProxyScrape’s network. That is why premium proxies cost less than dedicated datacenter proxies.

So, what is the best possible proxy to scrape Twitter using Python? The answer would be “residential proxy.” The reason is simple. As said above, the residential proxy is a rotating proxy, meaning that your IP address changes dynamically over time. This lets you send a lot of requests within a small time frame without getting an IP block.

The next best thing is the ability to change the proxy server based on the country. You just have to append the country ISO_CODE at the end of the IP authentication or username and password authentication.
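A sketch of what appending a country code to the username could look like when building a proxy URL is shown below. The separator, the host proxy.example.com, the port, and the credentials are all placeholders invented for this example; the exact format is provider-specific, so check your provider's documentation before relying on it.

```python
from urllib.parse import quote


def build_proxy_url(username, password, host, port, country=None, separator="-"):
    """Build an authenticated proxy URL, optionally tagging the username with a country.

    The "<username><separator><ISO_CODE>" shape is an assumption for
    illustration, not a documented provider format.
    """
    if country:
        username = f"{username}{separator}{country.upper()}"
    # Percent-encode credentials so characters such as '@' or ':' stay valid in a URL.
    user = quote(username, safe="")
    secret = quote(password, safe="")
    return f"http://{user}:{secret}@{host}:{port}"


url = build_proxy_url("user", "p@ss", "proxy.example.com", 8080, country="us")
print(url)  # http://user-US:p%40ss@proxy.example.com:8080
```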

FAQs:

1. How to scrape Twitter using python?

2. Is it legal to scrape Twitter?

3. What is the best proxy to scrape Twitter using python?

Conclusion

We discussed how you can scrape Twitter using Twitter APIs and scrapers. You can use a Twitter scraper to scrape Twitter by specifying keywords and other specifications, just as we did above. Social media marketers who want more than one Twitter account for a wider reach have to use Twitter proxies to prevent account bans. The best proxies for this are residential proxies, which are fast and much less likely to get blocked.

I hope you got an idea about how to scrape Twitter using Python.