
Have you ever run into error codes while using proxies, for instance while web scraping, and grown frustrated because you didn't know what caused the error or how to resolve it? Then this post is for you, as well as for anybody else interested in learning about proxy error codes and how to fix them.

We'd also like to share some helpful tips on preventing proxy errors altogether.

So, without further ado, let’s get started.

What is a Proxy Error?

Under normal circumstances, when your device requests a web page, the proxy server forwards the request to the destination server and relays the response back to you.

However, sometimes the web page is no longer available or has moved to a new location. In such cases, the server returns an error message, which reaches you through the proxy server. These messages come as HTTP status codes, which you'll learn about in the next section. You'll also find out how to resolve some of them so you can continue using the proxy.

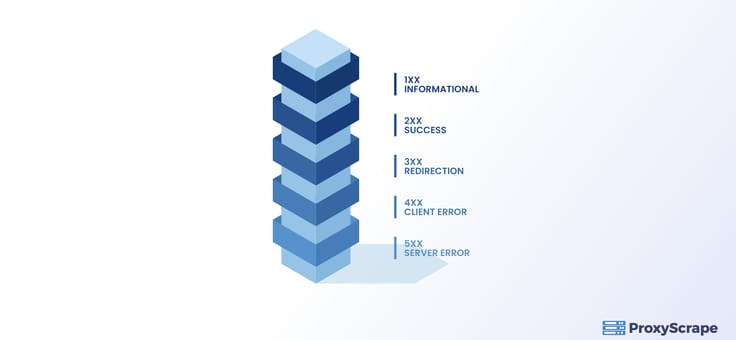

HTTP status codes: As described above, every response carries an HTTP status code that tells you whether the request was completed. HTTP status codes are grouped into five classes according to their first digit.
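If you handle status codes in a script, the class is easy to derive from the first digit. Here is a minimal Python sketch using only the standard library; the helper names `status_class` and `describe` are our own, while `http.HTTPStatus` supplies the standard reason phrases.

```python
from http import HTTPStatus

# The first digit of a status code determines its class.
STATUS_CLASSES = {
    1: "Informational",
    2: "Successful",
    3: "Redirection",
    4: "Client error",
    5: "Server error",
}


def status_class(code: int) -> str:
    """Return the class name for a status code in the 100-599 range."""
    if not 100 <= code <= 599:
        raise ValueError(f"not a valid HTTP status code: {code}")
    return STATUS_CLASSES[code // 100]


def describe(code: int) -> str:
    """Combine the code, its standard reason phrase (if known), and its class."""
    try:
        phrase = HTTPStatus(code).phrase
    except ValueError:  # codes Python's http module doesn't know about
        phrase = "Unknown"
    return f"{code} {phrase} ({status_class(code)})"
```

For example, `describe(407)` returns `"407 Proxy Authentication Required (Client error)"`.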

1XX Informational status codes

You won't encounter these responses very often. They are interim replies that a server sends while it is still processing a request.

100 – Continue

This code indicates that the server has received the initial part of the request (its headers) and that the client may proceed to transmit the rest, typically the request body. In a typical case, the client sends the “Expect: 100-continue” request header, and the server responds with a 100 status code. Including “Expect” in the initial request lets the client avoid sending a large body if the server is going to reject the request based on its headers anyway.
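To make the exchange concrete, here is a minimal sketch of the client side: it builds only the request line and headers, then decides from the server's interim status line whether to transmit the body. The function names are hypothetical; a real client would read that status line from a socket, and it may also go ahead and send the body if the server doesn't answer within a reasonable time.

```python
def build_upload_head(host: str, path: str, body_length: int) -> bytes:
    """Build the request line and headers for an upload that waits for 100 Continue."""
    lines = [
        f"POST {path} HTTP/1.1",
        f"Host: {host}",
        f"Content-Length: {body_length}",
        "Expect: 100-continue",
        "",  # blank line ends the header section
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def should_send_body(interim_status_line: str) -> bool:
    """Send the body only if the server replied with "100 Continue".

    Any other status, such as "417 Expectation Failed" or "401 Unauthorized",
    means the server has already decided on the request, so the body is skipped.
    """
    parts = interim_status_line.split()
    return len(parts) >= 2 and parts[1] == "100"
```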

101 – Switching Protocols

When a browser wants to change the communication protocol during a session, the web server delivers a 101 status code. When the client browser requests a protocol switch (via the Upgrade header) and the server agrees, the server returns the “101 – Switching Protocols” HTTP status code.

102 – Processing (WebDAV)

Complex requests may take longer than usual for the web server to process. When a client's browser makes a WebDAV request that contains numerous sub-requests with complex requirements, the server may need some time to process it, so it sends the code “102 – Processing.” This tells the client that the server has received the request and is still working on it, which helps prevent client-side timeouts.

103 – Early Hints

The web server sends “103 – Early Hints” before its final response, while it is still preparing that response. It carries preliminary headers (typically Link headers) so the browser can start preloading resources such as stylesheets and scripts before the final response arrives.

2XX Successful status codes

When you receive an HTTP status code between 200 and 299, the proxy server has forwarded your request to the web server and received a successful response. Apart from code 200 (OK), which means the request succeeded, the 2XX codes that can cause problems for a scraper are:

204 – No content

The server successfully processed the request that the proxy delivered, but it returned no content in the response body. Hence this HTTP message is not an error message: some requests don't need a reply, or the intended destination has nothing to return.

Solution: If you expected data, check your proxy settings and make sure the web server actually returns content for your request.

206 – Partial content

You receive only a portion of the requested content. Servers normally send a 206 response when the request includes a Range header asking for part of a resource.

To address this issue, double-check that you have configured the scraper appropriately to receive the full data stream you want.
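Because a 204 or 206 response still counts as a success, a scraper can silently end up with missing data. The sketch below, assuming you have the status code, the response headers as a plain dict, and the body length, checks whether a response really holds the whole resource by parsing the `Content-Range` header that accompanies a 206 response. The function name is hypothetical; also note that real HTTP libraries usually treat header names case-insensitively, while this plain dict does not.

```python
import re
from typing import Mapping

# Matches e.g. "bytes 0-499/1234"; a total of "*" means the size is unknown.
CONTENT_RANGE = re.compile(r"bytes (\d+)-(\d+)/(\d+|\*)")


def has_full_content(status: int, headers: Mapping[str, str], body_length: int) -> bool:
    """Return True only if the response body holds the entire resource."""
    if status == 204:
        return False  # request succeeded, but there is no body to scrape
    if status == 200:
        return True
    if status != 206:
        return False  # not a successful content response at all
    match = CONTENT_RANGE.fullmatch(headers.get("Content-Range", "").strip())
    if match is None or match.group(3) == "*":
        return False  # we can't prove the range covers the whole resource
    first, last, total = (int(g) for g in match.groups())
    return first == 0 and last == total - 1 and body_length == total
```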

3XX Redirection status codes

3XX codes indicate that further action is required on your end to complete the request.

When you use a browser like Google Chrome or Safari, these status codes are not an issue, because the browser follows redirects automatically. They can become one when you scrape the web with your own scripts, since a script only follows redirects if it (or the HTTP library it uses) is set up to do so.

Web browsers typically stop after a limited number of consecutive redirects for the same request (around 20 in current versions of Chrome and Firefox), since redirect chains can loop forever.
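If your scraper handles redirects itself, it should enforce the same kind of limit. The sketch below follows 3XX responses (including 303, 307 and 308) until it reaches a non-redirect status, detects a loop, or exceeds `max_redirects`. The `fetch` callable is a hypothetical stand-in for your HTTP client and is assumed to return the status code and the `Location` header without following redirects on its own.

```python
from typing import Callable, Optional, Tuple
from urllib.parse import urljoin

REDIRECT_CODES = {301, 302, 303, 307, 308}

# Hypothetical fetch function: returns (status code, Location header or None)
# for a URL, without following redirects itself.
Fetch = Callable[[str], Tuple[int, Optional[str]]]


def resolve_redirects(url: str, fetch: Fetch, max_redirects: int = 20) -> Tuple[str, int]:
    """Follow 3XX responses; return the final URL and its status code."""
    seen = {url}
    for _ in range(max_redirects + 1):
        status, location = fetch(url)
        if status not in REDIRECT_CODES or location is None:
            return url, status
        next_url = urljoin(url, location)  # Location may be a relative URL
        if next_url in seen:
            raise RuntimeError(f"redirect loop detected at {next_url}")
        seen.add(next_url)
        url = next_url
    raise RuntimeError(f"gave up after more than {max_redirects} redirects")
```

Keeping the set of visited URLs catches short loops immediately, while the counter catches long chains that never repeat a URL.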

The following are some of the most frequent 3XX status codes:

302 – Found (temporary redirect)

This status code means that the requested resource temporarily resides at a different URL, which the server provides in the Location header, and your browser redirects the request there. Because the move is temporary, you should keep using the original URL for future requests.

301 – Moved Permanently

This status code means that the site you requested is still accessible, but it has permanently moved to a new URL, which the server provides in the Location header. As a result, you should use the updated URL for future visits.

4XX Client status codes

This class of status codes indicates that the problem originated on your end, so you may need to double-check your browser or scraping script. Because the issue stems from your own browser or scraping tool, it is usually easier to track down and fix.

400 – Bad Request

It's a general response indicating that something is wrong with the request you sent, so your proxy server or the destination website can't understand it. Likely causes include malformed syntax, invalid message framing, or deceptive request routing.

401 – Unauthorized

This type of HTTP error occurs when a user tries to visit a website without providing the required authentication credentials. If the proxy you're using requests the target website without the proper authorization, the target server returns a 401 error, and the proxy passes it back to you.

To overcome a 401 error, you'll need to log in to the website with the proper credentials.
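How you supply credentials depends on the site; a 401 response names the expected scheme in its WWW-Authenticate header. Assuming the site uses HTTP Basic authentication (many sites use login forms or tokens instead), the header can be built like this. The helper name is hypothetical.

```python
import base64
from typing import Dict


def basic_auth_header(username: str, password: str) -> Dict[str, str]:
    """Build an Authorization header for HTTP Basic authentication."""
    # Basic auth is just "username:password", base64-encoded; it is not encryption,
    # so only send it over HTTPS.
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}
```

You can pass the result as the `headers` argument of `urllib.request.Request` or the equivalent option in your HTTP client.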

402 – Payment Required

The HTTP 402 Payment Required status code is a client error status code reserved for future use.

In some cases, it means that the request can't be completed until the client pays. It was originally created to enable digital cash or (micro)payment systems, signaling that the requested content isn't available until the customer pays. However, there is no universally accepted convention for its use, and different services apply it to different situations.

403 – Forbidden

The proxy or web server understands your request but refuses to fulfill it, returning a 403 code. This happens when you don't have authorization to access a resource. As a solution, you need to obtain appropriate permission before accessing the resource.

404 – Not Found

A 404 error means that the server can't find the requested resource, for example because it was deleted or moved to a different location. Even though your request itself is valid, the web server returns a 404 error code, and the proxy server passes it back to you.

To fix this error, double-check the URL.

405 – Method Not Allowed

This error occurs when the server recognizes the request method, but the target resource doesn't support it. For example, sending a DELETE request to remove a resource on a website that doesn't allow you to delete it.
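A server that returns 405 must list the methods the resource does support in an Allow response header, so a scraper can check that list before retrying. A minimal sketch with hypothetical helper names; method names are compared case-sensitively, as HTTP defines them.

```python
from typing import Set


def allowed_methods(allow_header: str) -> Set[str]:
    """Parse an Allow header such as "GET, HEAD, OPTIONS" into a set of methods."""
    # An empty Allow header means the resource currently allows no methods.
    return {m.strip() for m in allow_header.split(",") if m.strip()}


def can_send(method: str, allow_header: str) -> bool:
    """Return True if the method appears in the server's Allow header."""
    return method in allowed_methods(allow_header)
```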

406 – Not Acceptable

The server cannot produce a response that matches the list of acceptable values defined in the request's proactive content negotiation headers (such as Accept, Accept-Language, or Accept-Encoding), and it is unwilling to fall back to a default representation.

407 – Proxy Authentication Required

When a proxy server requires authentication and you haven't provided valid credentials, it returns a 407 status code. Unlike many of the other issues, this one is easy to solve. Double-check that the username and password you provided are accurate. If you use IP authentication instead, this code means you haven't whitelisted your device's IP address for the proxy. If you're still having trouble, I recommend contacting your proxy provider.
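With username/password authentication, the credentials usually travel in the proxy URL. Here is a minimal sketch using Python's standard `urllib.request.ProxyHandler`, which turns credentials embedded in the proxy URL into a Proxy-Authorization header. The host, port, and helper name are placeholders, and we assume your provider accepts Basic credentials over an HTTP proxy endpoint.

```python
import urllib.request
from urllib.parse import quote


def build_proxy_opener(host: str, port: int, username: str, password: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends every request through an authenticated proxy."""
    # Percent-encode the credentials so characters like "@" or ":" don't break the URL.
    creds = f"{quote(username, safe='')}:{quote(password, safe='')}"
    proxy_url = f"http://{creds}@{host}:{port}"
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)
```

Calling `opener.open("https://example.com")` then routes the request through the proxy; if you still get a 407, the credentials themselves are the likely culprit.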

429 – Too many requests

This error is easy to understand: it occurs when you send too many requests to the target website in a short period.

It typically happens when users run bots or scraping programs to extract large amounts of data within a short time.

To avoid this error message, use high-quality proxies from reputable providers.

In most scenarios, a decent pool of rotating proxies gets the job done. If you access the target website from a different IP address at regular intervals, say every 10 minutes, you lower the chance of getting banned.
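Rotation on a fixed schedule, as described above, can be sketched in a few lines. This hypothetical `TimedProxyRotator` hands out one proxy at a time and moves to the next once `interval_s` seconds (600, i.e. 10 minutes, by default) have passed. The proxy URLs you feed it are up to you, and the `clock` parameter exists only so the behaviour can be tested without waiting.

```python
import time
from typing import Callable, Sequence


class TimedProxyRotator:
    """Hand out one proxy at a time and switch to the next after a fixed interval."""

    def __init__(self, proxies: Sequence[str], interval_s: float = 600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        if not proxies:
            raise ValueError("need at least one proxy")
        self._proxies = list(proxies)
        self._interval = interval_s
        self._clock = clock
        self._index = 0
        self._started = clock()  # start of the current proxy's time slot

    def current(self) -> str:
        """Return the proxy to use now, rotating if one or more intervals have elapsed."""
        elapsed_slots = int((self._clock() - self._started) // self._interval)
        if elapsed_slots:
            self._index = (self._index + elapsed_slots) % len(self._proxies)
            self._started += elapsed_slots * self._interval
        return self._proxies[self._index]
```

Call `current()` before each request and pass the result to your HTTP client's proxy setting. Some providers rotate IPs on their side, in which case a client-side rotator isn't needed.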

5XX Server Error codes

These errors usually arise from a fault within the server while it processes your request. For instance, the server may be offline, or it may have crashed while handling the request. Alternatively, there could be a fatal or syntax error in the server's code, or its database server may have crashed.

So as you can see, these errors are largely beyond your control. Having said that, there are several precautions you can take to avoid them. For instance, you could switch to a different proxy network or IP type, and rotate your proxies frequently. Residential proxies are well suited to rotation.

Let's look at the most common types of 5XX errors:

500 – Internal Server Error

This error results from an unexpected fault on the server, such as a crash or the server going offline. If the server is yours, rebooting it is a straightforward remedy, though it won't always work. If it isn't, the best you can do is wait and retry the request later.

501 – Not Implemented

The “Not Implemented” error occurs when the server does not support the functionality required to fulfill your request. Most likely, your request uses a method that the server does not recognize.

502 – Bad Gateway

This error occurs when a server acting as a gateway or proxy receives an invalid response from an upstream server. It is quite common during data gathering.

For example, when your provider's super proxy (the gateway server that routes your traffic) can't connect or forward requests, typically because no IPs are available for the parameters you selected, your bot receives a 502 code.

To fix this issue, clear your cache and try connecting to the website without the proxy server. If the error persists, contact your system administrator.

503 – Service Unavailable

This error occurs when a server receives a request while it is overloaded with other requests or down for planned maintenance. In the case of maintenance, track the server's progress if you have sufficient privileges.

In web scraping scenarios, this error can also occur because the target website has detected that you're accessing it through a proxy and is blocking that proxy. Rotating proxies can help you avoid this.
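One way to apply this is to retry a refused request through the next proxy in your pool. In the sketch below, `fetch` is a hypothetical callable that requests `url` through `proxy` and returns the status code. Treating 403, 429 and 503 as signs of blocking is our assumption, not a rule, since those codes can also have the other causes described in this post.

```python
from typing import Callable, Iterable, Optional, Tuple

# Assumption: in a scraping context these codes often mean "this IP is refused".
BLOCK_CODES = {403, 429, 503}


def fetch_with_rotation(
    url: str,
    proxies: Iterable[str],
    fetch: Callable[[str, str], int],
) -> Tuple[Optional[str], Optional[int]]:
    """Try each proxy in turn; return the first (proxy, status) that isn't a block.

    If every proxy is refused, return (None, last status seen).
    """
    last_status: Optional[int] = None
    for proxy in proxies:
        last_status = fetch(url, proxy)
        if last_status not in BLOCK_CODES:
            return proxy, last_status
    return None, last_status
```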

504 – Gateway Timeout

A gateway timeout occurs when a server acting as a gateway, such as a proxy, does not receive a timely response from the destination web server. The likely cause is that the web server is still processing the request, but the proxy server can't wait any longer.

In most cases, the remedy is to contact your proxy provider.

Best practices to overcome HTTP error codes

Now you know which scenarios generate HTTP error codes. Let's look at some best practices for avoiding them in the first place.

- Residential proxies: These proxies provide a large pool of IPs, so you can rotate them to avoid being blocked by destination websites. ProxyScrape provides high-quality residential proxies; please visit our page for further information.

- Improve rotation: You could use a proxy management tool to accomplish this task, which keeps too many requests from being made with the same IP address.

- Reduce the number of requests: Sending many requests at once can make the destination website suspicious. You can avoid this by setting a delay between requests.

- Use a high-performance scraper: A high-performance scraper, combined with all the factors mentioned above, will help you get past the barriers that websites set.
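Setting a delay between requests, as suggested in the list above, can be enforced with a small throttle. This is a hypothetical helper, not part of any library: call `wait()` before each request, and it sleeps only as long as needed to keep at least `min_interval_s` seconds between requests. `clock` and `sleep` are injectable so the logic can be tested without real delays.

```python
import time
from typing import Callable, Optional


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(
        self,
        min_interval_s: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self._interval = min_interval_s
        self._clock = clock
        self._sleep = sleep
        self._last: Optional[float] = None  # time of the previous request

    def wait(self) -> None:
        """Call before each request; sleeps only for the remainder of the interval."""
        now = self._clock()
        if self._last is not None:
            remaining = self._interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now += remaining
        self._last = now
```

In a scraping loop, create one `Throttle(2.0)` and call its `wait()` immediately before each request; the 2-second interval here is only an example value.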

Conclusion

Now you know the common types of proxy errors you're likely to encounter. Ideally, you should avoid these errors in the first place so you can scrape websites and carry out other tasks with proxies without any hindrance.

We hope you'll follow the guidelines in this article and put them to good use.