Web Scraping for News Articles Using Python: Best Way in 2026

News is the best way to learn what is happening worldwide. For data engineers, news articles are also a great source of large amounts of data. More data means more insights, and insights are what drive innovation in technology. But there is a lot of news, and it is humanly impossible to collect all of it manually. What is the best way to get the data automatically? The answer is web scraping for news articles using Python.

In this article, we will create a web scraper to scrape the latest news articles from different newspapers and store them as text. We will go through the following two steps to see in depth how the whole process is done.

- Surface-level introduction to web pages and HTML.

- Web scraping using Python and the famous library called BeautifulSoup.

Feel free to jump to any section to learn more about how to perform web scraping for news articles using Python.

Table of Contents

- Surface-level Introduction to Web Pages and HTML

- Web Scraping news articles Using BeautifulSoup in Python

- Which Is the Best Proxy for Web Scraping for News Articles Using Python?

- FAQs:

- Wrapping Up

Surface-level Introduction to Web Pages and HTML

If we want to extract important information from any website or webpage, it is important to know how that website works. When we go to a specific URL using any web browser (Chrome, Firefox, Edge, etc.), the web page we see is a combination of three technologies:

HTML (HyperText Markup Language): HTML defines the content of the webpage. It is the standard markup language for adding content to the website. For example, if you want to add text, images, or any other stuff to your website, HTML helps you do that.

CSS (Cascading Style Sheets): CSS is used for styling web pages. It handles all the visual design you see on a specific website.

JavaScript: JavaScript is the brain of a webpage. It handles all the logic and web page functionality, which makes the content and style interactive.

These three technologies allow us to create and manipulate every aspect of a webpage.

For this article, I assume you know the basics of a web page and HTML. Some HTML concepts like divs, tags, headings, etc., will be very useful while creating this web scraper. You don’t need to know everything, only the basics of how a webpage is designed and how the information is contained in it, and we are good to go.

Web Scraping news articles Using BeautifulSoup in Python

Python has several packages that allow us to scrape information from a webpage. We will continue with BeautifulSoup because it is one of the most famous and easy-to-use Python libraries for web scraping.

BeautifulSoup is well suited to parsing a page’s HTML content and accessing it by tags and attributes. This makes it convenient to extract specific pieces of text from a website.

With only 3-5 lines of code, we can extract text from the website of our choice, which shows that it is an easy-to-use yet powerful package.

We start from the very basics. To install the library, run the following command in your Python environment (the leading ! is only needed inside a notebook such as Jupyter):

    ! pip install beautifulsoup4

We will also use the requests module, as it provides BeautifulSoup with any page’s HTML code. To install it, run:

    ! pip install requests

The code in this article also passes 'html5lib' as the parser, which is a separate package:

    ! pip install html5lib

The requests module will allow us to get the HTML code from the web page, which we then navigate using the BeautifulSoup package. The two commands that will make our job much easier are:

find_all(element tag, attribute): This function takes tag and attributes as its parameters and allows us to locate any HTML element from a webpage. It will identify all the elements of the same type. We can use find() instead to get only the first one.

get_text(): Once we have located a given element, this command allows us to extract the inside text.
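To make the two commands concrete, here is a minimal sketch that runs on a small hand-written HTML string instead of a live page. The markup and the class name "headline" are made up for illustration, and the built-in "html.parser" is used here only so that the snippet needs no extra parser package.

```python
from bs4 import BeautifulSoup

# A tiny hand-written page (hypothetical markup, not taken from any real site).
html = """
<html><body>
  <h2 class="headline"><a href="/story-1">First story</a></h2>
  <h2 class="headline"><a href="/story-2">Second story</a></h2>
  <h2 class="other">Not a headline</h2>
</body></html>
"""

# "html.parser" ships with Python, so no extra parser package is needed here.
soup = BeautifulSoup(html, "html.parser")

# find_all returns every matching element in a list-like object.
headlines = soup.find_all("h2", class_="headline")

# find returns only the first match (or None when nothing matches).
first = soup.find("h2", class_="headline")

# get_text extracts the text inside an element.
titles = [h.get_text() for h in headlines]

# Attributes of an element are read with dictionary-style access.
first_link = first.find("a")["href"]

print(titles)
print(first_link)
```

The same pattern applies to a real page: only the tag name and the attribute values passed to find_all change.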

To navigate our web page’s HTML code and locate the elements we want to scrape, we can right-click on the page and choose the ‘inspect element’ option, or press Ctrl+U to view the source code of the webpage.

Once we locate the elements of interest, we will get the HTML code with the requests module and extract those elements with BeautifulSoup.

For this article, we will work with the English edition of the El País newspaper. We will scrape the news article titles from the front page and then the text.

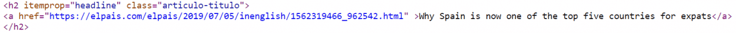

If we inspect the HTML code of the front page, we will see that each article title has the following structure.

The title is an <h2> element with the attributes itemprop="headline" and class="articulo-titulo". Inside it is an <a> element whose href attribute contains the link and whose text is the title. So we will now extract the text using the following commands:

    import requests
    from bs4 import BeautifulSoup

Once we get the HTML content using the requests module, we can save it into the coverpage variable:

    # URL of the front page
    url = "https://elpais.com/elpais/inenglish.html"

    # Request
    r1 = requests.get(url)
    r1.status_code

    # We'll save in coverpage the cover page content
    coverpage = r1.content

Next, we will define the soup variable:

    # Soup creation
    soup1 = BeautifulSoup(coverpage, 'html5lib')

In the following line of code, we will locate the elements we are looking for:

# News identification

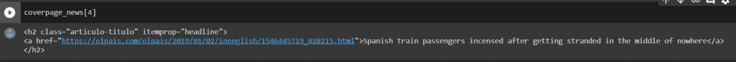

coverpage_news = soup1.find_all('h2', class_='articulo-titulo')Using final_all, we are getting all the occurrences. Therefore it must return a list in which each item is a news article,

To be able to extract the text, we will use the following command:

    coverpage_news[4].get_text()

If we want to access the value of an attribute (in our case, the link held by the <a> element inside the title), we can use the following command:

    coverpage_news[4].find('a')['href']

This will allow us to get the link in plain text.
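The code in this article assumes that every href is already a full URL. If a page uses relative links instead, they have to be resolved against the page address before they can be requested. A minimal sketch using only the standard library follows; the helper name absolute_link and the example paths are hypothetical.

```python
from urllib.parse import urljoin

# Address of the page on which the links were found.
base_url = "https://elpais.com/elpais/inenglish.html"


def absolute_link(href, base=base_url):
    """Resolve an href against the page it was found on (hypothetical helper)."""
    return urljoin(base, href)


# A relative path (made up for illustration) is resolved against the site root.
relative = absolute_link("/inenglish/example-article.html")

# An href that is already absolute is returned unchanged.
already_absolute = absolute_link("https://elpais.com/elpais/other.html")

print(relative)
print(already_absolute)
```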

If you have grasped all the concepts up to this point, you can web scrape any content of your own choice.

The next step involves accessing each of the news article’s content with the href attribute, getting the source code to find the paragraphs in the HTML code, and finally getting them with BeautifulSoup. It’s the same process as we described above, but we need to define the tags and attributes that identify the news article content.

The code for the full functionality is given below. I will not explain each line separately, as the code is commented and can be understood by reading those comments.

number_of_articles = 5# Empty lists for content, links and titles

news_contents = []

list_links = []

list_titles = []

for n in np.arange(0, number_of_articles):

# only news articles (there are also albums and other things)

if "inenglish" not in coverpage_news[n].find('a')['href']:

continue

# Getting the link of the article

link = coverpage_news[n].find('a')['href']

list_links.append(link)

# Getting the title

title = coverpage_news[n].find('a').get_text()

list_titles.append(title)

# Reading the content (it is divided in paragraphs)

article = requests.get(link)

article_content = article.content

soup_article = BeautifulSoup(article_content, 'html5lib')

body = soup_article.find_all('div', class_='articulo-cuerpo')

x = body[0].find_all('p')

# Unifying the paragraphs

list_paragraphs = []

for p in np.arange(0, len(x)):

paragraph = x[p].get_text()

list_paragraphs.append(paragraph)

final_article = " ".join(list_paragraphs)

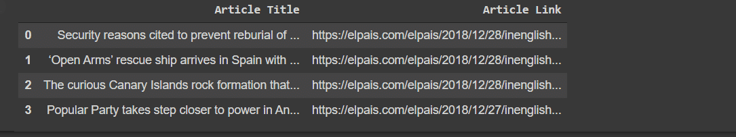

news_contents.append(final_article)Let’s put the extracted articles into the following:

- A dataset that will input the models (df_features).

- A dataset with the title and the link (df_show_info).

    import pandas as pd

    # df_features
    df_features = pd.DataFrame(
        {'Article Content': news_contents})

    # df_show_info
    df_show_info = pd.DataFrame(
        {'Article Title': list_titles,
         'Article Link': list_links})

    df_features
    df_show_info

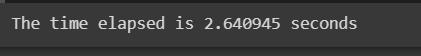

To provide a better user experience, we may also want to measure how long the script takes to get the news. To make that possible, we wrap the whole process in a function that can then be called and timed. Again, I will not explain every line, as the code is commented; you can read those comments to get a clear understanding.

    import pandas as pd
    import requests
    from bs4 import BeautifulSoup

    def get_news_elpais():

        # url definition
        url = "https://elpais.com/elpais/inenglish.html"

        # Request
        r1 = requests.get(url)

        # We'll save in coverpage the cover page content
        coverpage = r1.content

        # Soup creation
        soup1 = BeautifulSoup(coverpage, 'html5lib')

        # News identification
        coverpage_news = soup1.find_all('h2', class_='articulo-titulo')

        number_of_articles = 5

        # Empty lists for content, links and titles
        news_contents = []
        list_links = []
        list_titles = []

        for n in range(number_of_articles):

            # only news articles (there are also albums and other things)
            if "inenglish" not in coverpage_news[n].find('a')['href']:
                continue

            # Getting the link of the article
            link = coverpage_news[n].find('a')['href']
            list_links.append(link)

            # Getting the title
            title = coverpage_news[n].find('a').get_text()
            list_titles.append(title)

            # Reading the content (it is divided in paragraphs)
            article = requests.get(link)
            article_content = article.content
            soup_article = BeautifulSoup(article_content, 'html5lib')
            body = soup_article.find_all('div', class_='articulo-cuerpo')
            x = body[0].find_all('p')

            # Unifying the paragraphs
            list_paragraphs = []
            for p in x:
                list_paragraphs.append(p.get_text())
            final_article = " ".join(list_paragraphs)

            news_contents.append(final_article)

        # df_features
        df_features = pd.DataFrame(
            {'Content': news_contents})

        # df_show_info
        df_show_info = pd.DataFrame(
            {'Article Title': list_titles,
             'Article Link': list_links,
             'Newspaper': 'El Pais English'})

        return (df_features, df_show_info)
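The function itself does not record how long it runs. One possible way to time a call is sketched below with the standard library’s time.perf_counter. The helper timed and the stand-in fake_scraper are hypothetical names used so that the snippet runs without network access; in practice, get_news_elpais would be passed in instead.

```python
import time


def timed(func, *args, **kwargs):
    """Call func and return (result, elapsed_seconds). Hypothetical helper."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed


def fake_scraper():
    """Stand-in for a scraping function such as get_news_elpais."""
    time.sleep(0.05)  # pretend to wait for the network
    return ["article 1", "article 2"]


articles, seconds = timed(fake_scraper)
print(f"Fetched {len(articles)} articles in {seconds:.2f} s")
```

Calling timed(get_news_elpais) would return the tuple of DataFrames together with the elapsed time in seconds.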

Which Is the Best Proxy for Web Scraping for News Articles Using Python?

ProxyScrape is one of the most popular and reliable proxy providers online. It offers three proxy services: dedicated datacenter proxy servers, residential proxy servers, and premium proxy servers. So, which is the best HTTP proxy for web scraping for news articles using Python? Before answering that question, it is best to look at the features of each proxy server.

A dedicated datacenter proxy is best suited for high-speed online tasks, such as streaming large amounts of data (in terms of size) from various servers for analysis purposes. It is one of the main reasons organizations choose dedicated proxies for transmitting large amounts of data in a short amount of time.

A dedicated datacenter proxy has several features, such as unlimited bandwidth and concurrent connections, dedicated HTTP proxies for easy communication, and IP authentication for more security. With 99.9% uptime, you can rest assured that the dedicated datacenter will always work during any session. Last but not least, ProxyScrape provides excellent customer service and will help you to resolve your issue within 24-48 business hours.

Next is the residential proxy. Residential proxies are the go-to choice for general consumers. The main reason is that the IP address of a residential proxy resembles an IP address provided by an ISP. This means the target server is more likely to grant access to its data.

The other feature of ProxyScrape’s residential proxy is a rotating feature. A rotating proxy helps you avoid a permanent ban on your account because your residential proxy dynamically changes your IP address, making it difficult for the target server to check whether you are using a proxy or not.

Apart from that, the other features of a residential proxy are unlimited bandwidth and concurrent connections, dedicated HTTP/S proxies, proxies available for any session thanks to a pool of more than 7 million proxies, username and password authentication for more security, and, last but not least, the ability to change the country server. You can select your desired server by appending the country code to the username authentication.

The last one is the premium proxy. Premium proxies are the same as dedicated datacenter proxies. The functionality remains the same. The main difference is accessibility. In premium proxies, the proxy list (the list that contains proxies) is made available to every user on ProxyScrape’s network. That is why premium proxies cost less than dedicated datacenter proxies.

So, which is the best HTTP proxy for web scraping for news articles using Python? The answer is the residential proxy. The reason is simple. As said above, the residential proxy is a rotating proxy, meaning that your IP address changes dynamically over time. This makes it possible to send many requests within a short time frame without getting an IP block.

Another advantage is the ability to change the proxy server based on the country. You just have to append the country ISO code at the end of the IP authentication or username and password authentication.
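As a rough sketch of how a proxy with username and password authentication can be plugged into the scraper, the snippet below builds the mapping that requests accepts through its proxies argument. The host, port, and credentials are placeholders, not real ProxyScrape values, and build_proxies is a hypothetical helper. The exact username format for selecting a country is provider-specific and is not shown here.

```python
from urllib.parse import quote


def build_proxies(username, password, host, port):
    """Return a mapping in the shape requests accepts via `proxies`."""
    # Percent-encode credentials so characters such as '@' or ':' do not break the URL.
    auth = f"{quote(username, safe='')}:{quote(password, safe='')}"
    proxy_url = f"http://{auth}@{host}:{port}"
    return {"http": proxy_url, "https": proxy_url}


# All values below are placeholders for illustration only.
proxies = build_proxies("my-user", "p@ss:word", "proxy.example.com", 8080)
print(proxies["https"])

# With requests installed, the mapping would be passed like this:
# import requests
# response = requests.get("https://elpais.com/elpais/inenglish.html",
#                         proxies=proxies, timeout=10)
```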

FAQs:

1. What is the best way to scrape news articles using python?

2. Is it okay to scrape news articles from the website?

3. How do I scrape Google News using Python?

Wrapping Up

In this article, we have seen the basics of web scraping by understanding the basics of web page design and structure. We have also gained hands-on experience by extracting data from news articles. Web scraping can do wonders if done correctly. For example, a model can be built on the extracted data to predict categories and show summaries to the user. The most important thing to do is figure out your requirements and understand the page structure. Python has some very powerful yet easy-to-use libraries for extracting the data of your choice, which makes web scraping easy and fun.

It is important to note that this code is useful for extracting data from this particular webpage. If we want to do it for any other page, we need to adapt our code to that page’s structure. But once we know how to identify the relevant elements, the process is exactly the same.

This article has aimed to explain in depth the practical approach to web scraping for news articles using Python. One thing to remember is that a proxy is very important for web scraping, because it helps to prevent IP blocks from the target server. ProxyScrape provides a reliable residential proxy for your projects involving web scraping for news articles using Python.