Table of Contents

- Web Scraping In Python

- Import requests:

- GET request:

- Extract the Content:

- Import BeautifulSoup:

- Create a soup object:

- Extract Useful Data:

- Use .text attribute:

- Regular Expressions In Python

- Step 01:

- Step 02:

- Step 03:

- Step 04:

- Data Visualization In Python

- Why Are Proxies Needed For Web Scraping?

- Using Proxies In Python

- Conclusion

Web scraping is the method of collecting and restructuring data from websites. It can also be defined as the programmatic approach of obtaining website data in an automated manner. For instance, suppose you want to extract the email IDs of all the people who commented on a Facebook post. You can do this in two ways. First, you can point the cursor at a person's email address, copy it, and paste it into a file. This method is known as manual scraping. But what if you want to gather 2000 email IDs? Second, with the help of a web scraping tool, you can extract all the email IDs in about 30 seconds, instead of the roughly 3 hours that manual scraping would take.

You can use web scraping tools to extract information from websites. Many of these tools are point-and-click, so no programming knowledge is required. They are resource-efficient and save time and cost. You can scrape millions of pages based on your needs without worrying about network bandwidth. Some websites implement anti-bot measures that discourage scrapers from collecting data, but good web scraping tools have built-in features to bypass these measures and deliver a seamless scraping experience.

Web Scraping In Python

Python has excellent tools for scraping data from the web. For instance, you can import the requests library to retrieve content from a webpage and bs4 (BeautifulSoup) to extract the relevant information. You can follow the steps below to scrape the web in Python. We will be extracting information from the cleveland.com article whose URL appears in the GET request step.

Import requests:

You have to import the requests library to fetch the HTML of the website.

import requests

GET request:

You have to make a GET request to the website. You can do this by pasting the URL into the requests.get() function.

r = requests.get('http://www.cleveland.com/metro/index.ssf/2017/12/case_western_reserve_university_president_barbara_snyders_base_salary_and_bonus_pay_tops_among_private_colleges_in_ohio.html')

Extract the Content:

Extract the content of the website using r.content. It gives the content of the website in bytes.

c = r.content

Import BeautifulSoup:

You have to import the BeautifulSoup library as it makes it easy to scrape information from web pages.

from bs4 import BeautifulSoup

Create a soup object:

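You have to create a BeautifulSoup object from the content. Naming the parser explicitly (here Python's built-in html.parser) avoids a warning and keeps the results consistent across machines. You can then work with the object using several methods.

soup = BeautifulSoup(c, 'html.parser')

print(soup.get_text())

This prints the text of the whole page.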

Extract Useful Data:

To extract the data we want, we first have to find the right HTML tags and CSS classes. We can find the main content on the webpage using the .find() method of the soup object.

main_content = soup.find('div', attrs = {'class': 'entry-content'})

Use .text attribute:

We can retrieve the information in the table as text using the .text attribute of the matching element.

content = main_content.find('ul').text

print(content)

We retrieved the text of the table as a string. But the information is far more useful if we extract specific parts of that string. To achieve this, we move on to regular expressions.
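The steps above can be combined into one short script. The following is a minimal sketch rather than a definitive implementation: the helper names fetch_html and extract_list_text and the SAMPLE_HTML snippet are hypothetical and exist only for illustration, and the entry-content class is taken from the step above.

```python
import requests
from bs4 import BeautifulSoup


def fetch_html(url: str, timeout: float = 10.0) -> bytes:
    """Download a page and return its raw bytes (hypothetical helper)."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()  # raise an error on 4xx/5xx responses
    return response.content


def extract_list_text(html) -> str:
    """Return the text of the first <ul> inside div.entry-content, or ''."""
    soup = BeautifulSoup(html, "html.parser")
    main_content = soup.find("div", attrs={"class": "entry-content"})
    if main_content is None:  # .find() returns None when nothing matches
        return ""
    items = main_content.find("ul")
    return items.text if items is not None else ""


# Hypothetical stand-in for a downloaded page, so the sketch runs offline.
SAMPLE_HTML = b"""
<div class="entry-content">
  <ul><li>Jane Doe, Example University: $1,234,567</li></ul>
</div>
"""

content = extract_list_text(SAMPLE_HTML)
print(content)
```

For a live page, you would pass the result of fetch_html(url) to extract_list_text in place of SAMPLE_HTML. Checking for None before reading .text avoids an AttributeError when the page layout differs from what the selectors expect.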

Regular Expressions In Python

A regular expression (RegEx) is a sequence of characters that defines a search pattern. The basic idea is to:

- Define a pattern that you want to match in a text string.

- Search the string and return the matches.
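As a small illustration of these two steps, the sketch below defines a pattern for runs of digits and searches a string for it. The sample text and the variable names are made up for this example.

```python
import re

# Step 1: define the pattern (one or more digits in a row).
number_pattern = re.compile(r"\d+")

# Step 2: search the string and collect every match as a list of strings.
text = "3 apples and 12 oranges"
matches = number_pattern.findall(text)

print(matches)  # ['3', '12']
```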

Suppose we want to extract the following pieces of info from the text table.

- Salaries

- Names of the colleges

- Names of the presidents

You can extract the three pieces of information by following the steps mentioned below.

Step 01:

Import re. To extract the salaries, you have to make a salary pattern. Use the re.compile() function to compile a regular expression pattern provided as a string into a RegEx pattern object. You can then use pattern.findall() to find all the matches and return them as a list of strings, where each string represents one match.

import re

salary_pattern = re.compile(r'\$.+')

salaries = salary_pattern.findall(content)

Step 02:

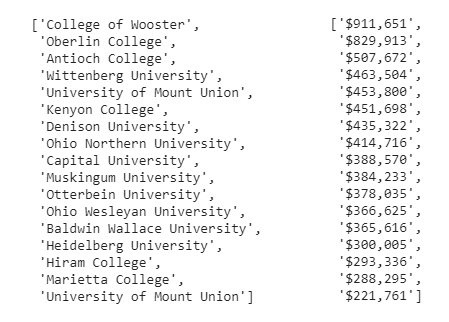

Repeat the same procedure for extracting the names of the colleges. Make a pattern and extract the names.

school_pattern = re.compile(r'(?:,|,\s)([A-Z]{1}.*?)(?:\s\(|:|,)')

schools = school_pattern.findall(content)

print(schools)

print(salaries)

Step 03:

Repeat the same procedure for extracting the names of the presidents. Make a pattern and extract the required names.

name_pattern = re.compile(r'^([A-Z]{1}.+?)(?:,)', flags = re.M)

names = name_pattern.findall(content)

print(names)
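To see what the three patterns from Steps 01 to 03 capture, it can help to run them on a small string. The sketch below uses two invented rows and assumes each row has the layout "Name, School: $salary"; the real page text may differ, so treat the sample as hypothetical.

```python
import re

# Hypothetical rows in the assumed "Name, School: $salary" layout.
sample = (
    "Jane Doe, Example University: $1,234,567\n"
    "John Roe, Sample College: $765,432"
)

# The same three patterns used in the steps above.
salary_pattern = re.compile(r"\$.+")
school_pattern = re.compile(r"(?:,|,\s)([A-Z]{1}.*?)(?:\s\(|:|,)")
name_pattern = re.compile(r"^([A-Z]{1}.+?)(?:,)", flags=re.M)

sample_salaries = salary_pattern.findall(sample)
sample_schools = school_pattern.findall(sample)
sample_names = name_pattern.findall(sample)

print(sample_salaries)  # ['$1,234,567', '$765,432']
print(sample_schools)   # ['Example University', 'Sample College']
print(sample_names)     # ['Jane Doe', 'John Roe']
```

The re.M flag makes ^ match at the start of every line, which is why the name pattern finds one name per row rather than only the first.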

Step 04:

The salaries are still strings with dollar signs and commas, so they cannot be used in calculations. We use a Python list comprehension to convert the salary strings into numbers, with string slicing to drop the dollar sign and split and join to remove the commas.

salaries = ['$876,001', '$543,903', '$2453,896']

[int(''.join(s[1:].split(','))) for s in salaries]

The output is:

[876001, 543903, 2453896]

Data Visualization In Python

Data visualization helps you understand the data visually so that the trends, patterns and correlations can be exposed. You can translate a large amount of data into graphs, charts, and other visuals to identify the outliers and gain valuable insights.

We can use matplotlib to visualize the data, as shown below.

Import the necessary libraries as shown below.

import pandas as pd

import matplotlib.pyplot as plt

Make a pandas dataframe of schools, names and salaries. For instance, you can convert the schools into a dataframe as:

df_school = pd.DataFrame(schools)

print(df_school)

The output is a single-column dataframe with one school per row.

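Likewise, you can do the same for salaries and names.

For data visualization, we can plot a horizontal bar graph. The call below assumes that df is a dataframe that combines the names and the numeric salaries in two columns named President and salary.

df.plot(kind='barh', x = 'President', y = 'salary')

plt.show()

The output is a horizontal bar chart with one bar per president.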
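One way to build such a dataframe is sketched below. The president labels are placeholders, the salary figures reuse the example list from Step 04, and the function name plot_salaries is hypothetical; in practice you would pass in the scraped names and the converted salaries.

```python
import matplotlib.pyplot as plt
import pandas as pd

# Placeholder values standing in for the scraped names and numeric salaries.
names = ["President A", "President B", "President C"]
salaries = [876001, 543903, 2453896]

# One row per president, with the column names used in the plot call.
df = pd.DataFrame({"President": names, "salary": salaries})


def plot_salaries(frame: pd.DataFrame) -> None:
    """Draw a horizontal bar chart of salary per president."""
    ordered = frame.sort_values("salary")  # smallest salary at the bottom
    ax = ordered.plot(kind="barh", x="President", y="salary", legend=False)
    ax.set_xlabel("Salary (USD)")
    plt.tight_layout()
    plt.show()
```

Calling plot_salaries(df) displays the chart. Sorting before plotting is optional, but it usually makes the bars easier to compare.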

Why Are Proxies Needed For Web Scraping?

Web scraping helps businesses extract useful information about markets and industries so that they can offer data-powered services and make data-driven decisions. Proxies are essential for scraping data from various websites effectively, for the following reasons.

- Avoiding IP Bans – To stop scrapers from making too many requests, business websites limit how much data a single client can crawl in a given time, a limit termed the crawl rate. Without this limit, heavy crawling slows the website down and makes it difficult for users to access the content they want. However, if you use a sufficiently large pool of proxies to scrape the data, you can stay within the rate limits on the target website. This is because the proxies send requests from different IP addresses, so no single address exceeds the limit, and you can extract the data you need.

- Enabling Access to Region-Specific Content – Businesses have to monitor their competitors' websites to offer appropriate product features and prices to customers in a specific geographical region. They can access all the content available in that region by using residential proxies with IP addresses located there.

- Enhanced Security – A proxy server adds an additional layer of security by hiding the IP address of the user’s device.

Do you know how many proxies are needed to get the above benefits? You can calculate the required number of proxies by using this formula:

Number of proxies = Number of access requests / Crawl Rate

The number of access requests depends on the following parameters.

- The frequency with which the scraper extracts information from a website

- Number of pages the user wants to scrape

On the other hand, the crawl rate is the number of requests a user is allowed to make in a certain amount of time. Some websites permit only a limited number of requests per user in order to differentiate automated requests from human ones.
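The formula above can be worked through with a short calculation. This is a sketch under two assumptions: both quantities are measured over the same time window (for example, per hour), and the result is rounded up because you cannot use a fraction of a proxy. The function name and the numbers are illustrative only.

```python
import math


def proxies_needed(access_requests: int, crawl_rate: int) -> int:
    """Number of proxies = number of access requests / crawl rate, rounded up."""
    if crawl_rate <= 0:
        raise ValueError("crawl_rate must be a positive number")
    return math.ceil(access_requests / crawl_rate)


# Illustrative figures: 50,000 requests per hour against a site that
# tolerates 600 requests per hour from a single IP address.
print(proxies_needed(50_000, 600))  # 84
```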

Using Proxies In Python

You can use proxies in Python by following the steps below.

- Import Python's requests module.

import requests

- Define the proxy and the URL to request. To rotate proxies, you would keep a pool of such addresses instead of a single one.

proxy = 'http://114.121.248.251:8080'

url = 'https://ipecho.net/plain'

- Use requests.get() to send a GET request, passing the proxy through the proxies parameter.

page = requests.get(url, proxies={"http": proxy, "https": proxy})

- You can get the content of the requested URL if there is no connection error.

print(page.text)

The output is the IP address that the target website sees, which should be the proxy's address rather than your own.
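Rotating through a pool of proxies can be sketched as follows. The proxy addresses are placeholders from a documentation-only IP range and will not work as written, and the names PROXY_POOL, next_proxies and fetch_via_proxy are hypothetical. The sketch retries with the next proxy whenever a request fails.

```python
import itertools

import requests

# Placeholder proxy addresses; replace them with proxies you actually control.
PROXY_POOL = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
]
_rotation = itertools.cycle(PROXY_POOL)  # endless round-robin over the pool


def next_proxies() -> dict:
    """Return a requests-style proxies mapping for the next proxy in the pool."""
    proxy = next(_rotation)
    return {"http": proxy, "https": proxy}


def fetch_via_proxy(url: str, attempts: int = 3, timeout: float = 10.0):
    """Try up to `attempts` proxies in turn; return the page text or None."""
    for _ in range(attempts):
        try:
            page = requests.get(url, proxies=next_proxies(), timeout=timeout)
            page.raise_for_status()
            return page.text
        except requests.exceptions.RequestException:
            continue  # this proxy failed, so move on to the next one
    return None
```

With working proxies in the pool, fetch_via_proxy('https://ipecho.net/plain') would return the IP address of whichever proxy handled the request. Setting a timeout keeps a dead proxy from stalling the whole run.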

Conclusion

We discussed how web scraping extracts data from websites automatically instead of by manual copying. Web scraping is a cost-efficient and time-saving process. Businesses use it to collect and restructure web information for making data-driven decisions and gaining valuable insights. Proxies are essential for safe web scraping because they hide the user's original IP address from the target website. You can use datacenter or residential proxies for web scraping, but prefer residential proxies, as they are harder for websites to detect. Further, we can use regular expressions in Python to match or find sets of strings, which means we can extract any string pattern from the text. We also saw that data visualization converts large amounts of data into charts, graphs, and other visuals that help us detect anomalies and identify useful trends in the data.