What Are Bad Bots, How To Detect and Block Them?


Does anything good come to mind when you hear the word “bots”? And do you know how to detect and block the bad ones? Chances are that most of what you have heard about bots is negative, and very little of it explains how to detect and block them.

However, not all bots are bad, and there are good ones. This article is about bad bots, how you can distinguish good from bad bots, and the harm they could cause to your website. Last but not least, you’ll discover how you can prevent the consequences of bad bots.

First, let’s dive into what bots are in non-specialists’ terms.

What are internet bots?

An internet bot is a software application that performs automated tasks over the internet. According to a report by the cybersecurity firm Barracuda, bots make up nearly two-thirds (64%) of all internet traffic, and bad bots alone account for 40% of all internet traffic. These statistics are as of September 2021.

The report also warns that if we do not enforce strict security measures, these bots will break through defenses and steal data, leading to poor site performance and data breaches.

Let’s look at some of the everyday tasks that a bot performs.

Typical examples of bots

An everyday example of bots at work is search engines such as Google, which use bots to crawl thousands of web pages and extract their content for indexing. Then, when you search for a phrase on Google, it knows where the information you want is available.

Likewise, transactional bots complete transactions on behalf of humans, and ticketing bots purchase tickets for popular events.

Also, since the rise of AI (Artificial Intelligence) and Machine Learning, business intelligence services have used bots to scrape product pages and social media testimonials to discover how a product is performing.

A major reason for preferring bots over humans for some of the above tasks is that bots can execute instructions hundreds or thousands of times faster than humans can.

Now let’s find out the difference between good and bad bots.

What are the different types of bots?

You can classify bots into good and bad bots, as I have mentioned previously. As with everything else, let’s have a look at the good bots first.

Good bots

We just looked at an example of a good bot, a search engine bot. Similarly, there are other good bots such as:

- Voice engine bots: Like search engine bots, these bots crawl the web to find answers to the queries users search for using voice searches. Alexa’s Crawler and Applebot (Siri) are some familiar voice search bots.

- Social network bots: These bots crawl the websites shared on Facebook and other social media platforms to make better suggestions, combat spam, and improve the online environment. Typical examples include Facebook Crawler and Pinterest Crawler.

- Copyright bots: These bots search digital content to discover copyright infringements. One prime example is YouTube’s Content ID, which YouTube makes available to copyright owners on the platform. Another example is the use of these bots on social media, where original content creation is the top priority.

- Marketing bots: SEO and content marketing tools mainly use these bots to crawl websites for backlinks, estimate traffic volume, and research organic and paid keywords. Examples include SEMrush bot and AhrefsBot.

- Data bots: These bots provide instant information about news, weather, and currency exchange rates. Prominent examples include the voice assistants behind Amazon Echo and Google Home.

- Trader bots: These bots help you find the best offers or promotions on products that you plan to buy online. Consumers and retailers make the best use of trader bots to find better price deals and edge out competitors.

What are some features of good bots?

As you can see, one of the distinct features of these good bots is that they perform a valuable task for a company or website visitors. Developers who built them do not do so with malicious intentions.

Also, good bots do not hinder the user experience of the websites they crawl. A good bot also respects the rules in a website’s robots.txt file, which specifies which pages may and may not be crawled.
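To make the robots.txt rule concrete, here is a minimal Python sketch of how a well-behaved crawler might check a site’s rules before requesting a page, using the standard library’s `urllib.robotparser`. The robots.txt content, the example.com URLs, and the crawler name `ExampleBot` are hypothetical, chosen only for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: "ExampleBot" (a made-up name) is barred from the
# whole site, while every other crawler is kept out of /admin/ only and is
# asked to wait 5 seconds between requests.
ROBOTS_TXT = """\
User-agent: ExampleBot
Disallow: /

User-agent: *
Disallow: /admin/
Crawl-delay: 5
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly instead of fetching it over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def may_crawl(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """A polite bot calls this before every request and skips disallowed URLs."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    print(may_crawl(rp, "Googlebot", "https://example.com/blog/post"))    # True
    print(may_crawl(rp, "Googlebot", "https://example.com/admin/login"))  # False
    print(may_crawl(rp, "ExampleBot", "https://example.com/blog/post"))   # False
    print(rp.crawl_delay("Googlebot"))                                    # 5
```

Nothing forces a bot to run this check: compliance is voluntary, which is exactly why robots.txt restrains good bots but not bad ones.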

On the downside, bots that visit a website consume server resources and bandwidth. So even genuine bots can unwittingly cause damage, and an overly aggressive search engine bot could even take a site down.

Having said all that, you can overcome these negative consequences with proper server configurations.

Now let’s get into what bad bots are.

Bad bots

Whereas good bots provide value to users, developers build bad bots to cause harm. Some of the common bad bots are:

Bots that inflate page views

Developers build these bots to send fake traffic to websites, inflating page view counts to trick site owners into believing their traffic has grown. In reality, there are no actual users; bots are incrementing the page views or likes.

Some web services use these bots to sell traffic. They claim they will send real users to your website, but in fact they end up sending bot traffic.

Some bots watch videos and inflate the number of views on them as well.

Spam bots

Spam bots visit web pages to carry out spammy tasks. A common example is automatically filling in online forms and comment boxes and submitting them by auto-clicking the submit button.

For instance, some of your business competitors may use them to post fake negative reviews of your products. Political campaigns are another frequent use of spam bots.

You may often notice that spammy comments contain URLs and special characters.

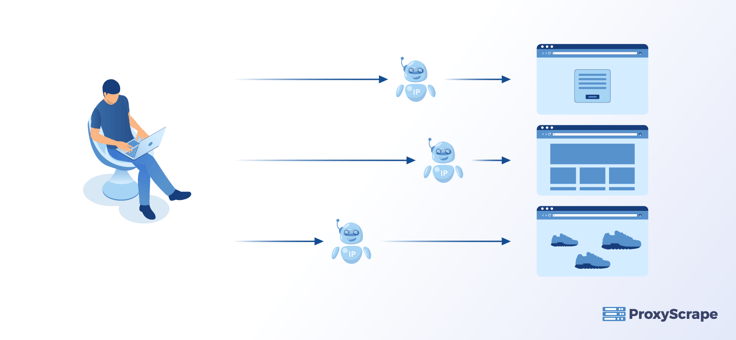

Web Scrapers

Web scrapers are internet bots that facilitate stealing your content. These scraper bots usually visit web pages and extract data without the consent of website administrators.

Although web scraping is generally not unlawful as long as the content is publicly available, requires no authentication, and is not protected by copyright, site owners usually dislike it. Web scrapers can also bring a website down if they send too many requests.

Checkout Bot

People mostly use checkout bots to buy (“cop”) limited-edition sneakers. Because these releases are so competitive and each customer is usually allowed to buy only a single pair, buyers employ checkout bots to speed up the online checkout process.

Bots complete this sneaker-copping process far faster than any human could. As a result, genuine buyers have little chance of getting any sneakers, since they can’t get anywhere near the speed of bots. You may find this an interesting read: What are Sneaker bots, and how are they used?

Botnets

Botnets are networks of computers that hackers have hijacked to carry out various cyberattacks.

Hackers build botnets to scale up, automate, and speed up their attacks, which lets them carry out larger assaults such as coordinated DDoS attacks. The botnet then uses your devices to defraud and disrupt other people’s devices without your knowledge or approval.

These infected devices are called zombie devices. For further information about botnets, you may refer to this article.

Account takeover bots

These bots usually steal login credentials using two of the most common automated methods: credential stuffing and credential cracking. The former uses mass login attempts to check whether stolen username and password pairs are valid.

In contrast, the latter involves bots trying different values for the username and password until they find a combination that works. Once a bot succeeds, it can break into a computer system, with potentially far-reaching consequences.
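The difference between the two methods shows up in failed-login logs. Below is a rough Python sketch that groups failed logins by source IP: many distinct usernames from one IP looks like credential stuffing, while many attempts against the same few usernames looks like credential cracking. The function name, the threshold of 20 failures, and the 0.5 ratio are illustrative assumptions. Real attacks are often spread across many IPs (for example, through a botnet), so treat this per-IP view as a starting point rather than a complete defense.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def classify_failed_logins(
    failures: Iterable[Tuple[str, str]],
    min_failures: int = 20,       # illustrative threshold, tune for your site
    stuffing_ratio: float = 0.5,  # distinct usernames / total attempts
) -> Dict[str, str]:
    """Label suspicious source IPs from (source_ip, username) failed-login
    pairs collected over one time window."""
    attempts: Dict[str, int] = defaultdict(int)
    usernames: Dict[str, Set[str]] = defaultdict(set)
    for ip, username in failures:
        attempts[ip] += 1
        usernames[ip].add(username)

    labels: Dict[str, str] = {}
    for ip, count in attempts.items():
        if count < min_failures:
            continue  # a few typos from a real person are normal
        distinct = len(usernames[ip])
        if distinct / count > stuffing_ratio:
            # Many different accounts, each tried about once: replaying stolen pairs.
            labels[ip] = "possible credential stuffing"
        else:
            # The same few accounts tried over and over: guessing passwords.
            labels[ip] = "possible credential cracking"
    return labels
```

For example, 30 failures from one IP across 30 different usernames would be labeled as possible stuffing, while 25 failures against `alice` alone would be labeled as possible cracking.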

Carding and card cracking bots

As you may have guessed, credit card fraud can occur on any website that uses a payment processor. Hackers use malicious bots to verify stolen credit card numbers by making small payments, a technique known as carding.

They also use card cracking to work out missing information such as expiry dates and CVV numbers.

These threats frequently target the retail, entertainment, and travel industries.
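Carding tends to leave a recognizable pattern in payment logs: one source tries many different cards, mostly for tiny amounts, and many of those attempts are declined. The Python sketch below flags that pattern. The `PaymentAttempt` record, its field names, and every threshold are hypothetical assumptions for illustration and would need tuning against real traffic.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable, Set


@dataclass
class PaymentAttempt:
    source: str        # hypothetical: IP address or session ID of the buyer
    card_token: str    # a token or hash of the card, never the raw number
    amount_cents: int
    approved: bool


def flag_carding(
    attempts: Iterable[PaymentAttempt],
    max_cards: int = 5,              # more distinct cards than this is unusual
    small_amount_cents: int = 200,   # a "small" test payment: 2.00 or less
    min_small_share: float = 0.8,
    min_decline_share: float = 0.5,
) -> Set[str]:
    """Return the sources whose payment attempts look like card testing."""
    total = defaultdict(int)
    small = defaultdict(int)
    declined = defaultdict(int)
    cards = defaultdict(set)
    for a in attempts:
        total[a.source] += 1
        cards[a.source].add(a.card_token)
        if a.amount_cents <= small_amount_cents:
            small[a.source] += 1
        if not a.approved:
            declined[a.source] += 1

    flagged = set()
    for src, n in total.items():
        if (
            len(cards[src]) > max_cards
            and small[src] / n >= min_small_share
            and declined[src] / n >= min_decline_share
        ):
            flagged.add(src)
    return flagged
```

All three conditions must hold together, so a real shopper whose single card is declined once is not flagged.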

How to detect bad bots

Bot detection and management require a great deal of investigation and expertise, since hackers design bots to go unnoticed. You need to dig deep to find out whether your site has any bot traffic. At the same time, you need to keep false positives (humans mistaken for bots) and false negatives (bots mistaken for humans) to a minimum.

That said, here are some signs that bots may have infiltrated your system:

Irregular spike in your traffic

Any site owner has access to site metrics, such as Google Analytics data. If those metrics show a drastic increase in traffic, particularly from locations you don’t usually get visitors from, it may indicate that bots are interacting with your site.

In such circumstances, you may also notice a sharp increase in your bounce rate.
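One simple way to make an “irregular spike” measurable is to compare each hour’s visit count with the hours just before it. The Python sketch below flags hours that sit more than a chosen number of standard deviations above a trailing baseline. The function name, the 24-hour window, and the threshold of 3 are assumptions to tune. You could run the same check separately per country or region to catch surges from unusual locations.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def find_traffic_spikes(
    hourly_visits: Sequence[int],
    window: int = 24,          # hours of history used as the baseline
    z_threshold: float = 3.0,  # how many standard deviations counts as a spike
) -> List[int]:
    """Return the indexes of hours whose visits are far above the trailing baseline."""
    spikes = []
    for i in range(window, len(hourly_visits)):
        baseline = hourly_visits[i - window:i]
        mu = mean(baseline)
        # Floor sigma at 1 so a perfectly flat baseline doesn't divide by zero.
        sigma = max(pstdev(baseline), 1.0)
        if (hourly_visits[i] - mu) / sigma > z_threshold:
            spikes.append(i)
    return spikes
```

For example, a day of traffic alternating between 100 and 110 visits per hour followed by an hour of 500 visits flags only that final hour (index 24).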

By Inspecting the Request Header

In most situations, less sophisticated bots do not send all of the headers that a browser usually sends; some even omit the User-Agent header entirely.

Even the more sophisticated bots that do send headers often send little more than the User-Agent string. So if you get requests with few or no headers, bots may be accessing your website. Browsers, in contrast, send a reasonable number of headers.
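The header check described above can be sketched in a few lines of Python. This is an illustrative heuristic, not a standard: the set of “typical browser headers”, the minimum header count, and the function name are assumptions. Because any client can forge headers, a clean result does not prove a visitor is human.

```python
from typing import Dict, List

# Assumption: headers that mainstream browsers normally send on a page request.
TYPICAL_BROWSER_HEADERS = {"accept", "accept-language", "accept-encoding"}
MIN_HEADER_COUNT = 4  # illustrative threshold


def header_red_flags(headers: Dict[str, str]) -> List[str]:
    """Return human-readable reasons a request's headers look bot-like."""
    # HTTP header names are case-insensitive, so normalize them first.
    names = {name.strip().lower() for name in headers}
    flags = []
    if "user-agent" not in names:
        flags.append("no User-Agent header")
    missing = sorted(TYPICAL_BROWSER_HEADERS - names)
    if missing:
        flags.append("missing " + ", ".join(missing))
    if len(names) < MIN_HEADER_COUNT:
        flags.append(f"only {len(names)} header(s) sent")
    return flags
```

A request carrying only `User-Agent: curl/8.0` would come back with two flags (missing browser headers and too few headers), while a typical browser request returns an empty list.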

Considerably slower server performance

As you learned above, bots that access your website consume its resources, including bandwidth. So if you receive heaps of requests from bots within a short timeframe, your website may become considerably slower.

However, some cunning bots go unnoticed by behaving like humans: they send requests at about the same rate a human would.

So you can’t always rely on a slowdown in performance as a sign of bot traffic.

High or low session durations

Typically, session duration (the amount of time a user spends on a website) is fairly steady. A sudden increase in session duration could mean that bots are browsing your website unusually slowly. On the other hand, bots may click through pages faster than a human user, causing a sudden drop in session duration.
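To turn these session signals into a check, one option is to compare each session with the site’s median duration and with a ceiling on how fast a person can plausibly click. The Python sketch below does that. The function name, the 10x factors, and the 30-pages-per-minute ceiling are assumptions. Short visits from real users (for example, quick bounces) can still trigger false positives.

```python
from statistics import median
from typing import Dict, List, Tuple

Session = Tuple[str, float, int]  # (session_id, duration_seconds, pages_viewed)


def flag_sessions(
    sessions: List[Session],
    low_factor: float = 0.1,    # shorter than 10% of the median looks odd
    high_factor: float = 10.0,  # longer than 10x the median looks odd
    max_pages_per_minute: float = 30.0,
) -> Dict[str, str]:
    """Return {session_id: reason} for sessions whose timing looks bot-like."""
    typical = median(duration for _, duration, _ in sessions)
    flagged = {}
    for session_id, duration, pages in sessions:
        if pages > 1 and duration > 0 and pages / (duration / 60) > max_pages_per_minute:
            flagged[session_id] = "clicking faster than a human plausibly could"
        elif duration < typical * low_factor:
            flagged[session_id] = "unusually short"
        elif duration > typical * high_factor:
            flagged[session_id] = "unusually long"
    return flagged
```

Checking the click rate first means a session that races through 40 pages in 5 seconds is reported for its speed rather than just its short length.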

Junk conversions and content postings

You may encounter form submissions from unusual email addresses, fake phone numbers, and names.

Another easy way to detect bots is by the content they post. Unlike humans, bots do not take the time to craft quality content. So when you start getting vague, low-quality comments or posts with embedded URLs, they are most likely coming from bots rather than real people.
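A crude content filter along these lines can be sketched in Python. The scoring rules (embedded URLs, very short messages, a high share of unusual symbols), their weights, and the function names are illustrative assumptions rather than an established spam-detection method, so expect to tune them and to combine them with the other signals in this section.

```python
import re

URL_PATTERN = re.compile(r"https?://\S+|www\.\S+", re.IGNORECASE)
WORD_PATTERN = re.compile(r"[A-Za-z']+")
ORDINARY_PUNCTUATION = set(".,!?'\"-:;()")


def spam_score(text: str) -> int:
    """Higher scores mean a comment or form entry looks more bot-generated."""
    score = 2 * len(URL_PATTERN.findall(text))  # each embedded link is a strong signal
    without_urls = URL_PATTERN.sub(" ", text)
    if len(WORD_PATTERN.findall(without_urls)) < 4:
        score += 1  # very short, vague posts
    symbols = sum(
        1 for ch in without_urls
        if not ch.isalnum() and not ch.isspace() and ch not in ORDINARY_PUNCTUATION
    )
    if without_urls.strip() and symbols / len(without_urls) > 0.1:
        score += 1  # lots of $, #, * and similar characters
    return score


def looks_like_spam(text: str, threshold: int = 2) -> bool:
    return spam_score(text) >= threshold
```

A comment such as “Great post!!! Visit http://cheap-pills.example now $$$ ###” trips both the URL and symbol rules, while an ordinary sentence of feedback scores zero.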

How to block bad bots

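As an initial step to combat bad bots, set up your site’s robots.txt file. You can configure this file to control which pages bots may access and to limit bot interactions with most pages on your website. Keep in mind, though, that robots.txt is only advisory: good bots respect it, but bad bots typically ignore it, so you’ll need other measures as well.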

Some of the other measures include:

Setting rate limits based on IP address – An IP address identifies the device (or network) accessing your website. As a web administrator, you can limit the number of requests an IP address makes to your site in a given period of time.

Blocking suspicious IP addresses – Besides setting rate limits, you can block a list of suspicious IP addresses from which bad requests originate. You could use a WAF (Web Application Firewall) for this purpose.

Use CAPTCHA services – You may have come across CAPTCHAs when accessing some websites. When a CAPTCHA service detects strange or bot-like behavior, it makes you solve a challenge before it grants access to the site.
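The first two measures can be sketched together in Python: a sliding-window rate limit per IP address plus a blocklist of suspicious networks. The `RequestGate` class, its limits, and the blocked range (a reserved documentation range) are hypothetical. In practice you might enforce this in a WAF, as mentioned above, rather than in application code. Also, many users can share one IP while botnets rotate through many, so IP-based rules work best alongside the other signals.

```python
import ipaddress
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, Optional


class RequestGate:
    """Hypothetical per-IP gate: a blocklist check, then a sliding-window rate limit."""

    def __init__(self, max_requests: int, window_seconds: float,
                 blocked_networks: Iterable[str] = ()) -> None:
        self.max_requests = max_requests
        self.window = window_seconds
        self.blocked = [ipaddress.ip_network(net) for net in blocked_networks]
        # ip -> timestamps of that IP's recently *allowed* requests
        self.history: Dict[str, Deque[float]] = defaultdict(deque)

    def is_blocked(self, ip: str) -> bool:
        addr = ipaddress.ip_address(ip)
        # An IPv4 address is never "in" an IPv6 network (and vice versa).
        return any(addr in net for net in self.blocked)

    def allow(self, ip: str, now: Optional[float] = None) -> bool:
        """Return True if the request should be served, False if rejected."""
        if self.is_blocked(ip):
            return False
        now = time.monotonic() if now is None else now
        recent = self.history[ip]
        # Drop timestamps that have slid out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.max_requests:
            return False  # over the limit; rejected requests are not recorded
        recent.append(now)
        return True


if __name__ == "__main__":
    gate = RequestGate(max_requests=3, window_seconds=10.0,
                       blocked_networks=["203.0.113.0/24"])
    print(gate.allow("203.0.113.9"))  # False: inside the blocked range
    print([gate.allow("198.51.100.1", now=t) for t in (0.0, 1.0, 2.0, 3.0)])
```

Because rejected requests are not recorded, a client that keeps hammering the site regains access as soon as its older allowed requests age out of the window. Counting every request instead would make the limit stricter for persistent bots.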

Conclusion

We hope you now have a comprehensive overview of bad bots, how to detect them, and how to get rid of them. You’ll still want to allow genuine bots, which cause no harm to your system. However, you should keep bad bots out at all costs, as the damage they can cause is far more severe.

We hope you found this article useful, and stay tuned for more articles.