When it comes to a wealth of resources, few sites rival Google, which indexes information on almost every topic. According to live internet statistics, nearly 5 billion people search the internet to find the information they need. Google can serve them because its bots crawl other sites and scrape their data so that the information is available to users.

Although Google crawls and scrapes other websites, it doesn’t allow bots to do the same on its own sites, and you would have to pay to scrape them. If you need to scrape for free, you must make sure that Google doesn’t block you.

This article focuses on how you can use proxies to scrape Google. But first, we’ll look at the different Google resources you can scrape.

Feel free to jump to any section to learn more about proxies for scraping Google without getting blocked!

Table of Contents

- What are the entities to scrape in Google?

- What are the barriers that exist when scraping Google sites?

- IP blocks

- Accessing geo-restricted content

- Google Captcha

- Getting trapped in a Honeypot

- Allowing your bot to get into a repetitive crawling pattern

- In What Ways Can You Overcome the Barriers to Google Scraping?

- What’s in it for you with Google proxies?

- Best Proxies for Scraping Google Without Getting Blocked:

- Some tips for a better scraping experience

- FAQs:

- Conclusion

What are the entities to scrape in Google?

We all know that Google Search plays a vital role in helping users find information. But did you know that Google also offers other sites, often called verticals, for searching specific kinds of information? Let’s dive into those verticals.

Google Scholar- This search engine lets you search scholarly articles in whichever subject area you want. It ranks articles based on the number of times other web pages or articles have cited them.

Google Places- This vertical provides the locations of local businesses that you search for in Google. For your business to appear on Google, you must register with Google Places, which is free. In addition to the location, you can find images, reviews, and other information relevant to the business, all of which you can scrape.

Google Patents- You may use this vertical to search for patents worldwide using topic keywords, names, and other identifiers. You may also look for patents in various formats, including ideas and drawings. If you’re working on a brand new product, Google Patents provides helpful information to scrape.

Google Images- Google Images is one of the most popular Google verticals, allowing you to search for images, vectors, and files in formats such as GIF, PNG, and JPEG. It determines whether an image is relevant to your search by looking at its context. You may also run a reverse image search and filter the results by size, color, orientation, date, and credentials. You may scrape these results and retrieve helpful information using a Google Images proxy.

Google Videos- This vertical started as a video streaming service. Later on, it became a search engine for videos across the entire web, including social media. With this vertical, you’ll have all the videos in one place, allowing you to find videos across various streaming services.

Google Trends- This vertical evaluates the popularity of top Google Search queries in different countries and languages. The website uses graphs to show the number of searches for various search terms over time, and you may use them to compare terms and evaluate trends. Google Trends is therefore an excellent source of data to scrape.

Google Shopping- This is another vertical where you can scrape large amounts of data related to shopping trends. It allows you to search for products on online shopping websites and compare prices among different vendors. You can filter the products by availability, vendor, and price range.

Google Finance- This specialized search engine shows stock quotes and financial news. It allows you to track your own portfolio, search for specific companies, and view investment patterns.

Google News- Google News is a news aggregation service created by Google. It displays a constant stream of links to articles categorized by publisher and magazine. You can access it on Android, iOS, and the web.

Google Flights- Google Flights is an online flight search engine that makes it easier to buy airline tickets through third-party vendors. Google released it in 2011 following an acquisition, and it is now an integral part of Google Travel.

Now that you’ve learned about Google’s sites, you can see how much data there is to scrape. When it comes to scraping large amounts of data from these sites, there are only a few options: pay Google, scrape manually, or scrape using bots.

If you want to scrape Google’s sites for free, manual scraping isn’t feasible when you need hundreds of thousands of data points. The only remaining option is to use a bot.

Then you’ll encounter the challenges we’ll discuss in the next section.

What are the barriers that exist when scraping Google sites?

IP blocks

When you scrape data with a bot, the Google site may block your IP address from any further scraping. This is because when you send many requests from the same IP address, the target website recognizes the activity as automated and bans you.

There are also limits on how many requests you can send to a target website within a given time. Exceeding this limit will get you banned.

Accessing geo-restricted content

You may not be able to extract data such as videos on Google Videos because of geo-restrictions. Some video or website owners don’t allow you to view the content unless you’re in the region or country where it is hosted. So you need to connect through a proxy located in a country that streams the video or hosts the content.

Google Captcha

Most websites employ captchas to keep bots out. Since bots operate at a superhuman speed compared to human activity on the web, the website will suspect that the traffic comes from a bot. So most websites, and Google in particular, confront you with a captcha.

Interesting read: How to Bypass CAPTCHAs When Web Scraping

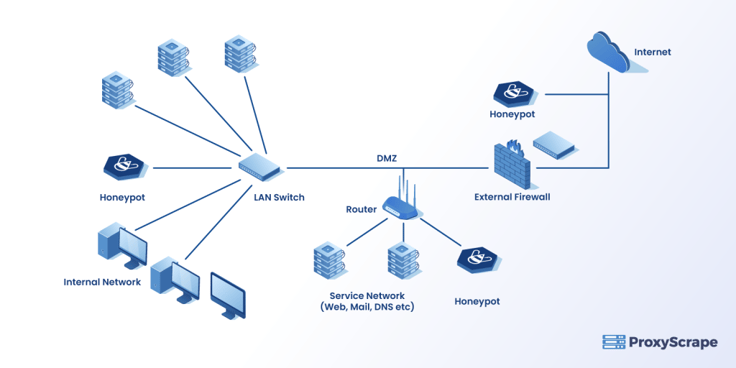

Getting trapped in a Honeypot

Many websites, including Google, use honeypots to trap bots and prevent unauthorized data collection.

That said, Google will not stop genuine users from researching its sites for meaningful purposes. However, there are malicious users who try to steal information for fraudulent purposes, and sites employ honeypots to thwart them.

Web developers usually disguise honeypot traps as links that are invisible to the human eye. Spiders and web crawlers, on the other hand, come across them in the code and follow them. To avoid them, you need to check the site for hidden links and configure your crawler to work around them. Look for anything that says “display: none” in the CSS code.
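As a rough illustration of the check described above, the following Python sketch collects links from a page and skips those hidden with inline CSS. It is a minimal sketch that only uses the standard library; the class and function names are hypothetical, and it only inspects each link’s own `style` and `hidden` attributes, so links hidden through external stylesheets or hidden parent elements would need additional handling.

```python
from html.parser import HTMLParser


class VisibleLinkCollector(HTMLParser):
    """Collect hrefs from <a> tags, skipping links hidden with inline CSS."""

    # Inline-style markers that typically indicate an invisible element.
    HIDDEN_MARKERS = ("display:none", "visibility:hidden")

    def __init__(self):
        super().__init__()
        self.visible = []
        self.skipped = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if not href:
            return
        # Normalize the style so "display: none" and "display:none" both match.
        style = (attr_map.get("style") or "").replace(" ", "").lower()
        is_hidden = "hidden" in attr_map or any(
            marker in style for marker in self.HIDDEN_MARKERS
        )
        (self.skipped if is_hidden else self.visible).append(href)


def extract_visible_links(html):
    """Return the hrefs of links that are not hidden by inline attributes."""
    parser = VisibleLinkCollector()
    parser.feed(html)
    return parser.visible
```

A crawler could pass each downloaded page through `extract_visible_links` and queue only the returned URLs.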

Interesting read: What are Honeypots?

Allowing your bot to get into a repetitive crawling pattern

Unless you explicitly define the crawling pattern, a bot usually follows one that is too predictable for the target website. A bot acts far faster than a human, and its actions are highly repetitive.

Humans are far more unpredictable than bots. Furthermore, Google has implemented sophisticated anti-bot mechanisms that can easily identify your bot.
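One way to make a crawl less predictable is to shuffle the order of the pages and pause for a random interval between requests. The sketch below shows the idea in Python; the function name `plan_crawl` and the delay bounds of 2 to 6 seconds are assumptions chosen for illustration, not values recommended by Google or by any proxy provider.

```python
import random


def plan_crawl(urls, min_delay=2.0, max_delay=6.0, rng=None):
    """Return (url, delay_seconds) pairs in shuffled order with random pauses."""
    rng = rng or random.Random()
    order = list(urls)
    # Visit pages in a different order on every run.
    rng.shuffle(order)
    # Draw an independent pause for each page so the timing is irregular.
    return [(url, rng.uniform(min_delay, max_delay)) for url in order]
```

In a real crawler you would loop over the plan, call `time.sleep(delay)`, and then fetch `url`.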

In What Ways Can You Overcome the Barriers to Google Scraping?

To overcome the issues mentioned above, you need proxies that are compatible with Google, also known as Google proxies. Google proxies are proxy servers that work with the Google applications outlined previously.

A proxy server masks your actual IP address and substitutes its own IP address. This lets you overcome location restrictions and timeouts, and it brings the other benefits outlined below:
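To show what routing traffic through a proxy looks like in practice, here is a minimal Python sketch that uses only the standard library. The proxy address is a placeholder from a documentation-only IP range, and the helper name `build_proxy_opener` is hypothetical; substitute the address your provider gives you.

```python
import urllib.request


def build_proxy_opener(proxy_url):
    """Return an opener that sends HTTP and HTTPS traffic through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Placeholder proxy address (assumption); replace it with a real proxy.
opener = build_proxy_opener("http://203.0.113.10:8080")

# The target site would then see the proxy's IP address instead of yours:
# with opener.open("https://www.google.com/search?q=proxies", timeout=10) as resp:
#     html = resp.read()
```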

What’s in it for you with Google proxies?

Overcome geo-restrictions: With Google proxies, you can overcome location restrictions by connecting with a proxy server from a location where your target content is hosted.

Monitor the rankings: Google rankings change constantly. You could be ranking among the top 10 results on Google in the morning and slip to the 2nd page by nighttime.

Part of the variation you see comes from personalization: when you check rankings for specific keywords, your personal preferences and the sites you have visited influence the results. With a Google proxy, you can see the actual rankings without any preference bias.

Scrape the data securely: Google or the target website only sees the IP address of the proxy server. This helps you stay anonymous online while scraping data with the bot.

To scrape Google SERPs: You can scrape Google’s SERPs for a particular keyword to monitor where your competitors rank for it. In addition, some users extract keyword ideas from SERPs and search for expired domains.

There is plenty of other information that you can gather by scraping SERPs.

Save time by using Google to collect data: Using Google proxies to scrape data allows you to automate the process with bots. The bots gather all of the information you want and organize it neatly.

Best Proxies for Scraping Google Without Getting Blocked:

ProxyScrape is one of the most popular and reliable proxy providers online. It offers three proxy services: dedicated datacenter proxies, residential proxies, and premium proxies. So, what are the best proxies for scraping Google? Before answering that question, it is best to look at the features of each proxy type.

A dedicated datacenter proxy is best suited for high-speed online tasks, such as streaming large amounts of data (in terms of size) from various servers for analysis purposes. This is one of the main reasons organizations choose dedicated proxies for transmitting large amounts of data in a short amount of time.

A dedicated datacenter proxy has several features, such as unlimited bandwidth and concurrent connections, dedicated HTTP proxies for easy communication, and IP authentication for more security. With 99.9% uptime, you can expect the dedicated datacenter proxy to be available for nearly every session. Last but not least, ProxyScrape provides excellent customer service and will help you resolve your issue within 24-48 business hours.

Next is the residential proxy. Residential proxies are the go-to choice for general consumers. The main reason is that the IP address of a residential proxy resembles an IP address assigned by an ISP, so the target server is more likely to treat your requests as ordinary user traffic and grant access to its data.

The other feature of ProxyScrape’s residential proxy is rotation. A rotating proxy helps you avoid a permanent ban because it dynamically changes your IP address, making it difficult for the target server to tell whether you are using a proxy.

Apart from that, the other features of a residential proxy are unlimited bandwidth and concurrent connections, dedicated HTTP/S proxies, proxies available for any session thanks to a pool of more than 7 million proxies, username and password authentication for more security, and, last but not least, the ability to change the country of the server. You can select your desired server by appending the country code to the username authentication.

The last one is the premium proxy. Premium proxies work the same way as dedicated datacenter proxies. The main difference is accessibility: with premium proxies, the proxy list (the list that contains the proxies) is made available to every user on ProxyScrape’s network. That is why premium proxies cost less than dedicated datacenter proxies.

So, what are the best proxies for scraping Google? The answer is the residential proxy. The reason is simple. As mentioned above, the residential proxy is a rotating proxy, meaning that your IP address changes dynamically over time. This lets you send a lot of requests within a short time frame without any single IP address getting blocked.

The next benefit is the ability to choose the proxy server by country. You just have to append the country ISO code to the end of the IP authentication or the username and password authentication.
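As an illustration of appending a country code to the username, the Python sketch below builds a proxy URL from its parts. The exact username format is provider-specific: the `-country-` separator, the host name, and the function name here are hypothetical placeholders, so check your provider’s documentation for the real syntax.

```python
from urllib.parse import quote


def proxy_url_for_country(username, password, host, port,
                          country_code=None, separator="-country-"):
    """Build a proxy URL, optionally appending a country code to the username.

    The separator is an assumption for illustration only; providers define
    their own username format.
    """
    if country_code:
        username = f"{username}{separator}{country_code.upper()}"
    # Percent-encode the credentials so characters like "@" or ":" stay valid.
    user = quote(username, safe="")
    secret = quote(password, safe="")
    return f"http://{user}:{secret}@{host}:{port}"
```

For example, `proxy_url_for_country("user", "p@ss", "proxy.example.com", 8080, "us")` returns `http://user-country-US:p%40ss@proxy.example.com:8080`.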

Some tips for a better scraping experience

Never use free proxies.

Free proxies do not provide sufficient security or anonymity for your connection, as they’re open to anyone. Furthermore, many users may share the same free proxy IP address, so target websites block these addresses very often.

Set the rate limit on the proxy

To make Google less suspicious of your traffic, you need to set rate limits on your proxies. As a good practice, use each unique proxy no more than once every three to five seconds. This makes the requests look more like they come from a human than from a bot.
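The per-proxy limit described above can be sketched as a small pool that tracks when each proxy may be used again. This is a minimal Python sketch, not a production scheduler: the class name `ProxyPool` is hypothetical, the 3 to 5 second gap comes from the tip above, and the injectable `clock` parameter exists only to make the logic easy to test.

```python
import random
import time


class ProxyPool:
    """Hand out proxies so each one rests a few seconds between uses."""

    def __init__(self, proxies, min_gap=3.0, max_gap=5.0, clock=time.monotonic):
        self._clock = clock
        self._min_gap = min_gap
        self._max_gap = max_gap
        start = clock()
        # Maps each proxy to the earliest time it may be used again.
        self._ready_at = {proxy: start for proxy in proxies}

    def acquire(self):
        """Return (proxy, wait_seconds) for the proxy that is free soonest."""
        now = self._clock()
        proxy = min(self._ready_at, key=self._ready_at.get)
        wait = max(0.0, self._ready_at[proxy] - now)
        # Reserve the proxy: it rests for a random gap after this use.
        gap = random.uniform(self._min_gap, self._max_gap)
        self._ready_at[proxy] = now + wait + gap
        return proxy, wait
```

A caller would run `proxy, wait = pool.acquire()`, then `time.sleep(wait)` before sending the request through `proxy`. With more proxies in the pool, the waits shrink because another proxy is usually already free.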

Be wary of captchas

As discussed earlier, various malicious actors try to steal data and launch large-scale cyberattacks. So, to be fair, Google employs captchas to prevent such attacks.

When you use Google proxies and do not intend to cause any harm, you’ll be on the safe side. Google will not immediately ban you if it finds out you’re using a Google proxy. Instead, Google will present you with a captcha to prove you are a human.

However, if you fail the captcha, you’re at risk of Google banning you. To avoid bans, you should rotate user agents and IP addresses, for example when using headless browsers, so that Google has the least reason to be suspicious.
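The rotation idea can be sketched in Python as follows. This sketch only shows the rotation logic with the standard library and does not drive a headless browser; the function name is hypothetical, the user-agent strings are example values that may be outdated, and the proxy addresses are placeholders.

```python
import itertools
import urllib.request

# Example user-agent strings (assumptions); keep your own list up to date.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]

# Placeholder proxy addresses (assumptions).
PROXIES = ["http://203.0.113.10:8080", "http://203.0.113.11:8080"]


def rotating_requests(urls, user_agents=USER_AGENTS, proxies=PROXIES):
    """Yield (Request, proxy) pairs, advancing user agent and proxy each time."""
    ua_cycle = itertools.cycle(user_agents)
    proxy_cycle = itertools.cycle(proxies)
    for url in urls:
        request = urllib.request.Request(url, headers={"User-Agent": next(ua_cycle)})
        yield request, next(proxy_cycle)
```

Each yielded request can then be sent through an opener configured for the paired proxy, so consecutive requests differ in both IP address and user agent.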

Suggested Reads:

- The Top 8 Best Python Web Scraping Tools In 2023

- How To Scrape Instagram Using Python

FAQs:

1. What is a proxy for scraping Google?

2. Which are the best proxies for scraping Google?

3. What is the use of a Google scraping proxy?

Conclusion

We hope you now understand the importance of scraping Google, which can provide a wealth of information to grow your business or support any other activity.

Scraping Google’s massive data is by no means a simple task, as you need to account for the many factors covered in this article.

If you do succeed, though, the payoff is worth it. We hope this article has given you enough information on proxies for scraping Google without getting blocked.