HTML selectors are key to web scraping, allowing developers to target specific elements on a webpage. By using these selectors, developers can extract data precisely.

Web scraping involves getting data from websites by navigating their HTML structure. HTML selectors are crucial, letting you pinpoint specific tags, attributes, or content. Whether extracting product prices or headlines, selectors are your guide.

Using HTML selectors effectively streamlines data extraction and reduces errors. They help you focus on important elements, saving time and effort in gathering insights from online sources.

In this blog, we will explore how to use the selectors below with Python and the BeautifulSoup library:

- ID Selectors

- Class Selectors

- Attribute Selectors

- Hierarchical Selectors

- Combination of these selectors together

ID Selectors

In HTML, an ID is meant to be a unique identifier: a well-formed page assigns each ID to only one element. This uniqueness makes ID selectors ideal for targeting a single element on a webpage. For instance, if you're scraping a webpage with multiple sections, each section may have its own ID, allowing you to extract data from a particular section without interference.

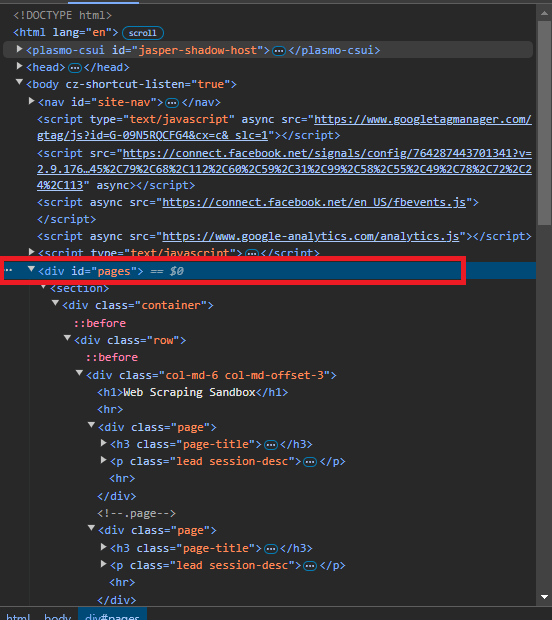

Let's take this website as an example, specifically the element <div id="pages"> ...</div>.

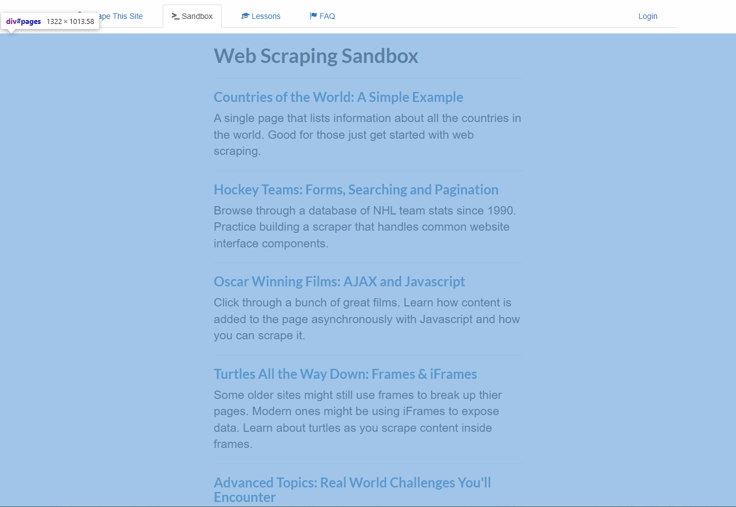

This element contains other nested HTML elements, but the key point is that it is unique on the page, which is useful when we want to scrape one particular section of the website. In this case, the element wraps several articles that we will work with using the other selectors below. The screenshots above show the element in the HTML and how this section looks on the page.

Let's explore a straightforward example using Python's "requests" and "bs4" libraries:

    import requests
    from bs4 import BeautifulSoup

    # Step 1: Send a GET request to the website
    url = "https://www.scrapethissite.com/pages/"
    response = requests.get(url)

    if response.status_code == 200:
        # Step 2: Parse the HTML content with BeautifulSoup
        soup = BeautifulSoup(response.text, 'html.parser')

        # Step 3: Find the div with id="pages"
        pages_div = soup.find("div", id="pages")

        # Step 4: Display the content or handle it as needed
        if pages_div:
            print("Content of the div with id='pages':")
            print(pages_div.text.strip())
        else:
            print("No div with id='pages' found.")
    else:
        print(f"Failed to retrieve the webpage. Status code: {response.status_code}")

Explanation:

- Send a Request: The requests library sends a GET request to fetch the HTML content from the target URL.

- Parse HTML: BeautifulSoup parses the HTML, allowing us to search through the document structure.

- Find Specific <div>: We use soup.find("div", id="pages") to locate the <div> element with id="pages".

- Display Content: If the <div> is found, we print its content. If not, a message indicates it’s missing.
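The same lookup can also be written as a CSS selector. The sketch below is only an illustration: it runs on a small made-up HTML string rather than the real page, so it needs no network request, and it compares find() with select_one() and the #pages selector.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML standing in for the real page (an assumption for this sketch)
html = """
<div id="header">Site header</div>
<div id="pages">
  <div class="page">First article</div>
  <div class="page">Second article</div>
</div>
"""

soup = BeautifulSoup(html, "html.parser")

# find() with id= and select_one() with "#pages" target the same element
by_find = soup.find("div", id="pages")
by_css = soup.select_one("#pages")

print(by_find == by_css)
print(by_css.get_text(" ", strip=True))
```

Both calls return only the first match, so if a page reuses an ID by mistake, later duplicates are silently ignored.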

Limitations of ID Selectors:

ID selectors are powerful but have limitations. Dynamic IDs that change with each page load can make consistent data extraction difficult. In these situations, using alternative selectors may be necessary for reliable results.
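When only part of an ID changes between page loads, one possible workaround is to match the stable part. The sketch below assumes a made-up ID of the form listing-<number>; the markup and names are hypothetical and only show the idea.

```python
import re
from bs4 import BeautifulSoup

# Hypothetical markup: the numeric suffix stands for a value that changes per load
html = """
<div id="listing-83921"><span class="price">19.99</span></div>
<div id="sidebar">Unrelated</div>
"""

soup = BeautifulSoup(html, "html.parser")

# Option 1: match the stable prefix of the id with a regular expression
by_regex = soup.find("div", id=re.compile(r"^listing-\d+$"))

# Option 2: the CSS "starts with" attribute selector does a similar job
by_css = soup.select_one('div[id^="listing-"]')

print(by_regex["id"], by_css.select_one(".price").text)
```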

Class Selectors

Class selectors are flexible because they let you target groups of elements that share the same class. This makes them essential for web pages with recurring elements. For instance, a website displaying a list of products may assign the same class to each product item.

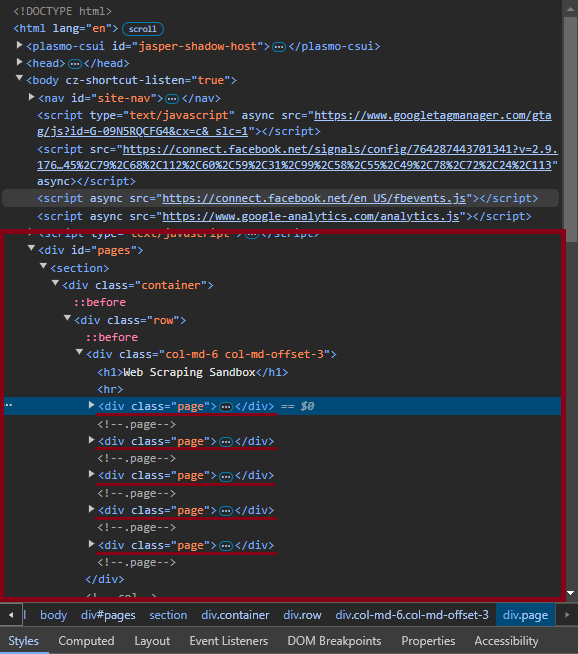

Let's use the same website again. Above, we located the <div id="pages"> element with an ID selector. Inside this div there are several articles that share the same class.

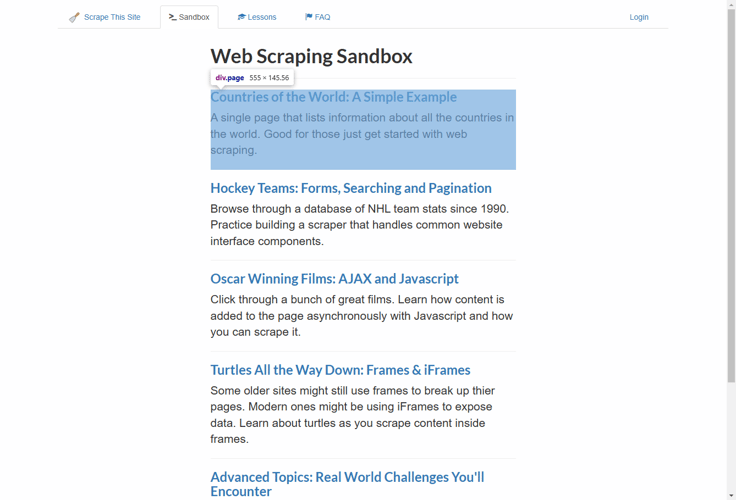

As you can see, four elements share the same class, <div class="page">. The screenshots above show them in the HTML and on the website.

In the code below, we will select all elements with the class "page", which will return a list that can be used for further parsing.

    import requests
    from bs4 import BeautifulSoup

    # Step 1: Send a GET request to the website
    url = "https://www.scrapethissite.com/pages/"
    response = requests.get(url)

    if response.status_code == 200:
        # Step 2: Parse the HTML content with BeautifulSoup
        soup = BeautifulSoup(response.text, 'html.parser')

        # Step 3: Find all elements with class="page"
        page_elements = soup.find_all("div", class_="page")

        # Step 4: Save each element's text content in a list
        pages_list = [page.text.strip() for page in page_elements]

        print("Content of elements with class 'page':")
        for i, page in enumerate(pages_list, start=1):
            print(f"Page {i}:")
            print(page)
            print("-" * 20)
    else:
        print(f"Failed to retrieve the webpage. Status code: {response.status_code}")

Explanation:

- Send a Request: We use requests to send a GET request to the URL, retrieving the HTML content of the webpage.

- Parse HTML with BeautifulSoup: If the request is successful, BeautifulSoup parses the HTML, enabling us to search and interact with elements.

- Find Elements by Class: We use soup.find_all("div", class_="page") to locate all <div> elements with the class "page", returning them as a list.

- Save to List: We extract and clean each element’s text content, saving it in a list called pages_list.
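Because each item returned by find_all() is itself a searchable element, the list can be parsed further instead of being flattened to text. A minimal sketch on made-up HTML (the markup and the records name are assumptions for illustration):

```python
from bs4 import BeautifulSoup

# Hypothetical HTML loosely modeled on a list of article blocks
html = """
<div class="page"><h3><a href="/pages/simple/">Simple</a></h3><p>One page</p></div>
<div class="page"><h3><a href="/pages/forms/">Forms</a></h3><p>Many pages</p></div>
"""
soup = BeautifulSoup(html, "html.parser")

records = []
for page in soup.find_all("div", class_="page"):
    # Each result is a Tag, so find() can be called on it again
    link = page.find("a")
    records.append({"title": link.get_text(strip=True), "href": link["href"]})

print(records)
```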

Limitations of Class Selectors

When using class selectors, be mindful of unintended matches. Searching for class_="page" matches every element whose class list includes "page", even if that element carries other classes as well, so elements with multiple classes may require additional filtering to achieve accurate targeting.
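To make the multiple-class case concrete, here is a small sketch on invented HTML. The class name featured is hypothetical. It compares three ways of matching and counts what each one returns.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML: three blocks that all carry the class "page"
html = """
<div class="page">Plain</div>
<div class="page featured">Featured</div>
<div class="featured page">Featured, classes reordered</div>
"""
soup = BeautifulSoup(html, "html.parser")

# A single class name matches every element that carries it
any_page = soup.find_all("div", class_="page")

# A space-separated string is compared with the exact class attribute value
exact = soup.find_all("div", class_="page featured")

# A CSS selector requires both classes, in any order
both = soup.select("div.page.featured")

print(len(any_page), len(exact), len(both))
```

The CSS form is usually the more forgiving choice when the order of class names in the markup is not guaranteed.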

Attribute Selectors

Attribute selectors allow you to target elements based on the presence, value, or partial value of specific attributes within HTML tags. This is particularly helpful when classes or IDs are not unique or when you need to filter elements with dynamic attributes, such as data-* or href values in links.
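The three kinds of match mentioned above (presence, exact value, partial value) can be sketched as follows. The HTML and the data-kind attribute are made up for illustration.

```python
import re
from bs4 import BeautifulSoup

# Hypothetical HTML; data-kind is an invented data-* attribute
html = """
<a href="/pages/simple/" data-kind="lesson">Simple</a>
<a href="/pages/forms/" data-kind="lesson">Forms</a>
<a href="https://example.com/about">About</a>
<a name="top">Anchor without href</a>
"""
soup = BeautifulSoup(html, "html.parser")

# Presence: any <a> that has an href attribute at all
with_href = soup.find_all("a", href=True)

# Exact value: data-* names contain a hyphen, so pass them through attrs={}
lessons = soup.find_all("a", attrs={"data-kind": "lesson"})

# Partial value: href starting with "/pages/", as a regex and as a CSS selector
by_regex = soup.find_all("a", href=re.compile(r"^/pages/"))
by_css = soup.select('a[href^="/pages/"]')

print(len(with_href), len(lessons), [a["href"] for a in by_css])
```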

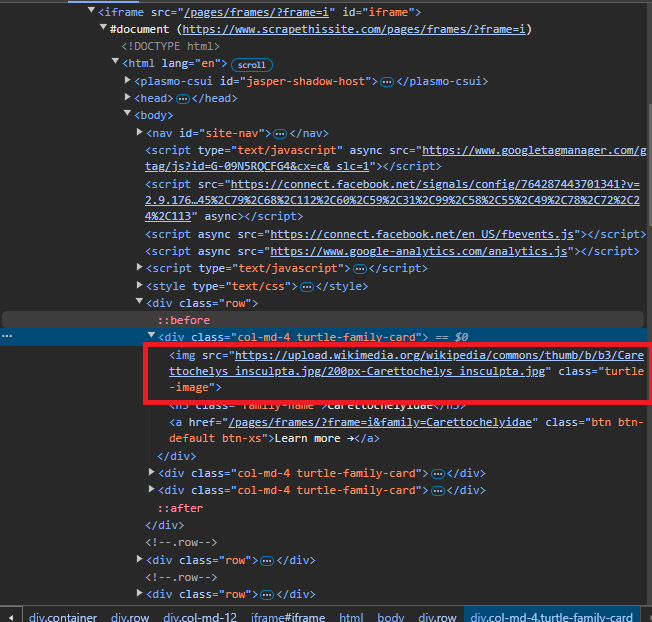

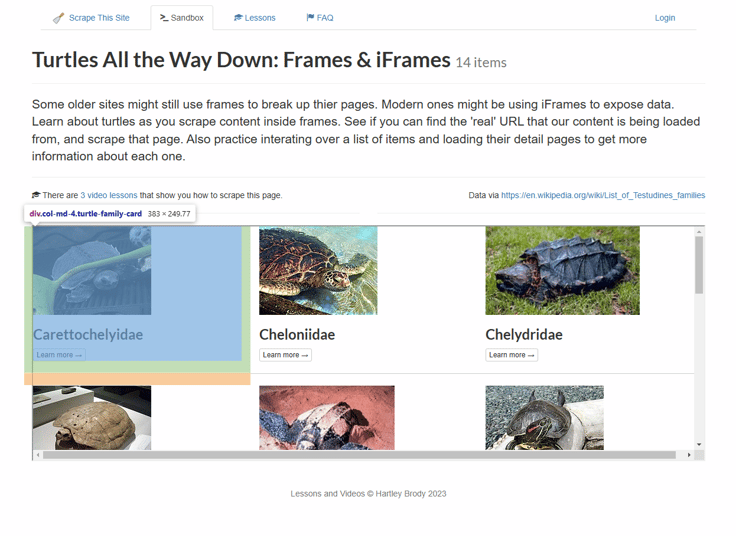

In the following example, we will select all images on this webpage and extract their source URLs (src attributes). The screenshots above show how the element looks in the HTML structure and in the webpage view.

In the following code, we utilize BeautifulSoup to parse all <img> elements, extracting their src attributes and storing them in a list.

    import requests
    from bs4 import BeautifulSoup

    # Step 1: Send a GET request to the website
    url = "https://www.scrapethissite.com/pages/frames/"
    response = requests.get(url)

    if response.status_code == 200:
        # Step 2: Parse the HTML content with BeautifulSoup
        soup = BeautifulSoup(response.text, 'html.parser')

        # Step 3: Find all <img> elements with a 'src' attribute
        image_elements = soup.find_all("img", src=True)

        # Step 4: Save the 'src' attributes in a list
        images_list = [img['src'] for img in image_elements]

        print("Image sources found on the page:")
        for i, src in enumerate(images_list, start=1):
            print(f"Image {i}: {src}")
    else:
        print(f"Failed to retrieve the webpage. Status code: {response.status_code}")

Limitations of Attribute Selectors

Attribute selectors only see attributes present in the HTML that was downloaded, making them less effective for dynamic content, like elements loaded through JavaScript. They depend on stable HTML structures, so frequent website layout changes can disrupt them. A single attribute filter is also often too coarse: if attributes like class or name are shared by multiple elements, it might pick up unintended elements, and precise targeting can require combining several conditions.
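One way to narrow an over-broad match is to require more than one attribute at once. The sketch below uses invented HTML, and the turtle-image class and the is_jpg_image helper are hypothetical names. It shows three options that BeautifulSoup supports: several keyword filters, a function filter, and a compound CSS selector.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML with images that share some attributes but not others
html = """
<img src="/static/logo.png" alt="Site logo">
<img src="/static/turtle-1.jpg" alt="Turtle" class="turtle-image">
<img src="/static/turtle-2.jpg" class="turtle-image">
<img alt="Placeholder without src">
"""
soup = BeautifulSoup(html, "html.parser")

# Several keyword filters must all match: has src AND has alt
described = soup.find_all("img", src=True, alt=True)


def is_jpg_image(tag):
    """Hypothetical helper: True for <img> tags whose src ends in .jpg."""
    return tag.name == "img" and tag.get("src", "").endswith(".jpg")


# A function filter can express arbitrary conditions on a tag
jpgs = soup.find_all(is_jpg_image)

# One CSS selector: class AND src suffix AND alt present
precise = soup.select('img.turtle-image[src$=".jpg"][alt]')

print(len(described), len(jpgs), len(precise))
```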

Hierarchical Selectors

Hierarchical selectors allow you to target HTML elements based on their position and relationship to other elements in the HTML structure. This approach is particularly useful when working with tables or nested lists, where data is organized in a parent-child format.
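As a rough illustration of parent-child targeting, the sketch below uses a tiny made-up table with placeholder team names and numbers. It shows the CSS descendant and child combinators, plus direct navigation with .parent and find_next_sibling().

```python
from bs4 import BeautifulSoup

# Hypothetical table; names and numbers are placeholders, not real data
html = """
<table class="table">
  <tr><th>Team</th><th>Wins</th></tr>
  <tr class="team"><td class="name">Team A</td><td class="wins">12</td></tr>
  <tr class="team"><td class="name">Team B</td><td class="wins">7</td></tr>
</table>
<p class="name">Not inside the table</p>
"""
soup = BeautifulSoup(html, "html.parser")

# Descendant combinator (space): td.name anywhere inside table.table
names = [td.get_text(strip=True) for td in soup.select("table.table td.name")]

# Child combinator (>): only <td> elements that are direct children of tr.team
cells = soup.select("tr.team > td")

# Navigating relationships directly: from a cell up to its row, then sideways
first_name = soup.select_one("td.name")
row = first_name.parent
wins = first_name.find_next_sibling("td", class_="wins").get_text(strip=True)

print(names, len(cells), row.name, wins)
```

Scoping the selector to the table keeps the stray <p class="name"> out of the results, which a bare class search would have included.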

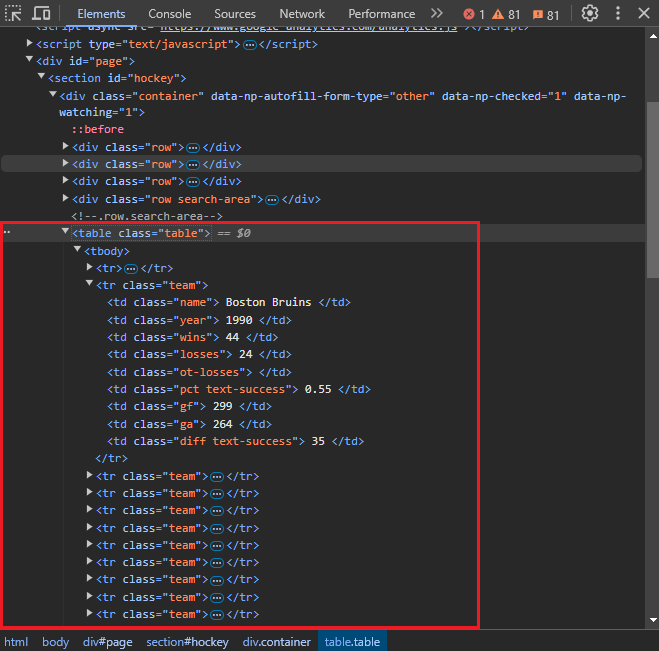

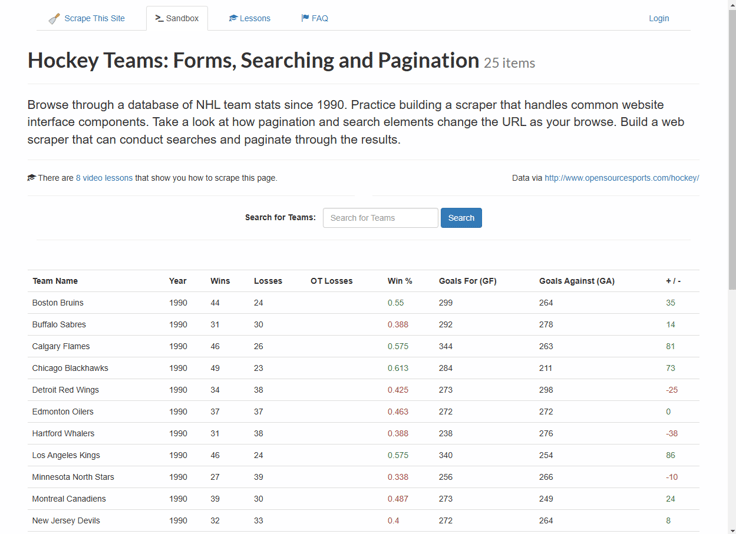

In this example, we’re using hierarchical selectors to scrape data from a table of hockey team statistics found on this webpage.

The table contains rows (<tr>), one per team, and each row contains cells (<td>) with information such as team name, year, wins, and losses. Each row has class="team", which identifies it as a relevant entry in our data. By navigating from the <table> to each <tr> and then to each <td>, we can capture the data in a structured way.

The two images above help you visualize where this table is located in the HTML structure and how it appears on the actual webpage.

Now, let’s look at the code below to see how hierarchical selectors can be used to extract this data:

    import requests
    from bs4 import BeautifulSoup

    url = "https://www.scrapethissite.com/pages/forms/"

    # Step 1: Send a GET request to the website
    response = requests.get(url)

    if response.status_code == 200:
        # Step 2: Parse the HTML content with BeautifulSoup
        soup = BeautifulSoup(response.text, 'html.parser')

        # Step 3: Find all rows in the table with class="team"
        teams_data = []
        team_rows = soup.find_all("tr", class_="team")

        # Step 4: Extract and store each team's data
        for row in team_rows:
            team = {
                "name": row.find("td", class_="name").text.strip(),
                "year": row.find("td", class_="year").text.strip(),
                "wins": row.find("td", class_="wins").text.strip(),
                "losses": row.find("td", class_="losses").text.strip(),
                "ot_losses": row.find("td", class_="ot-losses").text.strip(),
                "win_pct": row.find("td", class_="pct").text.strip(),
                "goals_for": row.find("td", class_="gf").text.strip(),
                "goals_against": row.find("td", class_="ga").text.strip(),
                "goal_diff": row.find("td", class_="diff").text.strip(),
            }
            teams_data.append(team)

        # Step 5: Display the extracted data
        for team in teams_data:
            print(team)
    else:
        print(f"Failed to retrieve the webpage. Status code: {response.status_code}")

Limitations of Hierarchical Selectors

Hierarchical selectors are dependent on the HTML structure, so changes in layout can easily break the scraping script. They are also limited to static content and cannot access elements loaded dynamically by JavaScript. These selectors often require precise navigation through parent-child relationships, which can be challenging in deeply nested structures. Additionally, they can be inefficient when extracting scattered data, as they must traverse multiple levels to reach specific elements.
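Since a layout change can remove a cell the script expects, one defensive pattern is to tolerate missing elements rather than call .text on a failed lookup. A minimal sketch on made-up rows, where cell_text is a hypothetical helper and not part of BeautifulSoup:

```python
from bs4 import BeautifulSoup

# Hypothetical rows; the second one lacks the "wins" cell on purpose
html = """
<table>
  <tr class="team"><td class="name">Team A</td><td class="wins">12</td></tr>
  <tr class="team"><td class="name">Team B</td></tr>
</table>
"""
soup = BeautifulSoup(html, "html.parser")


def cell_text(row, css_class):
    """Return the stripped text of the first matching <td>, or None if absent."""
    cell = row.find("td", class_=css_class)
    return cell.get_text(strip=True) if cell else None


teams = [
    {"name": cell_text(row, "name"), "wins": cell_text(row, "wins")}
    for row in soup.find_all("tr", class_="team")
]

print(teams)
```

Without the None check, the missing cell would raise an AttributeError and stop the whole run.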

Using Combined Selectors for Better Targeting

Each selector type serves a unique purpose, and combining them allows us to navigate and capture data accurately from nested or structured content. For example, using an ID selector can help locate the main content area, class selectors can isolate repeated elements, attribute selectors can extract specific links or images, and hierarchical selectors can reach elements nested within specific sections. Together, these techniques provide a powerful approach for scraping structured data.

    import requests
    from bs4 import BeautifulSoup

    # Step 1: Send a GET request to the target URL
    url = "https://www.scrapethissite.com/pages/"
    response = requests.get(url)

    if response.status_code == 200:
        # Step 2: Parse the HTML content with BeautifulSoup
        soup = BeautifulSoup(response.text, 'html.parser')

        # Use ID selector to find the main content
        main_content = soup.find(id="pages")

        # Use class selector to find each "page" section
        pages = main_content.find_all("div", class_="page") if main_content else []

        # Extract details from each "page" section using hierarchical selectors
        for page in pages:
            # Use hierarchical selector to find title link and URL within each "page"
            title_tag = page.find("h3", class_="page-title")
            title = title_tag.text.strip() if title_tag else "No Title"
            link = title_tag.find("a")["href"] if title_tag and title_tag.find("a") else "No Link"

            # Use class selector to find the description (looked up once, then reused)
            desc_tag = page.find("p", class_="lead session-desc")
            description = desc_tag.text.strip() if desc_tag else "No Description"

            print(f"Title: {title}")
            print(f"Link: {link}")
            print(f"Description: {description}")
            print("-" * 40)
    else:
        print(f"Failed to retrieve the webpage. Status code: {response.status_code}")

Explanation of the Code

- ID Selector: We start by locating the main content area with id="pages", which holds the sections we need.

- Class Selector: Inside this main area, we use class="page" to find each individual content block representing a section of interest.

- Hierarchical Selectors: Within each "page" block, we use page.find("h3", class_="page-title") to find the title and title_tag.find("a")["href"] to retrieve the link URL from the anchor tag in the title.

- Attribute Selector: We access the href attribute of each link to capture the exact URLs associated with each section.

- Output: The script prints each section’s title, link, and description, giving a structured view of the data scraped from the page.
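The four selector types can also be folded into a single CSS selector. The sketch below is one possible formulation, run on made-up HTML that only imitates the structure described above. The use of urljoin to resolve relative links is an addition of this sketch, not part of the script above.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

base_url = "https://www.scrapethissite.com/pages/"

# Hypothetical HTML imitating the structure discussed in this article
html = """
<div id="pages">
  <div class="page">
    <h3 class="page-title"><a href="/pages/simple/">Simple example</a></h3>
    <p class="lead session-desc">A single page.</p>
  </div>
  <div class="page">
    <h3 class="page-title">Title without a link</h3>
  </div>
</div>
<div class="page"><h3 class="page-title"><a href="/elsewhere/">Outside #pages</a></h3></div>
"""
soup = BeautifulSoup(html, "html.parser")

# ID + class + hierarchy + attribute presence in a single CSS selector
links = soup.select("#pages div.page > h3.page-title > a[href]")

# Resolve each relative href against the page URL
results = [(a.get_text(strip=True), urljoin(base_url, a["href"])) for a in links]

print(results)
```

Blocks without a link, or outside the #pages container, simply produce no match, so no per-field fallback checks are needed for this particular extraction.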

Conclusion

In web scraping, knowing how to use HTML selectors can greatly improve your data extraction skills, allowing you to gather important information accurately. Selectors like ID, class, attribute, and hierarchical selectors each have specific uses for different scraping tasks. By using these tools together, you can handle a wide range of web scraping challenges with confidence.

For practice, sites like Scrape This Site and Books to Scrape offer great examples to help you refine your skills. And if you need any help or want to connect with others interested in web scraping, feel free to join our Discord channel at https://discord.com/invite/scrape.

Happy scraping!