If you scrape data from large websites, you have almost certainly had to solve a CAPTCHA to prove that you're human. As a web scraper, you may already know why cybersecurity professionals were forced to invent them: bots were sending endless automated requests to websites. As a result, even genuine users now have to put up with CAPTCHAs, which appear in many different forms. However, it is possible to bypass CAPTCHAs, whether you're a web scraper or not, and showing how is this article's objective. But first, let's dive into what CAPTCHAs are.

- Bypass CAPTCHA For Web Scraping

- What is a CAPTCHA?

- How do CAPTCHAs operate?

- What is a ReCAPTCHA?

- How do ReCAPTCHAs work?

- What triggers CAPTCHAs and ReCAPTCHAs?

- Things to know about bypassing CAPTCHAs when web scraping

- How to bypass CAPTCHAs for Web Scraping

- Frequently Asked Questions

- Conclusion

Bypass CAPTCHA For Web Scraping

CAPTCHAs are usually triggered when a site detects unnatural traffic, so they can interrupt scrapers that extract data in large volumes. To get past this restriction, users look for a solution that can solve these CAPTCHA challenges and access the website like a real human being. One such solution is Capsolver. Using proxies with your web requests also helps your traffic appear natural.

What is a CAPTCHA?

CAPTCHA stands for Completely Automated Public Turing test to tell Computers and Humans Apart. That's a pretty long acronym, isn't it? Now you may be wondering what the "Turing test" part of this acronym means. Here, it is a simple test to determine whether a human or a bot is interacting with a web page or web server.

In short, a CAPTCHA differentiates humans from bots, helping cybersecurity analysts safeguard web servers from brute-force attacks, DDoS attacks, and, in some situations, web scraping.

Let’s find out how CAPTCHAs differentiate humans from bots.

How do CAPTCHAs operate?

You can find CAPTCHAs in a website's forms, including contact, registration, comment, sign-up, and check-out forms.

Traditional CAPTCHAs include an image with stretched or blurred letters, numbers, or both in a box with a background color or transparent background. Then you have to identify the characters and type them in the text field that follows. This process of identifying characters is easier for humans but somewhat complicated for a bot.

The idea of blurring or distorting the CAPTCHA text is to make it harder for a bot to identify the characters. In contrast, human beings can interpret characters in various formats, such as different fonts and handwriting. Having said that, not every human can solve a CAPTCHA on the first attempt. According to research, 8% of users mistype on their first attempt, while 29% fail if the CAPTCHAs are case-sensitive.

On the other hand, over the years some advanced bots have learned to interpret distorted letters with the help of machine learning. As a result, some companies such as Google replaced conventional CAPTCHAs with more sophisticated ones. One such example is ReCAPTCHA, which you will discover in the next section.

What is a ReCAPTCHA?

ReCAPTCHA is a free service that Google offers. It asks users to tick boxes rather than type text or solve puzzles or math equations.

A typical ReCAPTCHA is more advanced than conventional forms of CAPTCHAs. It uses real-world images and texts such as traffic lights in streets, texts from old newspapers, and printed books. As a result, the users don’t have to rely on old-school CAPTCHAs with blurry and distorted text.

How do ReCAPTCHAs work?

There are three significant types of ReCAPTCHA tests to verify whether you’re a human being or not:

Checkbox

These are the ReCAPTCHAs that request the users to tick a checkbox, “I’m not a robot” like in the above image. Although it may seem to the naked eye that even a bot could complete this test, several factors are taken into account:

- This test investigates the user’s mouse movements as it approaches the check box.

- A human's mouse movements are never perfectly straight, even when moving directly to a target. It is challenging for a bot to mimic the same behavior.

- Finally, the ReCAPTCHA would inspect the cookies that your browser stores.

If the ReCAPTCHA fails to verify that you’re a human, it will present you with another challenge.

Image Recognition

These ReCAPTCHAs provide users with nine or sixteen square images as you can see in the above image. Each square represents a part of a larger image or different images. A user must select squares representing specific objects, animals, trees, vehicles, or traffic lights.

If the user’s selection matches the selections of other users who have performed the same test, the user is verified. Otherwise, the ReCAPTCHA will present a more challenging test.

No Interaction

Did you know that ReCAPTCHA can verify whether you’re a human or not without using checkboxes or any user interactions?

It does so by considering the user's history of interacting with websites and the user's general behavior while online. In most scenarios, these factors are enough for the system to determine whether you're a bot.

If they are not, the system falls back to one of the two previously mentioned methods.

What triggers CAPTCHAs and ReCAPTCHAs?

CAPTCHAs can be triggered if a website detects unusual activity resembling bot behavior. Such behavior includes sending a large number of requests within a fraction of a second and clicking on links at a far higher rate than humans do.

Some websites also have CAPTCHAs in place by default to shield their systems.

As far as the ReCAPTCHAs are concerned, it is not exactly clear what triggers them. However, general causes are mouse movements, browsing history, and tracking of cookies.

Things to know about bypassing CAPTCHAs when web scraping

You now have a clear overview of what CAPTCHAs and ReCAPTCHAs are, how they operate, and what triggers them. It's time to look into how CAPTCHAs affect web scraping.

CAPTCHAs can hinder web scraping because automated bots carry out most scraping operations. However, do not get disheartened. As mentioned at the beginning of this article, there are ways to overcome CAPTCHAs when scraping the web. Before we get to them, let's turn our attention to what you need to be aware of before you scrape.

Sending too many requests to the target website

First of all, you must ensure that your web scraper/crawler does not send too many requests in a short period. Some websites state in their terms and conditions how many requests they allow. Make sure to read them before you start scraping.
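One way to keep the request rate down is to enforce a minimum gap between consecutive requests. The following is a minimal sketch, not a definitive implementation: the `Throttle` class, its default delay of 2 seconds, and the 1 second of random jitter are all assumptions chosen for illustration, so adjust them to whatever the target site allows.

```python
import random
import time


class Throttle:
    """Enforce a minimum, slightly randomized gap between consecutive requests."""

    def __init__(self, min_delay=2.0, jitter=1.0, clock=time.monotonic, sleep=time.sleep):
        self.min_delay = min_delay  # assumed base gap in seconds
        self.jitter = jitter        # extra random wait between 0 and jitter seconds
        self._clock = clock         # injectable so the class can be tested without waiting
        self._sleep = sleep
        self._last = None           # time of the previous request, if any

    def wait(self):
        """Sleep just long enough to honor the gap, then record the current time."""
        target_gap = self.min_delay + random.uniform(0, self.jitter)
        if self._last is not None:
            elapsed = self._clock() - self._last
            if elapsed < target_gap:
                self._sleep(target_gap - elapsed)
        self._last = self._clock()
```

You would call `throttle.wait()` immediately before each request. The first call returns at once, and later calls pause only for the part of the gap that has not already passed.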

HTTP headers

When you connect to a website, you send information about your device to that website in the HTTP headers. The site may use this information to tailor content to your device and to track metrics. If it notices that a large number of requests come from the same device, it may block any request you send afterward.

So, if you have developed the web scraper/crawler on your own, you can change the header information for each request that your scraper makes. It would then appear to the target website that it is receiving requests from multiple different devices.

IP address

You should also make sure that the target website has not blacklisted your IP address. Websites are likely to blacklist your IP address when you send too many requests with your scraper/crawler.

To overcome the above issue, you can use a proxy server as it masks your IP address.

Rotating the HTTP headers and proxies (more on this in the next section) from a pool makes it appear that multiple devices are accessing the website from different locations, so you should be able to continue scraping without interruption from CAPTCHAs. That said, you must ensure you're not harming the performance of the website by any means.

However, note that proxies will not help you overcome CAPTCHAs in registration, password change, check-out forms, etc. They can only help you avoid CAPTCHAs that websites trigger in response to bot-like behavior. To get past CAPTCHAs in such forms, we will look into CAPTCHA solvers in a forthcoming section.

Other types of CAPTCHAs

In addition to the above key factors, you need to know the CAPTCHAs below when web scraping with a bot:

Honeypots: A honeypot is a type of CAPTCHA placed in an HTML form field or link that is hidden from view with CSS. A bot that interacts with it has therefore revealed itself to be a bot. So before making your bot scrape the content, check the element's CSS properties to make sure it is actually visible.

Word/Math CAPTCHA: These CAPTCHAs ask you to solve a math equation, such as "3+7". There can also be word puzzles to solve.

Social media sign-in: Some websites require you to sign in with your Facebook account, for example. However, this is not popular, as most administrators know that people are reluctant to sign in with their social media accounts.

Time tracking: These CAPTCHAs monitor how fast you carry out a specific action, such as filling out a form, to determine whether you are a human or a bot.

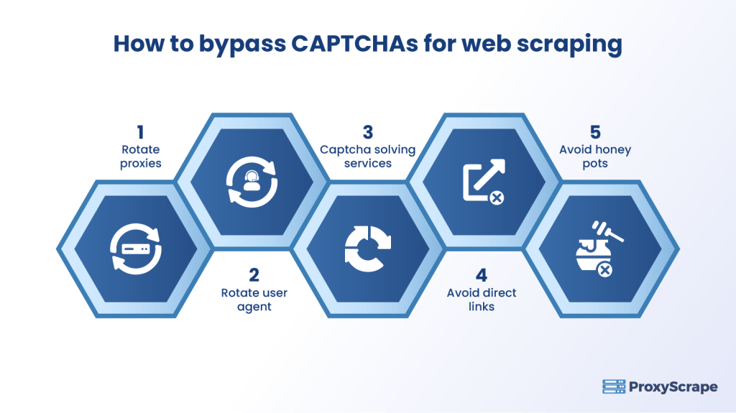

How to bypass CAPTCHAs for Web Scraping

Rotate proxies & use quality IP addresses

As mentioned in the previous section, you should rotate proxies as you send requests to the target website. It is one way to avoid the CAPTCHAs that are triggered while you scrape. For this purpose, you need to use clean residential IP proxies.

When you rotate proxies, it is difficult for the target website to determine your IP footprint. This is because, for each request, the proxy's IP address appears rather than your own.
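As a rough sketch of round-robin proxy rotation using only Python's standard library: the addresses in `PROXY_POOL` are placeholders from a reserved documentation range, and `next_proxy` and `build_opener_for` are hypothetical helper names, so substitute the endpoints your own proxy provider gives you.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints; replace with addresses from your own provider.
PROXY_POOL = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]

_proxy_cycle = itertools.cycle(PROXY_POOL)


def next_proxy():
    """Return the next proxy URL in round-robin order."""
    return next(_proxy_cycle)


def build_opener_for(proxy_url):
    """Create an opener that routes HTTP and HTTPS traffic through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)
```

For each request you would then call something like `build_opener_for(next_proxy()).open(url, timeout=10)`, so consecutive requests leave through different IP addresses.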

Rotate User agents

Since you will be using a scraper for web scraping, you will need to disguise the user agent as that of a popular web browser or of a bot that websites recognize, such as a search engine bot.

Merely changing the user agent will not be sufficient as you will need to have a list of user-agent strings and then rotate them. This rotation will result in the target website seeing you as a different device when in reality, one device is sending all the requests.

As a best practice for this step, it would be great to keep a database of real user agents. Also, delete the cookies when you no longer need them.
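A minimal sketch of user-agent rotation might look like the following. The strings in `USER_AGENTS` are illustrative examples only and will go stale, the `Accept-Language` value is an assumption, and `build_request` is a hypothetical helper that builds a request without sending it.

```python
import random
import urllib.request

# Example user-agent strings; treat these as placeholders and keep your own list current.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def build_request(url, rng=random):
    """Build (but do not send) a request with a randomly chosen User-Agent."""
    headers = {
        "User-Agent": rng.choice(USER_AGENTS),
        "Accept-Language": "en-US,en;q=0.9",  # assumed value for illustration
    }
    return urllib.request.Request(url, headers=headers)
```

Passing the returned object to `urllib.request.urlopen` sends it with the chosen headers. Picking a new string per request is the simplest policy, while keeping one string per session is another option if the site tracks cookies.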

CAPTCHA Solving Services

A more straightforward, less technical way to solve a CAPTCHA is to use a CAPTCHA-solving service. These services use Artificial Intelligence (AI), Machine Learning (ML), and a combination of other technologies to solve a CAPTCHA.

Some of the prominent CAPTCHA solvers currently existing in the industry are Capsolver and Anti-CAPTCHA.

Avoid direct links

When you let your scraper access a URL directly many times per second, the receiving website is likely to become suspicious. As a result, the target website may trigger a CAPTCHA.

To avoid such a scenario, you could set the referer header to make it appear to be referred from another page. It would reduce the likelihood of getting detected as a bot. Alternatively, you could make the bot visit other pages before visiting the desired link.
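Both ideas can be combined by walking a plausible path through the site and setting each request's Referer header to the page visited just before it. This is only a sketch under assumptions: `requests_for_path` is a hypothetical helper, the URLs in the usage note are placeholders, and the requests are built but not sent.

```python
import urllib.request


def requests_for_path(urls):
    """Yield unsent requests for urls in order, each referred by the previous URL."""
    previous = None
    for url in urls:
        headers = {}
        if previous is not None:
            headers["Referer"] = previous  # pretend we arrived from the prior page
        yield urllib.request.Request(url, headers=headers)
        previous = url
```

For example, passing `["https://example.com/", "https://example.com/category", "https://example.com/category/item-42"]` yields three requests, where the second is referred by the home page and the third by the category page.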

Avoid Honeypots

Honeypots are hidden elements on a webpage that security experts use to trap bots or intruders. Although the element is present in the page's HTML, its CSS properties are set to hide it. Humans never see it, but a bot that reads the raw HTML does, and when the bot interacts with it, it falls into the trap set by the honeypot.

So before you commence scraping, check the CSS properties of the elements your bot will interact with and make sure they are not hidden or invisible. Only let your bot follow links and fill in fields that a human visitor could actually see.
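A simple version of this check can be sketched with Python's built-in HTML parser. It is deliberately limited: it only looks at inline `style` attributes and the `hidden` attribute on links and form fields, and it does not evaluate external stylesheets or hidden parent elements, which a real page may well use. `HoneypotFinder` and `find_honeypots` are hypothetical names.

```python
import re
from html.parser import HTMLParser

# Inline-style patterns that hide an element; external stylesheets are not evaluated.
_HIDDEN_STYLE = re.compile(r"display\s*:\s*none|visibility\s*:\s*hidden", re.IGNORECASE)


class HoneypotFinder(HTMLParser):
    """Collect links and form fields hidden via inline CSS or the `hidden` attribute."""

    WATCHED_TAGS = {"a", "input", "textarea", "select"}

    def __init__(self):
        super().__init__()
        self.suspects = []  # list of (tag, name-or-href) tuples

    def handle_starttag(self, tag, attrs):
        if tag not in self.WATCHED_TAGS:
            return
        attr_map = dict(attrs)
        style = attr_map.get("style") or ""
        if "hidden" in attr_map or _HIDDEN_STYLE.search(style):
            self.suspects.append((tag, attr_map.get("name") or attr_map.get("href")))


def find_honeypots(html):
    """Return the watched elements in `html` that appear to be hidden."""
    finder = HoneypotFinder()
    finder.feed(html)
    return finder.suspects
```

Your scraper can then skip any link or field that `find_honeypots` returns. Note that ordinary `<input type="hidden">` fields are not flagged, since forms legitimately use them for things like CSRF tokens.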

Frequently Asked Questions

1. What does bypassing CAPTCHAs for Web Scraping mean?

2. What is ReCaptcha?

3. How will a proxy help users in bypassing Captchas?

Conclusion

This article should have given you a comprehensive idea of how to avoid CAPTCHAs while scraping the web. Avoiding a CAPTCHA can be a complicated process. However, with the techniques discussed in this article, you can develop your bot in such a way as to avoid CAPTCHAs.

We hope you'll make use of all the techniques discussed in this article.